Story

Sales Research Agent and Sales Research Bench

Key takeaway

Enterprises can now use an AI agent to quickly find sales data insights, rather than manually searching through CRM systems. This makes sales teams more efficient and helps leaders make better-informed decisions.

Quick Explainer

The Sales Research Agent is an AI-powered tool that automatically generates business research by connecting to live CRM data and other sources. It uses a multi-agent, multi-model architecture to deliver insights as text narratives and data visualizations. To evaluate such AI solutions, Microsoft created the Sales Research Bench, a specialized benchmark of real-world business questions scored on key quality dimensions such as relevance, explainability, and visualization quality. In head-to-head tests, the Sales Research Agent outperformed Claude Sonnet 4.5 and ChatGPT-5, demonstrating that it can handle complex, customized enterprise data and deliver trustworthy, actionable insights for sales leaders.

Deep Dive

Technical Deep Dive: Sales Research Agent and Sales Research Bench

Overview

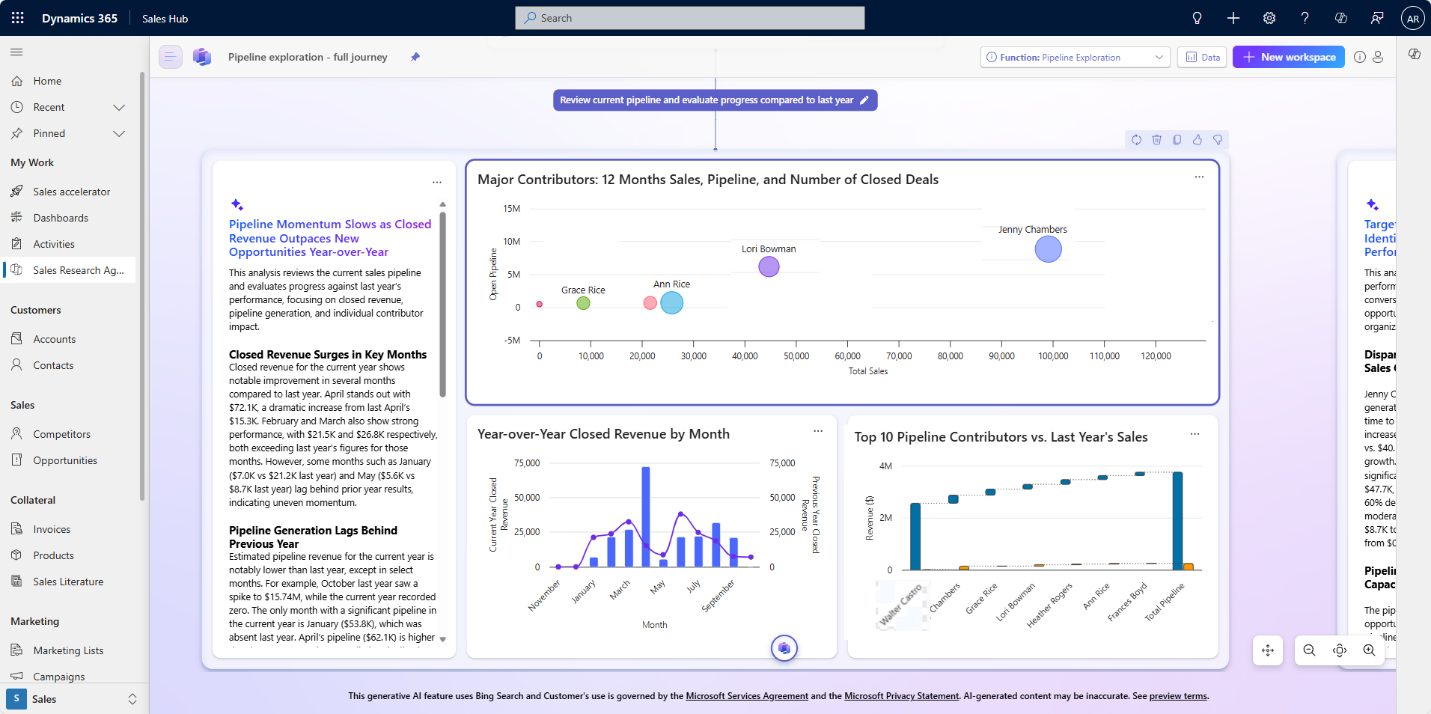

The Sales Research Agent in Microsoft's Dynamics 365 Sales is an AI-powered tool that automatically connects to live CRM data and other data sources to produce decision-ready insights through text-based narratives and data visualizations. It uses a multi-agent, multi-model architecture to provide high-quality business research at scale.

To evaluate the Sales Research Agent and other AI solutions, Microsoft created the Sales Research Bench, a purpose-built benchmark of 200 real-world business questions scored on 8 weighted quality dimensions, including text and chart groundedness, relevance, explainability, schema accuracy, and visualization quality. In head-to-head tests, the Sales Research Agent outperformed Claude Sonnet 4.5 and ChatGPT-5.

Problem & Context

Sales leaders need AI-powered tools that can quickly generate rich research insights from complex, customized business data. However, the market is crowded with AI offerings of varying quality, making it difficult for customers to evaluate which solutions are truly enterprise-ready.

Methodology

- Microsoft developed the Sales Research Bench, a composite benchmark with 200 real-world business research questions covering 8 weighted quality dimensions:

  - Text Groundedness (25%)

  - Chart Groundedness (25%)

  - Text Relevance (13%)

  - Explainability (12%)

  - Schema Accuracy (10%)

  - Chart Relevance (5%)

  - Chart Fit (5%)

  - Chart Clarity (5%)

- The evaluation dataset used a customized enterprise data schema reflecting real-world complexity.

- The Sales Research Agent, Claude Sonnet 4.5, and ChatGPT-5 were each evaluated using the Sales Research Bench, with their responses scored by LLM judges.
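The weighting above can be illustrated with a minimal sketch. The weights are the ones listed in this section; the aggregation rule is an assumption (a plain weighted sum of per-dimension scores, each on a 0-100 scale), since the exact formula is not described here, and the names `WEIGHTS` and `composite_score` as well as the example scores are hypothetical.

```python
# Dimension weights as listed in the Methodology section (they sum to 100%).
WEIGHTS = {
    "text_groundedness": 0.25,
    "chart_groundedness": 0.25,
    "text_relevance": 0.13,
    "explainability": 0.12,
    "schema_accuracy": 0.10,
    "chart_relevance": 0.05,
    "chart_fit": 0.05,
    "chart_clarity": 0.05,
}


def composite_score(dimension_scores: dict[str, float]) -> float:
    """Weighted sum of per-dimension scores.

    Assumption: each score is on a 0-100 scale and the composite is a
    simple weighted sum. The benchmark's actual aggregation may differ.
    """
    missing = WEIGHTS.keys() - dimension_scores.keys()
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    return sum(WEIGHTS[name] * dimension_scores[name] for name in WEIGHTS)


if __name__ == "__main__":
    # Hypothetical per-dimension scores, NOT results from the benchmark.
    example = {name: 70.0 for name in WEIGHTS}
    example["text_groundedness"] = 90.0
    print(round(composite_score(example), 1))
```

Because the two groundedness dimensions carry half of the total weight, a change in either one moves the composite five times as much as the same change in any single 5% chart dimension.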

Data & Experimental Setup

- The benchmark dataset included 200 business research questions relevant to sales leaders, e.g.:

  - "Looking at closed opportunities, which sellers have the largest gap between Total Actual Sales and Est Value First Year in the 'Corporate Offices' Business Segment?"

  - "Are our sales efforts concentrated on specific industries or spread evenly across industries?"

  - "Compared to my headcount on paper (30), how many people are actually in seat and generating pipeline?"

- The dataset used a customized enterprise schema with over 1,000 tables and 200 columns per table, reflecting real-world complexity.

- The Sales Research Agent accessed the data natively through its multi-agent architecture. Claude and ChatGPT accessed the same data through an Azure SQL connector.

Results

- In the 200-question evaluation, the Sales Research Agent achieved a composite score of 78.2 on a 100-point scale.

- In comparison, Claude Sonnet 4.5 scored 65.2 and ChatGPT-5 scored 54.1.

- The Sales Research Agent outperformed the other solutions across all 8 quality dimensions, with the largest advantages in chart-related metrics.
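To put the composite scores side by side, the point margins can be worked out with a short sketch. The three scores are the ones reported above; the function name `point_margins` is hypothetical and used only for illustration.

```python
# Composite scores reported in the Results section (100-point scale).
SCORES = {
    "Sales Research Agent": 78.2,
    "Claude Sonnet 4.5": 65.2,
    "ChatGPT-5": 54.1,
}


def point_margins(scores: dict[str, float], baseline: str) -> dict[str, float]:
    """Return how many points the baseline leads each other system by."""
    lead = scores[baseline]
    return {
        name: round(lead - score, 1)
        for name, score in scores.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, margin in point_margins(SCORES, "Sales Research Agent").items():
        print(f"Lead over {name}: {margin} points")
```

On these figures the Sales Research Agent leads Claude Sonnet 4.5 by 13.0 points and ChatGPT-5 by 24.1 points.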

Interpretation

- The Sales Research Agent's multi-agent, multi-model architecture allows it to outperform other AI solutions in handling complex, customized enterprise data and delivering high-quality business insights.

- Its schema intelligence, self-correction mechanisms, and explainability features give sales leaders confidence in the trustworthiness and reliability of its outputs.

- The Sales Research Bench provides a much-needed framework for evaluating the enterprise readiness of AI research tools, going beyond generic benchmarks to assess the specific needs of sales leaders.

Limitations & Uncertainties

- The evaluation was limited to 200 questions; a larger, more diverse dataset could uncover additional strengths or weaknesses.

- The customized schema, while representative of real-world complexity, may not capture every possible edge case.

- Some quality dimensions (e.g., explainability, chart fit) are subjective, which introduces inherent uncertainty into the scoring.

What Comes Next

- Microsoft will continue using the Sales Research Bench to drive ongoing improvements to the Sales Research Agent.

- The full evaluation package will be published, allowing customers to verify the results or benchmark their own AI research solutions.

- Microsoft plans to develop similar evaluation frameworks for other business functions, setting a new standard for trust and transparency in enterprise AI.
