Story

AI-CARE: Carbon-Aware Reporting Evaluation Metric for AI Models

Key takeaway

Researchers have proposed AI-CARE, a reporting framework and metric that evaluates AI models on their energy use and carbon emissions alongside predictive performance, a growing concern as machine learning becomes more energy-intensive.

Quick Explainer

AI-CARE is a framework that evaluates machine learning models not only on their predictive performance but also on their energy consumption and carbon emissions. By reporting these metrics jointly, it lets practitioners make informed, sustainability-aware decisions when selecting models for deployment. The framework measures energy usage through analytical estimation and empirical monitoring, then derives carbon impact from the energy data and a fixed grid intensity factor. The result is a structured, deployment-relevant view of the trade-off between model accuracy and environmental cost, which conventional performance-only benchmarking does not provide.

Deep Dive

Technical Deep Dive: AI-CARE

Overview

AI-CARE is a standardized framework for carbon-aware evaluation of machine learning models. It reports predictive performance alongside energy consumption and derived carbon emissions, enabling transparent and reproducible analysis of deployment-relevant efficiency trade-offs.

Problem & Context

Existing ML benchmarks focus narrowly on predictive performance metrics like accuracy, BLEU, or mAP, while largely ignoring the environmental cost of model training and inference. This single-objective evaluation paradigm is increasingly misaligned with real-world deployment requirements, particularly in energy-constrained environments.

Without explicit reporting of energy and carbon impacts, practitioners lack the information needed to make informed, sustainability-aware model selection decisions. Current tooling primarily reports raw measurements without prescribing how such data should be normalized, compared, or interpreted alongside task performance.

Methodology

AI-CARE is designed as a reporting and analysis framework that integrates accuracy, energy, and carbon metrics in a coherent and interpretable way. Key features:

- Reporting-centric: Focuses on standardized reporting rather than model optimization or control

- Low overhead: Performs energy monitoring and carbon-emission retrieval asynchronously to avoid interfering with model execution

- Interpretable: Provides normalization, aggregation, and visualization mechanisms (e.g., carbon–accuracy tradeoff curves, scalar carbon-aware scores)
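The low-overhead, asynchronous monitoring described above can be pictured as a background thread that samples power while the model runs. The following is a minimal sketch under stated assumptions, not AI-CARE's actual implementation: `PowerSampler`, `integrate_energy`, and the `read_power` callback are hypothetical names, and a real deployment would plug a device-specific power reading into `read_power`.

```python
import threading
import time
from typing import Callable, List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, power in watts)


def integrate_energy(samples: List[Sample]) -> float:
    """Trapezoidal integration of power over time; returns joules."""
    return sum((t1 - t0) * (p0 + p1) / 2.0
               for (t0, p0), (t1, p1) in zip(samples, samples[1:]))


class PowerSampler:
    """Polls a power source on a background thread (hypothetical helper)."""

    def __init__(self, read_power: Callable[[], float], interval_s: float = 0.1):
        self._read_power = read_power      # assumed user-supplied reading function
        self._interval_s = interval_s
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self.samples: List[Sample] = []

    def _run(self) -> None:
        # Sample first, then sleep until the next tick or until stopped.
        while not self._stop.is_set():
            self.samples.append((time.monotonic(), self._read_power()))
            self._stop.wait(self._interval_s)

    def __enter__(self) -> "PowerSampler":
        self._thread.start()
        return self

    def __exit__(self, *exc) -> None:
        self._stop.set()
        self._thread.join()
```

A caller would wrap the inference loop in `with PowerSampler(read_power) as sampler:` and pass `sampler.samples` to `integrate_energy` afterwards, so the sampling never blocks model execution.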

The framework models energy consumption using FLOPs, memory accesses, and static power, supporting both analytical estimation and empirical measurement. Carbon emissions are derived from energy usage and a fixed grid intensity.
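One way to read the analytical side of this model is as a sum of a compute term, a memory term, and a static term. The sketch below assumes a simple linear form; the summary does not give the exact equation, and the coefficient values in `EnergyCoefficients` are placeholders chosen for illustration, not measured numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyCoefficients:
    """Hypothetical hardware coefficients; calibrate these per device."""
    joules_per_flop: float = 1e-11  # assumed energy per floating-point operation
    joules_per_byte: float = 1e-10  # assumed energy per byte of memory traffic
    static_watts: float = 10.0      # assumed static (idle) power draw


def estimate_energy_joules(flops: float, bytes_accessed: float, runtime_s: float,
                           coeff: EnergyCoefficients = EnergyCoefficients()) -> float:
    """Assumed linear model: compute energy + memory energy + static energy."""
    compute = flops * coeff.joules_per_flop
    memory = bytes_accessed * coeff.joules_per_byte
    static = coeff.static_watts * runtime_s
    return compute + memory + static


# Illustrative inference pass: 2 GFLOPs, 50 MB of memory traffic, 5 ms runtime.
energy_j = estimate_energy_joules(flops=2e9, bytes_accessed=50e6, runtime_s=0.005)
```

With these placeholder coefficients the static term dominates, which illustrates why runtime matters alongside FLOP counts when comparing architectures.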

Data & Experimental Setup

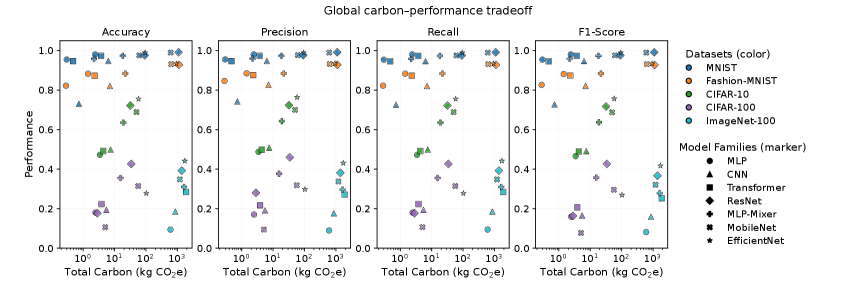

Experiments were conducted on five computer vision benchmarks: MNIST, Fashion-MNIST, CIFAR-10, CIFAR-100, and ImageNet-100. Representative architectures spanning MLPs, CNNs, transformers, and MLP-Mixers were evaluated under consistent training settings.

Inference-time carbon emissions were computed using a fixed grid carbon intensity of 400 gCO2/kWh, consistent with recent global average estimates.
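The conversion from energy to emissions is plain unit arithmetic: convert joules to kilowatt-hours, then multiply by the grid intensity. A minimal sketch follows; the function name `carbon_grams` and the per-inference energy in the example are illustrative assumptions, while the 400 gCO2/kWh factor is the value reported in the study.

```python
JOULES_PER_KWH = 3.6e6            # 1 kWh = 3.6 million joules
GRID_INTENSITY_G_PER_KWH = 400.0  # fixed grid intensity used in the study


def carbon_grams(energy_joules: float,
                 intensity_g_per_kwh: float = GRID_INTENSITY_G_PER_KWH) -> float:
    """Convert energy in joules to grams of CO2 at a fixed grid intensity."""
    return energy_joules / JOULES_PER_KWH * intensity_g_per_kwh


# Illustrative workload (assumed numbers): 0.5 J per inference, one million inferences.
total_g = carbon_grams(0.5 * 1_000_000)
```

For the assumed workload this comes to roughly 56 g of CO2; swapping in a regional intensity only requires passing a different `intensity_g_per_kwh`.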

Results

The carbon–performance tradeoff plots reveal consistent, nonlinear efficiency disparities:

- Incremental gains in predictive performance often require disproportionately higher carbon expenditure, particularly as dataset complexity increases.

- Deeper or more computationally intensive architectures cluster in high-emission regions, offering only marginal performance improvements over lightweight alternatives.

- Models with comparable accuracy can exhibit non-trivial differences in precision, recall, or F1, while differing by orders of magnitude in total carbon emissions.

The scalar carbon-aware scores complement this geometric analysis, exposing metric-dependent variations in model rankings and highlighting architectures that achieve favorable performance–emissions balance across datasets.
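To make the two views concrete, the sketch below extracts the Pareto-efficient models from a set of (accuracy, carbon) points and ranks them with a simple scalar score. Everything here is an assumption for illustration: the summary does not define AI-CARE's score, so `carbon_aware_score` uses a made-up log-scaled penalty, and the model names and numbers are invented, not results from the study.

```python
import math
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    accuracy: float  # higher is better
    carbon_g: float  # grams of CO2, lower is better


def pareto_front(results: List[Result]) -> List[Result]:
    """Keep models that no other model beats on both accuracy and carbon."""
    front = []
    for r in results:
        dominated = any(
            o.accuracy >= r.accuracy and o.carbon_g <= r.carbon_g
            and (o.accuracy > r.accuracy or o.carbon_g < r.carbon_g)
            for o in results
        )
        if not dominated:
            front.append(r)
    return sorted(front, key=lambda r: r.carbon_g)


def carbon_aware_score(r: Result, penalty: float = 0.05) -> float:
    """Hypothetical score: accuracy minus a log-scaled carbon penalty."""
    return r.accuracy - penalty * math.log10(1.0 + r.carbon_g)


# Invented example data, for illustration only.
results = [
    Result("mlp", 0.900, 0.5),
    Result("small_cnn", 0.930, 2.0),
    Result("deep_cnn", 0.935, 40.0),
    Result("vit", 0.930, 120.0),
]
front = pareto_front(results)
best = max(results, key=carbon_aware_score)
```

In this invented example the transformer is dominated by the small CNN, which matches its accuracy at a fraction of the emissions, and the small CNN also ranks first under the assumed score. A different penalty weight would shift the ranking, which is the kind of metric-dependent variation the scores are meant to expose.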

Interpretation

The combined results demonstrate that carbon-aware evaluation fundamentally reshapes model assessment compared to conventional performance-only benchmarking. Sustainability should be treated as a first-class evaluation dimension, as energy and carbon impact materially influence empirical conclusions about model quality.

By jointly reporting predictive performance, energy consumption, and carbon emissions, AI-CARE reveals efficiency trade-offs that remain obscured under accuracy-centric evaluation. The framework provides structured, deployment-relevant evidence that carbon-aware reporting is necessary to support informed, sustainability-aware model selection.

Limitations & Uncertainties

- The study uses a fixed grid carbon intensity value, which may not reflect real-time or location-specific variations.

- Only a limited set of model families and vision benchmarks was evaluated; results may differ for other domains or architectures.

- The framework does not yet integrate hardware-specific energy measurements or context-dependent carbon factors.

What Comes Next

Future work aims to:

- Enhance the framework with hardware-specific energy monitoring and dynamic grid carbon intensity models

- Expand the evaluation to broader domains beyond computer vision

- Investigate the integration of carbon-aware reporting into existing ML benchmarking and optimization workflows
