Story

AI-Assisted Decision Making with Human Learning

Key takeaway

AI systems increasingly assist human decision-making while the human still makes the final call. Experts such as doctors could make better-informed decisions when the AI chooses what information to surface with the human's learning in mind.

Quick Explainer

The core idea is to design AI algorithms that assist human decision-makers by selecting the most informative features for the human to observe, while accounting for the human's capacity to learn from these interactions over time. The algorithm must balance information that aligns with the human's current understanding against information that is more informative but harder to interpret. A key insight is that optimal algorithmic strategies have a surprisingly clean combinatorial structure, allowing for efficient computation. The analysis also reveals that as the algorithm becomes more "patient" (prioritizes long-term learning) or the human learns more efficiently, the algorithm selects more informative feature subsets to promote deeper understanding.

Deep Dive

Technical Deep Dive: AI-Assisted Decision Making with Human Learning

Overview

This work studies algorithms that assist human decision-makers in settings where the human learns from repeated interactions with the algorithm. The key contributions are:

- Identification of two fundamental tradeoffs in AI-assisted human decision-making:

  - The "informativeness vs. divergence" tradeoff in selecting informative features for the human to use

  - The "fixed vs. growth mindset" tradeoff in balancing short-term performance vs. long-term learning

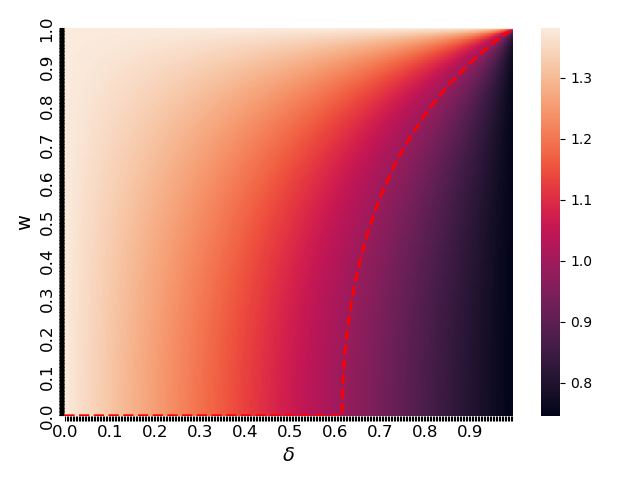

- A stylized model that reveals how the algorithm's patience (time preferences) and the human's learning efficiency influence the fixed vs. growth mindset tradeoff

- Analysis showing that optimal algorithmic strategies have a surprisingly clean combinatorial structure, allowing for efficient computation

- Insights on when the algorithm prioritizes selecting more informative feature subsets that promote human learning, versus less informative but initially less divergent subsets

Problem & Context

AI systems are increasingly used to assist human decision-makers in domains like healthcare, criminal justice, and finance. However, the final decision often remains with the human, who may have imperfect understanding of the underlying relationships.

This paper studies AI-assisted decision-making scenarios where the human decision-maker learns from experience in repeated interactions with the algorithm. The algorithm's goal is to select the most informative features for the human to use, trading off information that aligns with the human's current understanding against information that is more informative but harder for the human to interpret.
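A one-round calculation shows why the more informative feature is not always the better one to show. It is a sketch under simplifying assumptions that are not stated in this summary: features are independent with zero mean and unit variance, the human knows the intercept, and hidden features are simply left out of the prediction. Writing $a_i$ for the true coefficient of feature $i$, $h_i$ for the human's current belief about it, and $A$ for the shown subset, the expected squared error of one prediction $\hat{y}$ is

$$
\mathbb{E}\big[(y - \hat{y})^2\big] = \sum_{i \in A} (a_i - h_i)^2 + \sum_{i \notin A} a_i^2 .
$$

Showing feature $i$ therefore lowers the immediate error only when $(a_i - h_i)^2 < a_i^2$. For example, a feature with $a_i = 2$ and $h_i = -1$ has divergence $(a_i - h_i)^2 = 9 > a_i^2 = 4$, so showing it hurts in the short run even though it is highly informative. It pays off only if the human's belief improves with experience.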

Methodology

The authors model this problem as follows:

- The true outcome $y$ is a linear function of $n$ features $x = \{x_1, \dots, x_n\}$: $y = c + \sum_{i=1}^{n} a_i x_i$

- The human starts with initial beliefs $h_{i,0}$ about each feature's coefficient

- At each step, the algorithm selects a subset $A_t$ of up to $k$ features for the human to observe

- The human makes a prediction based on the observed features and their current beliefs $h_t$

- The human's beliefs are updated according to a general "φ-convergent" learning rule that satisfies natural properties like initial beliefs, improvement with experience, and asymptotic learning

- The algorithm's objective is to minimize the discounted mean squared error of the human's predictions over time
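The model above can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are not taken from the paper: features are independent with zero mean and unit variance, the human knows the intercept and leaves hidden features out of the prediction, and each exposure to a feature shrinks the belief error by a hypothetical factor `rho` (one simple rule that starts at the initial belief, improves with experience, and converges; it is not the paper's general φ-convergent class). The function name `discounted_mse` and its parameters are likewise hypothetical.

```python
import numpy as np


def discounted_mse(a, h0, subsets, delta=0.9, rho=0.5):
    """Discounted squared error of the human's predictions for a given
    sequence of shown feature subsets (a stylized sketch, see assumptions).

    a       : true coefficients a_i
    h0      : initial human beliefs h_{i,0}
    subsets : one iterable of feature indices per round (the A_t)
    delta   : discount factor in [0, 1); larger means a more patient algorithm
    rho     : assumed per-exposure shrink factor of the belief error, in [0, 1)
    """
    a = np.asarray(a, dtype=float)
    h0 = np.asarray(h0, dtype=float)
    times_shown = np.zeros(a.size, dtype=int)
    total = 0.0
    for t, subset in enumerate(subsets):
        shown = np.zeros(a.size, dtype=bool)
        shown[np.array(list(subset), dtype=int)] = True
        # Assumed learning rule: the belief moves toward a_i geometrically
        # with the number of times feature i has been shown so far.
        h_t = a + (h0 - a) * rho ** times_shown
        # With independent, zero-mean, unit-variance features, a shown feature
        # contributes (a_i - h_{i,t})^2 and a hidden feature contributes a_i^2.
        per_feature_error = np.where(shown, (a - h_t) ** 2, a ** 2)
        total += delta ** t * per_feature_error.sum()
        times_shown[shown] += 1
    return total


a = [2.0, 0.5]     # feature 0 is far more informative
h0 = [-1.0, 0.4]   # but the human's initial belief about it is far off
always_0 = discounted_mse(a, h0, [[0]] * 20, delta=0.9)
always_1 = discounted_mse(a, h0, [[1]] * 20, delta=0.9)
print(always_0, always_1)
```

With these numbers, always showing feature 0 costs more in the first round but less over twenty rounds when `delta` is large, which is the fixed vs. growth mindset tension in miniature.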

Results

The key results include:

- Characterization of optimal algorithmic strategies:

  - There exists an optimal sequence of feature subsets that is stationary (always selects the same subset)

  - An optimal stationary sequence can be computed in O(n log n) time

- Analysis of when the algorithm selects more informative feature subsets:

  - As the algorithm's "patience" (weight on future outcomes) increases, it selects more informative feature subsets

  - There exists a patience threshold above which the algorithm always selects the most informative feature subset

  - As the human learns more efficiently, the algorithm selects more informative feature subsets

- Analysis of algorithmic modeling errors:

  - Errors in estimating ground truth coefficients or human coefficients can be bounded by the maximum possible error in a per-feature "value" quantity

  - These error margins are asymmetric, with "overshoot" errors potentially having larger impact than "undershoot" errors
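The stationary, sort-based structure of these results can be illustrated with a small sketch. It is not the paper's algorithm: it assumes independent, zero-mean, unit-variance features and a hypothetical geometric learning rule in which each exposure shrinks the belief error by a factor `rho`. Under those assumptions, always showing feature i instead of never showing it changes the infinite-horizon discounted error by an additive per-feature value, and a best stationary subset takes the (up to) k features with the largest positive values, so sorting dominates the cost. The names `feature_values` and `best_stationary_subset` are made up for this example.

```python
import numpy as np


def feature_values(a, h0, delta, rho):
    """Per-feature value under the assumed geometric learning rule:
    v_i = a_i^2 / (1 - delta) - (a_i - h_{i,0})^2 / (1 - delta * rho^2).
    The first term rewards informativeness, the second penalizes divergence.
    """
    if not (0.0 <= delta < 1.0 and 0.0 <= rho < 1.0):
        raise ValueError("delta and rho must lie in [0, 1)")
    a = np.asarray(a, dtype=float)
    h0 = np.asarray(h0, dtype=float)
    informativeness = a ** 2 / (1.0 - delta)
    divergence_cost = (a - h0) ** 2 / (1.0 - delta * rho ** 2)
    return informativeness - divergence_cost


def best_stationary_subset(a, h0, k, delta, rho):
    """Indices of at most k features to show in every round (O(n log n))."""
    v = feature_values(a, h0, delta, rho)
    order = np.argsort(-v, kind="stable")  # highest value first
    # Features with non-positive value are better left hidden.
    return sorted(int(i) for i in order[:k] if v[i] > 0)


a = [2.0, 0.5]     # feature 0 is more informative
h0 = [-1.0, 0.4]   # but the human's belief about it starts far from the truth
for delta in (0.1, 0.9):
    print(delta, best_stationary_subset(a, h0, k=1, delta=delta, rho=0.5))
```

In this toy instance the impatient algorithm (`delta=0.1`) shows the well-aligned feature 1, while the patient one (`delta=0.9`) shows the more informative feature 0. Lowering `rho` (faster learning) at a fixed `delta` shifts the choice in the same direction, in line with the qualitative results listed above.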

Interpretation

The results highlight the importance of modeling human learning when designing algorithms to assist decision-makers. The two tradeoffs identified (informativeness vs. divergence, and fixed vs. growth mindset) capture fundamental tensions in this setting.

The clean combinatorial structure of optimal algorithmic strategies is a surprising finding, enabling efficient computation. The insights on when the algorithm prioritizes informativeness over alignment with current beliefs provide guidelines for algorithm design.

The analysis of modeling errors underscores the robustness of this approach: the algorithm's suboptimal choices have bounded impact, since it is the human who makes the final predictions.

Limitations & Uncertainties

- The model assumes linear relationships and independent features, which may not hold in all real-world domains

- The analysis focuses on the algorithm's strategy, not the human's strategic response to the algorithm's choices

- The paper does not consider cases where the algorithm's and human's incentives are misaligned

What Comes Next

Potential future directions include:

- Exploring alternative performance metrics and learning models beyond linear regression

- Studying settings with correlated features, or with decision instances that are correlated over time

- Investigating strategic interactions where the human recognizes the algorithm's optimization

- Extending the analysis to consider misaligned incentives between the algorithm and the human