
Revolutionizing Long-Term Memory in AI: New Horizons with High-Capacity and High-Speed Storage

Key takeaway

Researchers argue that high-capacity, high-speed storage opens new approaches to long-term memory in AI, allowing intelligent systems to learn from past experiences more efficiently and more flexibly.

Quick Explainer

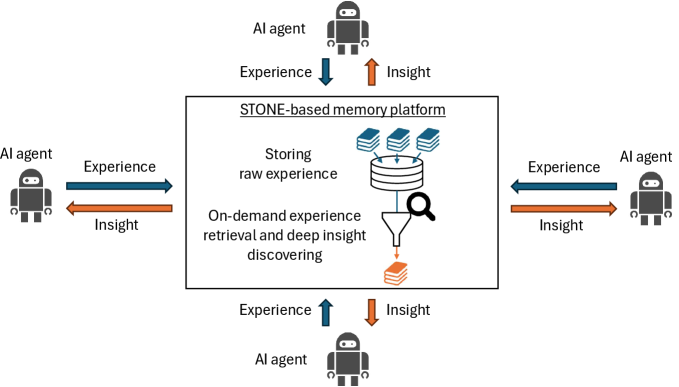

The core idea is to retain full raw experiences in memory rather than extracting and storing only selected information. This "Store Then On-demand Extract" (STONE) approach avoids information loss and enables flexible reuse of knowledge across diverse future tasks. The paper also explores "Deeper Insight Discovery": retrieving and aggregating multiple relevant experiences to uncover underlying statistical patterns that support more robust decision-making. Finally, "Experience Memory Sharing" lets AI agents collect and share experiences efficiently, reducing the trial-and-error burden on each individual agent. Together, these approaches aim to strengthen the long-term memory of AI systems, which the authors regard as critical for achieving artificial superintelligence.

Deep Dive


Overview

This technical deep-dive examines novel approaches for enhancing the long-term memory of AI systems, a capability the paper considers critical for achieving artificial superintelligence (ASI). The key ideas are:

- Store Then On-demand Extract (STONE): Retaining raw experiences in memory, rather than extracting and storing only selected information, to avoid information loss and enable flexible knowledge reuse across diverse tasks.

- Deeper Insight Discovery: Leveraging multiple related experiences to capture underlying statistical patterns, enabling more robust and reliable decision-making in stochastic environments, in contrast to simple experience replay.

- Experience Memory Sharing: Enabling efficient collection and sharing of experiences across multiple AI agents to reduce the burden of trial-and-error per agent.

The paper presents simple experiments demonstrating the advantages of these approaches over conventional methods. It also discusses key technical challenges that have hindered their broader adoption, including storage capacity, inference performance, comprehensive recall, and secure/private memory sharing.

Methodology

The core focus is on non-parametric external memory for AI agents, in contrast to the static parametric memory of large language models (LLMs). The approaches examined target inter-inference memory use, where the goal is to learn from past experiences to improve performance on future tasks, rather than solely improving understanding within a single inference.

Store Then On-demand Extract (STONE)

- In the conventional "extract-then-store" approach, only information deemed immediately useful for the current task is retained, risking the loss of potentially valuable knowledge.

- In contrast, the STONE paradigm stores the full raw experiences, deferring extraction of task-relevant information until it is needed.

- This minimizes information loss and enables more flexible, multifaceted utilization of stored knowledge across diverse future tasks.
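The contrast between the two paradigms can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: an "experience" is assumed to be a plain dict, and the extractor functions are hypothetical stand-ins for whatever task-specific extraction (for example, an LLM prompt) a real agent would run.

```python
from typing import Any, Callable, Dict, List

Experience = Dict[str, Any]
Extractor = Callable[[Experience], Any]


class ExtractThenStoreMemory:
    """Conventional approach: extract what the current task needs, discard the rest."""

    def __init__(self, extractor: Extractor) -> None:
        self.extractor = extractor  # fixed at write time
        self.records: List[Any] = []

    def write(self, experience: Experience) -> None:
        # Only the extracted view survives; the raw experience is dropped.
        self.records.append(self.extractor(experience))

    def read(self) -> List[Any]:
        return list(self.records)


class StoneMemory:
    """Store Then On-demand Extract: keep raw experiences, extract at read time."""

    def __init__(self) -> None:
        self.raw: List[Experience] = []

    def write(self, experience: Experience) -> None:
        # The full raw experience is retained, at the cost of more storage.
        self.raw.append(experience)

    def read(self, extractor: Extractor) -> List[Any]:
        # A future task supplies its own extractor only when it needs the memory.
        return [extractor(e) for e in self.raw]


# Hypothetical episode: the current task only cares about the outcome.
episode = {"task": "book flight", "outcome": "success", "price": 420, "airline": "X"}

ets = ExtractThenStoreMemory(extractor=lambda e: e["outcome"])
stone = StoneMemory()
ets.write(episode)
stone.write(episode)

print(ets.read())                          # ['success']
print(stone.read(lambda e: e["outcome"]))  # ['success']
print(stone.read(lambda e: e["price"]))    # [420]: a later task can still ask about price
```

The extract-then-store memory can never answer the price question, because that field was discarded at write time; STONE defers the decision of what matters until a task actually asks.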

Deeper Insight Discovery

- Simple experience replay methods may capture noisy or atypical past events, leading to unreliable decision-making in stochastic environments.

- Deeper insight discovery retrieves and aggregates information from multiple relevant experiences to uncover underlying statistical patterns, supporting more robust and reliable decisions.
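The difference between replaying one experience and aggregating many can be sketched as follows. This is a simplified illustration, not the paper's experimental setup: it assumes a discrete action set, scalar rewards, and episodes matched by an exact situation label, and the reward values are synthetic numbers chosen only to show an outlier effect.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# (situation, action, reward) tuples; synthetic values for illustration only.
Episode = Tuple[str, str, float]

memory: List[Episode] = [
    ("rainy", "take_bus", 1.0),
    ("rainy", "take_bus", 0.9),
    ("rainy", "take_bus", 0.8),
    ("rainy", "walk", 0.0),
    ("rainy", "walk", 0.1),
    ("rainy", "walk", 2.0),  # one lucky, atypical outcome
]


def replay_best_single(situation: str, episodes: List[Episode]) -> str:
    """Simple replay: reuse the action from the single best-scoring past episode."""
    matches = [e for e in episodes if e[0] == situation]
    return max(matches, key=lambda e: e[2])[1]


def aggregate_insight(situation: str, episodes: List[Episode]) -> Dict[str, float]:
    """Deeper insight: average reward per action over all matching episodes."""
    rewards: Dict[str, List[float]] = defaultdict(list)
    for s, action, reward in episodes:
        if s == situation:
            rewards[action].append(reward)
    return {action: mean(rs) for action, rs in rewards.items()}


def choose_by_insight(situation: str, episodes: List[Episode]) -> str:
    """Pick the action with the highest estimated expected reward."""
    estimates = aggregate_insight(situation, episodes)
    return max(estimates, key=lambda a: estimates[a])


print(replay_best_single("rainy", memory))  # walk (driven by the single outlier)
print(choose_by_insight("rainy", memory))   # take_bus
```

Replaying the single best episode chases the one lucky "walk" outcome, while averaging over all matching episodes (about 0.9 for the bus versus about 0.7 for walking) recovers the more reliable choice in a stochastic environment.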

Experience Memory Sharing

- Conventional methods rely on individual agents collecting their own experiences, limiting the diversity and volume of stored knowledge.

- Memory sharing across multiple agents enables more efficient experience collection, reducing the per-agent burden of trial-and-error.
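A minimal sketch of how a shared experience pool reduces per-agent exploration is shown below. The `SharedExperiencePool` and `Agent` classes are hypothetical illustrations assuming a single in-process store with no access control; a real system would need the privacy and security safeguards discussed under the challenges.

```python
from typing import Dict, List, Tuple

Experience = Tuple[str, str, float]  # (situation, action, reward)


class SharedExperiencePool:
    """Hypothetical in-process pool that several agents write to and read from."""

    def __init__(self) -> None:
        self._experiences: List[Experience] = []

    def contribute(self, experience: Experience) -> None:
        self._experiences.append(experience)

    def lookup(self, situation: str) -> List[Experience]:
        return [e for e in self._experiences if e[0] == situation]


class Agent:
    def __init__(self, name: str, pool: SharedExperiencePool) -> None:
        self.name = name
        self.pool = pool
        self.trials = 0  # how many actions this agent had to try itself

    def try_action(self, situation: str, action: str, reward: float) -> None:
        self.trials += 1
        self.pool.contribute((situation, action, reward))

    def best_known_action(self, situation: str) -> str:
        # Draws on every agent's experience, not just this agent's own trials.
        seen = self.pool.lookup(situation)
        return max(seen, key=lambda e: e[2])[1]


# Hypothetical reward of each action in one situation.
rewards: Dict[str, float] = {"a": 0.2, "b": 0.9, "c": 0.4, "d": 0.1}
pool = SharedExperiencePool()
agents = [Agent(f"agent{i}", pool) for i in range(2)]

# Split the exploration: each agent tries only half of the actions.
for i, action in enumerate(sorted(rewards)):
    agents[i % len(agents)].try_action("s0", action, rewards[action])

for agent in agents:
    print(agent.name, agent.trials, agent.best_known_action("s0"))
# agent0 2 b
# agent1 2 b
```

`agent0` never tried action "b" itself, yet it still selects it because `agent1`'s experience is in the pool. Without sharing, each agent would need all four trials to reach the same conclusion.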

Challenges

The paper discusses several key technical challenges that have hindered the broader adoption of these approaches:

- Storage Capacity: Storing raw experiences requires substantially more storage than storing only extracted information, so improving compression and storage density is crucial.

- Inference Performance: Extracting task-relevant information from raw experiences during inference can incur significant latency overhead. Techniques like key-value caching may help accelerate this process.

- Comprehensive Recall: Reliably retrieving all relevant experiences for deeper insight discovery is difficult with conventional nearest-neighbor search. Semantic logical search using sparse vectors may be a more suitable approach.

- Machine Learning for Deeper Insights: Accurately estimating value functions from open-ended language action spaces remains a significant challenge for enabling machine learning-based deeper insight discovery.

- Secure and Private Memory Sharing: Enabling large-scale memory sharing while addressing privacy and security concerns, such as preventing data contamination, is an important area for future work.
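The comprehensive-recall problem can be illustrated with a toy comparison. The sketch below assumes each experience is reduced to a set of active terms (a stand-in for a sparse vector) and uses term overlap as a stand-in for embedding similarity; both are simplifications, not the paper's encoder or search method, and the data are hypothetical. It shows how a fixed-k similarity search truncates results, while a boolean query over an inverted index returns every matching experience.

```python
from typing import Dict, List, Set

# Each stored experience is reduced to a sparse set of active terms (a bag of words).
# This representation is an illustrative assumption, not the paper's encoder.
experiences: Dict[int, Set[str]] = {
    0: {"rainy", "walk", "late"},
    1: {"rainy", "bus", "on_time"},
    2: {"rainy", "walk", "late", "umbrella"},
    3: {"sunny", "walk", "on_time"},
    4: {"rainy", "walk", "late", "traffic"},
    5: {"rainy", "bus", "late"},
}


def build_index(docs: Dict[int, Set[str]]) -> Dict[str, Set[int]]:
    """Inverted index: term -> ids of experiences containing it."""
    index: Dict[str, Set[int]] = {}
    for doc_id, terms in docs.items():
        for term in terms:
            index.setdefault(term, set()).add(doc_id)
    return index


def logical_search(index: Dict[str, Set[int]], required: Set[str]) -> Set[int]:
    """Return every experience containing all required terms (boolean AND)."""
    postings = [index.get(term, set()) for term in required]
    return set.intersection(*postings) if postings else set()


def top_k_search(docs: Dict[int, Set[str]], query: Set[str], k: int) -> List[int]:
    """Similarity search with a fixed k: rank by term overlap, then truncate."""
    ranked = sorted(docs, key=lambda d: len(docs[d] & query), reverse=True)
    return ranked[:k]


index = build_index(experiences)
query = {"rainy", "walk"}
print(sorted(logical_search(index, query)))   # [0, 2, 4]: all matching experiences
print(top_k_search(experiences, query, k=2))  # [0, 2]: experience 4 is cut off by k
```

Raising k would recover experience 4 here, but in general the number of relevant experiences is unknown in advance, which is why a fixed-k cut-off undermines the complete recall that deeper insight discovery depends on.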

Conclusion

This technical deep-dive highlights three promising yet underexplored directions for enhancing the long-term memory capabilities of AI systems: STONE, deeper insight discovery, and experience memory sharing. The paper demonstrates the potential benefits of these approaches through simple experiments and identifies key technical challenges that must be addressed before they can be adopted more broadly. Continued research in these areas could significantly advance the development of AI agents that continuously and adaptively improve themselves, bringing the field closer to the goal of artificial superintelligence.