Story

When Remembering and Planning are Worth it: Navigating under Change

Key takeaway

Remembering past routes and planning ahead can help animals and robots navigate uncertain environments more efficiently, a new AI study found. This insight could aid the development of smarter navigation systems for robots and self-driving cars.

Quick Explainer

The core idea is that agents navigate changing, uncertain environments more effectively when they combine memory with planning. Two components matter most: 1) maintaining a probabilistic map of the environment by continuously updating episodic memories of barrier and food locations, and 2) using this map to plan paths while retaining the ability to explore when the goal location is unknown. This hybrid approach coordinates multiple strategies and outperforms simpler navigation strategies. Its advantage grows as the environment becomes larger and more densely filled with barriers, provided the overall uncertainty from localization and environmental change remains manageable.

Deep Dive

Overview

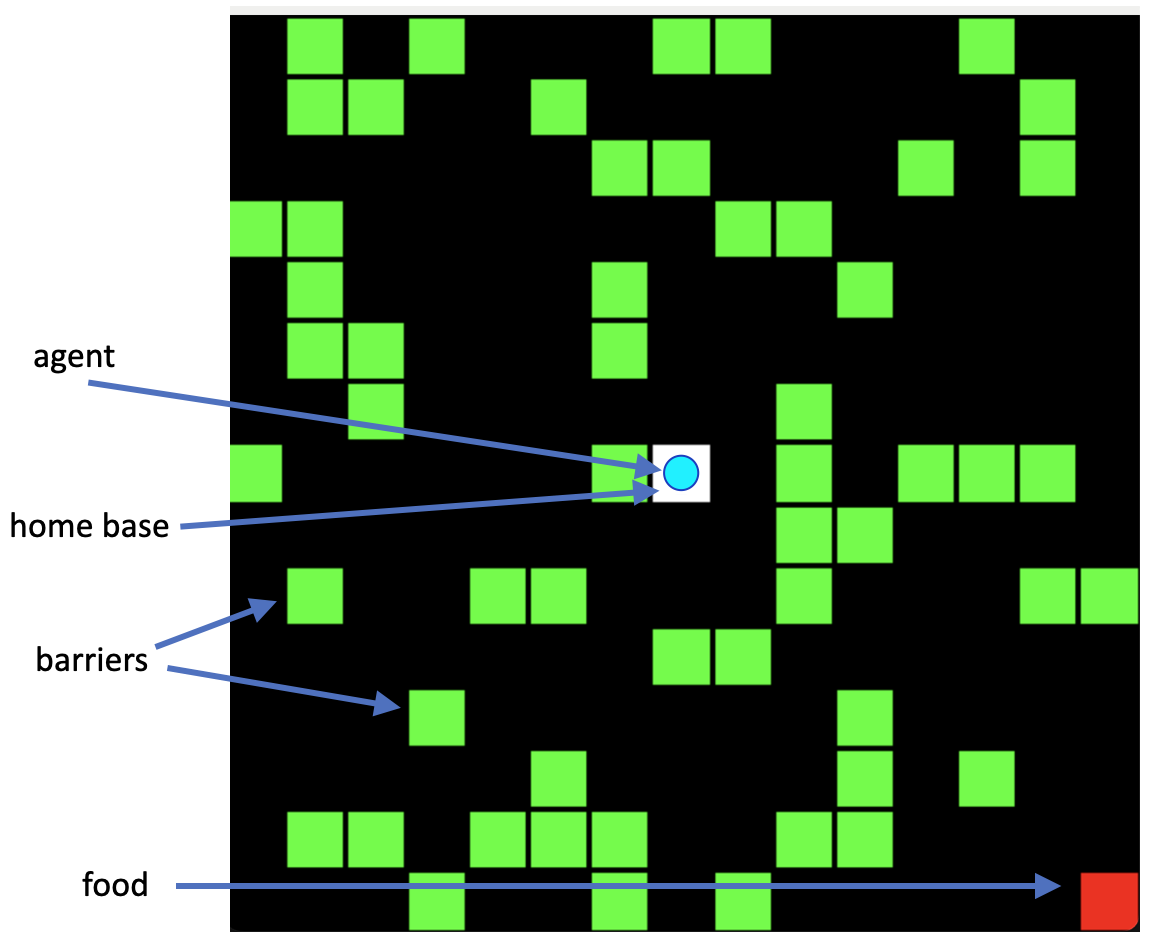

This paper explores how different types and uses of memory can aid spatial navigation in changing, uncertain environments. The authors study a simple foraging task where an agent must navigate from a home location to a food location, with barriers that can change location from day to day. The agent has limited sensing capabilities and must cope with motion noise that introduces localization uncertainty.

The authors compare a range of strategies, from simple to sophisticated, that use various memory and planning capabilities. They find that an architecture incorporating multiple strategies is required to handle the two subtasks: exploration when the food location is unknown, and path planning when it is known. Strategies that use non-stationary probability learning techniques to update episodic memories and build maps perform substantially better than simpler agents, especially as task difficulty increases (longer distances, more barriers), as long as the overall uncertainty from localization and environmental change is not too large.
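To make "non-stationary probability learning" concrete, here is a minimal sketch of one common technique: an exponential moving average that weights recent observations more heavily than old ones. This summary does not give the paper's exact update rule, so the function name `update_barrier_belief` and the `rate` parameter are illustrative assumptions, not the authors' method.

```python
def update_barrier_belief(prob, observed_barrier, rate=0.2):
    """Nudge the belief that a cell holds a barrier toward the latest observation.

    `rate` is a hypothetical learning rate: higher values forget old
    observations faster, which suits environments that change often.
    """
    target = 1.0 if observed_barrier else 0.0
    return prob + rate * (target - prob)


# One cell observed over five days: barrier present three times, then absent twice.
belief = 0.5  # uninformed starting belief (an assumption)
for seen in [True, True, True, False, False]:
    belief = update_barrier_belief(belief, seen)
```

Because the update never stops, the belief keeps tracking the environment as barriers appear and disappear, unlike a running average over all days, which reacts more and more slowly as evidence accumulates.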

Methodology

The authors study an agent navigating an NxN grid world. Each cell is in one of three states: empty, barrier, or food. The agent can execute four actions, each of which attempts a move to one of the four adjacent cells and succeeds only if that cell is not a barrier. With some probability the agent's motion is noisy, producing a different outcome than intended.

Time is divided into days, with the agent starting at a fixed home location (0,0) each day and needing to reach the food location, which may change from day to day. Barriers may also appear, disappear, or change location between days.
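The setup above can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the authors' simulator: the noise model (a random action replaces the intended one), the per-cell flip rule for barrier change, and all names and default values are hypothetical.

```python
import random

EMPTY, BARRIER, FOOD = 0, 1, 2
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def noisy_step(grid, pos, action, noise=0.1, rng=random):
    """Apply an action; with probability `noise` a random action replaces it."""
    if rng.random() < noise:
        action = rng.choice(list(MOVES))
    dr, dc = MOVES[action]
    r, c = pos[0] + dr, pos[1] + dc
    n = len(grid)
    if 0 <= r < n and 0 <= c < n and grid[r][c] != BARRIER:
        return (r, c)
    return pos  # blocked or off-grid: the agent stays in place


def evolve_barriers(grid, change_rate=0.05, rng=random):
    """Between days, flip each cell (except home and food) with probability `change_rate`."""
    n = len(grid)
    new_grid = [row[:] for row in grid]
    for r in range(n):
        for c in range(n):
            if (r, c) == (0, 0) or grid[r][c] == FOOD:
                continue
            if rng.random() < change_rate:
                new_grid[r][c] = EMPTY if grid[r][c] == BARRIER else BARRIER
    return new_grid
```

Note that this simplified change rule can wall off the food entirely and leaves the food location fixed; a fuller simulation would need to handle both.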

The authors explore a range of agent strategies:

- Random: Selects a legal move uniformly at random. Has no memory.

- Biased Random: Similar to Random but avoids reversing direction.

- Greedy: Selects the action that greedily reduces distance to the goal, if possible. Requires sensing the direction to the goal.

- Least-Visited: Keeps track of how many times each cell has been visited and prefers the least visited cell.

- Path Memory: Remembers the full path taken the previous day and attempts to replay it.

- Probabilistic Map: Maintains probabilistic predictions of barrier and food locations based on episodic memories, and uses these to plan paths.

The authors also compare to an Oracle agent that has full knowledge of the environment.
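As a rough illustration of how a Probabilistic Map agent might turn beliefs into a route, the sketch below samples a concrete barrier layout from per-cell probabilities and then plans on it. Breadth-first search is swapped in here as a simple stand-in planner; the summary does not say which planner the authors use, and the names `sample_barriers` and `plan_path` are hypothetical.

```python
import random
from collections import deque


def sample_barriers(barrier_prob, rng=random):
    """Draw one plausible barrier layout from per-cell barrier probabilities."""
    n = len(barrier_prob)
    return [[rng.random() < barrier_prob[r][c] for c in range(n)] for r in range(n)]


def plan_path(blocked, start, goal):
    """Breadth-first search on a 4-connected grid; returns a list of cells or None."""
    n = len(blocked)
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            if 0 <= nr < n and 0 <= nc < n and not blocked[nr][nc] and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable in this sampled layout
```

If the sampled layout turns out to be wrong (the agent bumps into an unexpected barrier) or no path exists, one option is to update the beliefs, resample, and replan.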

The agents' performance is evaluated by the number of steps required to reach the food location each day, averaged over multiple environments and days.

Results

The authors find that:

- Strategies that make use of memory and planning substantially outperform simpler strategies like random and greedy, especially as the task difficulty increases (larger environments, more barriers).

- This advantage holds only as long as the rate of environmental change and the motion noise are not too high.

- Continuously updating the agent's probabilistic memories is crucial: freezing the memories after some point degrades performance as the environment continues to change.

- The probabilistic map strategy, which samples barrier locations and food goals from its memory-derived predictions, performs the best overall but is the most computationally expensive.

- A hybrid approach that combines multiple strategies in a round-robin fashion, with progressive time budgets, can be an effective compromise between simplicity and performance.
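The round-robin idea with progressive time budgets from the last finding can be sketched as follows. This is one possible reading, assuming each strategy gets a fixed number of steps per turn and the budget grows after every full cycle; the function name, the doubling schedule, and the default values are hypothetical and not taken from the paper.

```python
def run_round_robin(strategies, reached_goal, initial_budget=4, growth=2, max_steps=1000):
    """Cycle through strategies, giving each `budget` steps per turn.

    `strategies` is a non-empty list of zero-argument callables that each
    take one step in the environment; `reached_goal` reports success.
    Returns the number of steps used, or None if `max_steps` runs out.
    """
    budget, used = initial_budget, 0
    while True:
        for take_step in strategies:
            for _ in range(budget):
                if reached_goal():
                    return used
                if used >= max_steps:
                    return None
                take_step()
                used += 1
        budget *= growth  # give every strategy more time on the next cycle


# Toy usage: one strategy makes no progress, the other advances a counter.
state = {"x": 0}

def stall():
    pass

def advance():
    state["x"] += 1

steps = run_round_robin([stall, advance], lambda: state["x"] >= 6, initial_budget=2)
```

Growing the budget means a strategy that needs a long uninterrupted run eventually gets one, while cheap strategies still get an early chance to succeed.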

Limitations & Uncertainties

- The agents have access to some hard-coded capabilities like path integration for localization. The authors note a goal of reducing these hard-coded assumptions in future work.

- The environments are still relatively simple and closed-world. Extending to more open-ended, realistic environments poses additional challenges.

- The authors do not explore the memory and computational costs of the different strategies in depth.

- The relative performance of the strategies may depend on specific parameter settings (grid size, barrier density, change rates, etc.) that are not fully explored.

What Comes Next

The authors suggest several promising future research directions:

- Reducing hard-coded assumptions and introducing more learning of what to remember and how to use memories.

- Enabling richer perception and spatial understanding to aid localization and navigation.

- Composing multiple skills/tasks beyond just navigation.

- Developing more sophisticated memory management policies that prioritize certain types of memories over others.

- Exploring open-world settings and handling of distributional shifts.

- Investigating how these lessons on memory-aided navigation generalize to non-Euclidean spaces and other domains.