Story

Deep Reinforcement Learning for Optimal Portfolio Allocation: A Comparative Study with Mean-Variance Optimization

Key takeaway

Researchers compared two ways of allocating investments in a portfolio - a new AI-driven method and a traditional statistical approach. The AI method may provide more optimal portfolio returns, which could help individual investors and fund managers improve their investment strat...

Quick Explainer

The core idea is to apply deep reinforcement learning (DRL) to the problem of optimal portfolio allocation, and compare its performance to the traditional mean-variance optimization (MVO) approach. The DRL approach formulates portfolio management as a Markov decision process, and uses a policy-gradient method to train an agent to optimize for risk-adjusted returns. This allows the DRL strategy to capture complex, nonlinear relationships in the data that MVO may miss. In contrast, the MVO approach estimates asset means and covariances from historical data and solves an optimization problem to obtain portfolio weights. The study finds that the DRL strategy outperforms MVO in terms of returns, risk-adjusted performance, and consistency, likely due to its ability to directly optimize the objective.

Deep Dive

Deep Reinforcement Learning for Optimal Portfolio Allocation: A Comparative Study with Mean-Variance Optimization

Overview

This paper presents a comparison between a Deep Reinforcement Learning (DRL) framework and Mean-Variance Optimization (MVO) for optimal portfolio allocation in the US equities market. The DRL approach uses a policy-gradient-based agent trained to optimize for risk-adjusted returns, while the MVO approach uses historical data to estimate asset means and covariances for portfolio optimization.

Problem & Context

- Portfolio management involves allocating funds across assets to generate uncorrelated returns while minimizing risk.

- Modern Portfolio Theory and Mean-Variance Optimization are widely used techniques, but recent advances in Machine Learning have enabled new DRL-based approaches.

- While DRL methods have shown promising results, they are often compared against simple baselines and do not consider traditional finance techniques like MVO.

Methodology

DRL Approach

- Formulate the portfolio optimization problem as a Markov Decision Process.

- Use a policy-gradient method (Proximal Policy Optimization) to train a DRL agent.

- Agent observes asset log returns and market volatility indicators, and outputs portfolio weights.

- Reward function is based on the Differential Sharpe Ratio to optimize for risk-adjusted returns.

MVO Approach

- Use a 60-day lookback period to estimate asset means and covariances.

- Apply the Ledoit-Wolf shrinkage operator to mitigate covariance estimation errors.

- Solve the Sharpe ratio maximization problem to obtain portfolio weights.

Experimental Setup

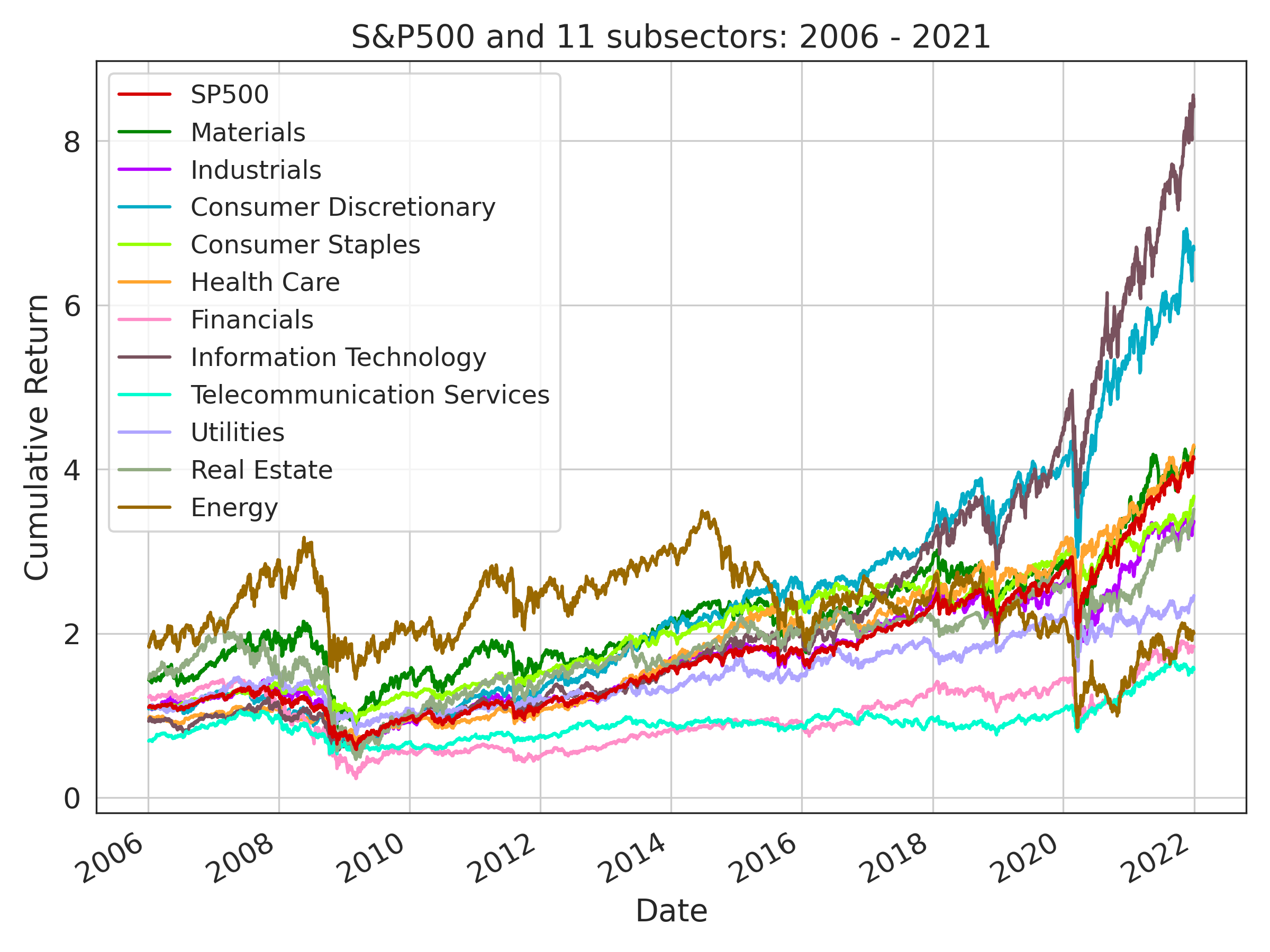

- Use daily adjusted close price data for S&P 500 sector indices, VIX, and S&P 500 from 2006-2021.

- Split data into 10 sliding window groups, with the first 5 years for training, 1 year for validation, and 1 year for testing.

- Train 5 DRL agents per group and select the best performer.

- Evaluate both DRL and MVO strategies through 10 independent backtests [2012-2021].

Results

- The DRL strategy outperforms MVO in terms of:

- Annual returns (12.11% vs. 6.53%)

- Sharpe ratio (1.17 vs. 0.68)

- Maximum drawdown (-32.96% vs. -33.03%)

- More consistent monthly and annual returns

- Lower portfolio turnover

Interpretation

- The DRL strategy's improved performance is likely due to its ability to learn complex, nonlinear relationships in the data and optimize for risk-adjusted returns.

- The DRL strategy also exhibits more stable and consistent returns compared to the MVO approach, which could translate to lower transaction costs in live deployment.

- Potential reasons for DRL's outperformance:

- DRL can capture nonlinear asset relationships that MVO may miss.

- DRL optimizes directly for risk-adjusted returns, while MVO may be suboptimal for this objective.

- DRL's policy-gradient training provides more robust generalization than MVO's point estimates.

Limitations & Uncertainties

- The study does not model transaction costs or slippage, which could impact the relative performance of the two approaches.

- The DRL framework is sensitive to hyperparameter tuning and the choice of neural network architecture.

- The comparison is limited to the US equities market and may not generalize to other asset classes or markets.

What Comes Next

- Incorporate transaction costs and slippage into the DRL environment.

- Explore adding a drawdown minimization component to the DRL reward function.

- Investigate regime-switching models that dynamically allocate between low-volatility and high-volatility DRL agents.

- Extend the comparison to international markets and other asset classes.