Story

Hybrid Federated and Split Learning for Privacy Preserving Clinical Prediction and Treatment Optimization

Key takeaway

A new machine learning technique allows hospitals to collaboratively develop medical prediction models without sharing sensitive patient data, helping improve healthcare while protecting privacy.

Quick Explainer

The proposed framework combines Federated Learning (FL) and Split Learning (SL) to enable privacy-preserving collaborative clinical decision support without centralized data pooling. In this hybrid approach, clients maintain a shared trunk model and transmit cut-layer activations to a coordinating server, which hosts the prediction heads. This partitioning of responsibilities allows clients to keep feature extraction local while still benefiting from a jointly learned model. The authors demonstrate how optional client-side adapters and activation defenses can be used to explore the privacy-utility trade-off, positioning the hybrid FL-SL framework as a practical design space for privacy-preserving healthcare decision support.

Deep Dive

Technical Deep Dive: Privacy-Preserving Hybrid Federated and Split Learning for Clinical Prediction and Treatment Optimization

Overview

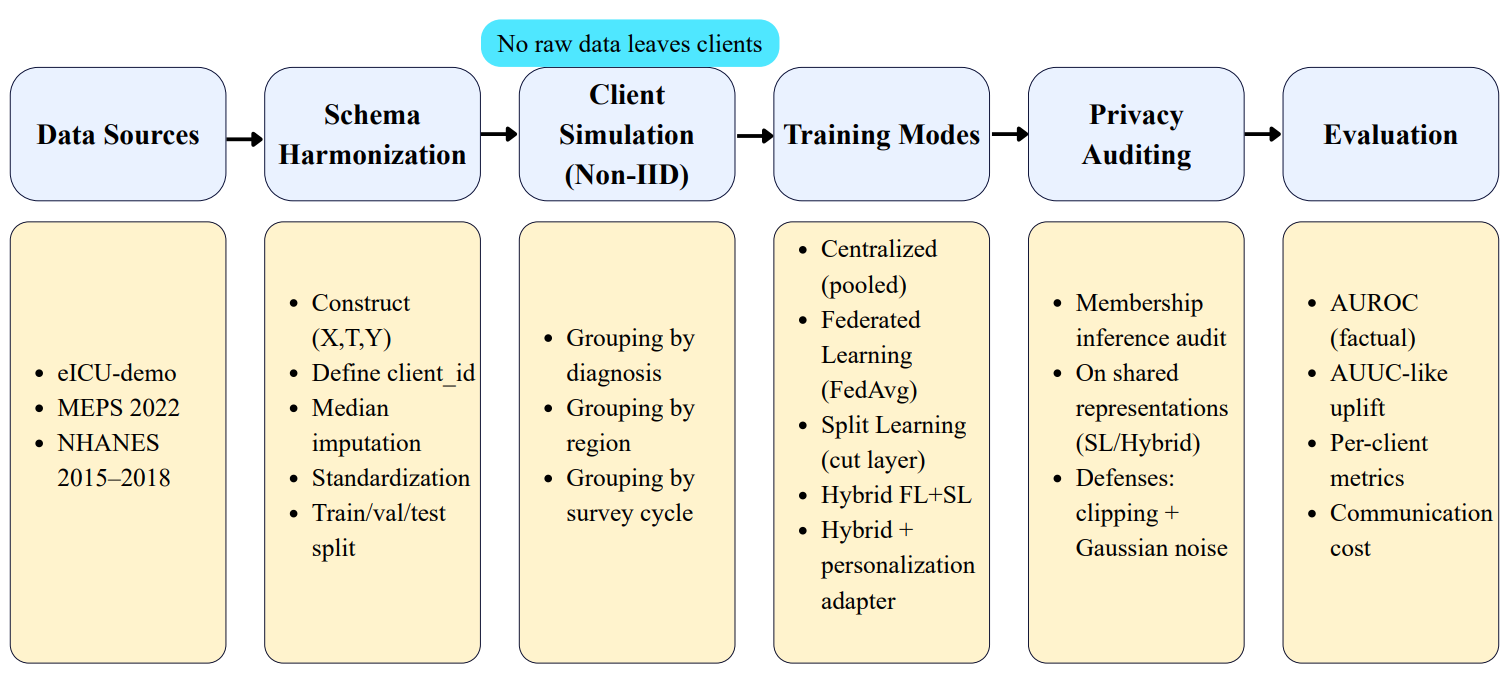

This work proposes a privacy-preserving hybrid framework that combines Federated Learning (FL) and Split Learning (SL) to enable collaborative clinical decision support without centralized pooling of patient data. The key contributions are:

- Developing a hybrid FL-SL protocol that keeps feature extraction on clients, places prediction heads on a coordinating server, and applies privacy controls at the collaboration boundary.

- Evaluating the framework across multiple public clinical datasets, assessing performance jointly on predictive utility, uplift-based treatment prioritization, audited privacy leakage, and deployment cost.

- Providing a unified view of the privacy-utility trade-off and demonstrating how lightweight defenses like activation clipping and noise can reduce audited leakage signals.

Methodology

The authors study a setting where clients (e.g., hospitals) hold patient records $(x, t, y)$, read here as covariates, a treatment or exposure indicator, and an outcome, and cannot pool data centrally due to privacy constraints. The goal is to train models for clinical decision support that deliver both predictive utility and uplift-based treatment prioritization under capacity constraints.

They compare four collaborative training modes:

- Centralized: An upper-bound reference with pooled data.

- Federated Learning (FL): Clients train local models and share parameter updates.

- Split Learning (SL): Clients compute and share activations at a cut layer, while the server hosts the prediction head.

- Hybrid FL-SL: Clients maintain a shared trunk model and transmit cut-layer activations, with server-side prediction heads.
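The hybrid mode can be illustrated with a toy round. This is a minimal sketch under stated assumptions, not the authors' protocol: the linear trunk, logistic head, sequential server updates, and the names `hybrid_round`, `trunks`, and `head_w` are all hypothetical choices made for illustration. It shows the split step (activations go up, activation gradients come back) followed by a FedAvg-style average of the client trunks.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def hybrid_round(trunks, head_w, client_data, lr=0.1):
    """One illustrative hybrid FL-SL round (linear trunk, logistic head).

    trunks: list of (d_in, d_cut) arrays, one local trunk copy per client.
    head_w: (d_cut,) server-side head weights.
    client_data: list of (x, y) pairs with x of shape (n, d_in), y in {0, 1}.
    """
    trunks = list(trunks)  # avoid mutating the caller's list
    sizes = []
    for k, (x, y) in enumerate(client_data):
        # Client: forward pass up to the cut layer; only `acts` leaves the site.
        acts = x @ trunks[k]
        # Server: prediction head on the received activations.
        p = sigmoid(acts @ head_w)
        err = (p - y) / len(y)              # gradient of mean BCE w.r.t. logits
        grad_head = acts.T @ err
        grad_acts = np.outer(err, head_w)   # returned to the client
        head_w = head_w - lr * grad_head
        # Client: finish backpropagation through the local trunk.
        trunks[k] = trunks[k] - lr * (x.T @ grad_acts)
        sizes.append(len(y))
    # FL step: size-weighted average of client trunks (FedAvg-style).
    weights = np.array(sizes) / sum(sizes)
    shared = sum(w * t for w, t in zip(weights, trunks))
    return [shared.copy() for _ in trunks], head_w
```

After the round every client holds the same trunk again, while the head never leaves the server. Raw features `x` stay on the client throughout.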

The authors also evaluate optional client-side adapters and activation defenses (clipping, noise) to explore the privacy-utility trade-off.
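As a rough illustration of what an activation defense at the cut layer could look like, the sketch below clips each activation row to a maximum L2 norm and then adds Gaussian noise. The per-row L2 clipping, the Gaussian noise model, and the names `defend_activations`, `clip_norm`, and `noise_std` are assumptions for illustration; the summary does not specify the exact form the authors use.

```python
import numpy as np

def defend_activations(acts, clip_norm=1.0, noise_std=0.1, rng=None):
    """Clip each row of `acts` to L2 norm <= clip_norm, then add Gaussian noise.

    Hypothetical defense applied on the client before activations are sent.
    """
    rng = np.random.default_rng() if rng is None else rng
    acts = np.asarray(acts, dtype=float)
    norms = np.linalg.norm(acts, axis=1, keepdims=True)
    # Rows already inside the norm ball get scale 1 and are left unchanged.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = acts * scale
    return clipped + rng.normal(0.0, noise_std, size=acts.shape)
```

Larger `noise_std` or smaller `clip_norm` would be expected to lower the audited leakage signal at some cost in utility, which is the trade-off the authors explore.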

Data & Experimental Setup

Three public clinical datasets are used, each harmonized into a common $(x, t, y)$ schema with a non-IID client partition:

- eICU-demo: Mortality prediction with early vasopressor exposure

- MEPS 2022: Inpatient utilization prediction with statin exposure

- NHANES 2015-2018: Controlled cholesterol prediction with statin exposure
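The summary does not say how the non-IID client partition is constructed. One common way to simulate label-skewed clients is a Dirichlet split, sketched below purely as an assumption; `dirichlet_partition`, `alpha`, and `n_clients` are hypothetical names, and the authors may instead partition by site or another attribute.

```python
import numpy as np

def dirichlet_partition(labels, n_clients=5, alpha=0.5, seed=0):
    """Assign sample indices to clients with Dirichlet-distributed label skew.

    Smaller alpha gives more skewed (more non-IID) client label distributions.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_idx = [[] for _ in range(n_clients)]
    for c in np.unique(labels):
        # Shuffle the indices of class c, then cut them by sampled proportions.
        idx = rng.permutation(np.flatnonzero(labels == c))
        props = rng.dirichlet(alpha * np.ones(n_clients))
        cuts = (np.cumsum(props)[:-1] * len(idx)).astype(int)
        for k, part in enumerate(np.split(idx, cuts)):
            client_idx[k].extend(part.tolist())
    return [np.array(ix, dtype=int) for ix in client_idx]
```

Every sample lands on exactly one client, so the union of the returned index arrays covers the dataset without overlap.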

The authors evaluate predictive utility (area under the ROC curve, AUROC), uplift-based treatment prioritization (area under the uplift curve, AUUC), privacy leakage (membership inference AUC), and deployment cost (communication).
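To make the uplift metric concrete, here is a minimal sketch of one common AUUC convention: rank patients by predicted uplift, and at each cutoff take the treated-minus-control difference in mean outcome among the top-ranked patients, scaled by the fraction of the population covered. Several AUUC definitions exist and the summary does not say which one the authors use, so the formula and the names `uplift_curve` and `auuc` are assumptions.

```python
import numpy as np

def uplift_curve(score, t, y):
    """Uplift at every top-k cutoff after sorting by descending score."""
    score, t, y = (np.asarray(a, dtype=float) for a in (score, t, y))
    order = np.argsort(-score, kind="stable")
    t, y = t[order], y[order]
    n = len(y)
    n_t, n_c = np.cumsum(t), np.cumsum(1 - t)
    y_t, y_c = np.cumsum(y * t), np.cumsum(y * (1 - t))
    # Mean outcome per arm within the top-k; defined as 0 while an arm is empty.
    rate_t = np.divide(y_t, n_t, out=np.zeros(n), where=n_t > 0)
    rate_c = np.divide(y_c, n_c, out=np.zeros(n), where=n_c > 0)
    k = np.arange(1, n + 1)
    return (rate_t - rate_c) * k / n

def auuc(score, t, y):
    """Area under the uplift curve, approximated by the mean over cutoffs."""
    return float(np.mean(uplift_curve(score, t, y)))
```

Under this convention the last point of the curve equals the naive population-level difference in means, so a dataset whose overall treated-minus-control difference is negative can yield a negative AUUC even when the ranking is informative.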

Results

Key findings across the datasets:

- Hybrid FL-SL variants achieve competitive predictive and decision utility compared to standalone FL or SL.

- For datasets with negative population-level uplift (eICU-demo, MEPS 2022), the hybrid approach provides consistent ranking signals under capacity constraints, outperforming random prioritization.

- Activation clipping and noise reduce the audited privacy leakage signal from cut-layer representations, demonstrating a tunable privacy-utility trade-off.

- Hybrid methods have higher communication costs than pure FL due to activation and gradient exchanges, but provide a clear collaboration boundary for governance and auditing.

- Reporting worst-client metrics highlights potential non-IID failures that can be hidden by averaging across clients.
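The last finding can be illustrated with a small sketch that reports both the mean and the worst per-client AUROC. The pairwise AUROC implementation and the names `auroc` and `client_summary` are illustrative assumptions, not the authors' evaluation code, and the sketch assumes every client has at least one positive and one negative example.

```python
import numpy as np

def auroc(y, s):
    """Probability that a random positive outscores a random negative (ties count half)."""
    y = np.asarray(y)
    s = np.asarray(s, dtype=float)
    pos, neg = s[y == 1], s[y == 0]
    diff = pos[:, None] - neg[None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))

def client_summary(clients):
    """clients: dict mapping client name to (labels, scores)."""
    per_client = {name: auroc(y, s) for name, (y, s) in clients.items()}
    values = list(per_client.values())
    return {
        "mean": float(np.mean(values)),
        "worst": float(np.min(values)),
        "worst_client": min(per_client, key=per_client.get),
    }
```

A mean that looks acceptable alongside a much lower worst-client value is the kind of non-IID failure that averaging alone would hide.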

Interpretation

- Hybrid FL-SL for Heterogeneous Decision Support: The hybrid approach is most useful when the objective prioritizes decision-facing utility (e.g., uplift-based treatment ranking) and clients are heterogeneous. It combines the parallelism of FL with the partitioning of SL, providing a clear boundary for privacy controls.

- Observational Uplift Interpretation: In datasets with negative population-level uplift under observational proxies, AUUC can be negative. The appropriate claim is consistent prioritization under a fixed protocol, not recovery of positive causal effects.

- Privacy as a Trade-off: Treating privacy as an empirical audit signal rather than a guarantee, the authors demonstrate how activation defenses can shift the privacy-utility profile to meet deployment needs.

- Deployment Cost and Robustness: Communication costs and worst-client metrics are crucial, as means can hide failures under non-IID partitions.
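A back-of-the-envelope estimate shows why the hybrid mode costs more communication than pure FL. The formulas below are a simplified assumption (dense float32 tensors, one trunk sync per round, one pass over the local data, no compression), and every name and number is hypothetical rather than taken from the paper.

```python
def fl_bytes(n_params, bytes_per_value=4):
    """Per-client, per-round cost of uploading and downloading model parameters."""
    return 2 * n_params * bytes_per_value

def sl_bytes(n_samples, d_cut, bytes_per_value=4):
    """Per-client cost of sending cut-layer activations up and gradients back down."""
    return 2 * n_samples * d_cut * bytes_per_value

def hybrid_bytes(n_trunk_params, n_samples, d_cut, bytes_per_value=4):
    """Hybrid cost: trunk synchronization plus activation and gradient exchange."""
    return (fl_bytes(n_trunk_params, bytes_per_value)
            + sl_bytes(n_samples, d_cut, bytes_per_value))
```

With a hypothetical 1,000-parameter trunk, 100 local samples, and a 16-unit cut layer, this gives 8,000 bytes for the FL-style sync and 20,800 bytes for the hybrid round. The activation term grows with the number of samples, which is why cut-layer width matters for deployment cost.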

Overall, the work positions hybrid FL-SL as a practical design space for privacy-preserving healthcare decision support, where utility, leakage risk, and deployment constraints must be balanced explicitly.

Limitations & Uncertainties

- The study uses observational proxies for treatment, so conditional average treatment effect (CATE) estimates may be biased.

- Membership inference audits provide an empirical leakage signal but do not offer formal privacy guarantees.

- The authors do not explore the full space of privacy defenses or attack strategies.

- Deployment costs and robustness are estimated, not measured in a real-world setting.
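To make concrete what a membership inference AUC measures, the sketch below implements a generic loss-threshold audit: training members tend to have lower loss than held-out records, and the AUC quantifies how well that gap separates the two groups. This is a standard illustrative attack, not necessarily the audit the authors run on cut-layer representations; `bce_loss`, `mia_auc`, and `loss_threshold_audit` are hypothetical names.

```python
import numpy as np

def bce_loss(p, y, eps=1e-12):
    """Per-example binary cross-entropy."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    y = np.asarray(y, dtype=float)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def mia_auc(member_scores, nonmember_scores):
    """AUC of an attack score; 0.5 means members and non-members are indistinguishable."""
    m = np.asarray(member_scores, dtype=float)[:, None]
    n = np.asarray(nonmember_scores, dtype=float)[None, :]
    return float(np.mean((m > n) + 0.5 * (m == n)))

def loss_threshold_audit(p_train, y_train, p_holdout, y_holdout):
    # Lower loss suggests membership, so the attack score is the negative loss.
    return mia_auc(-bce_loss(p_train, y_train), -bce_loss(p_holdout, y_holdout))
```

An AUC near 0.5 under one attack is only evidence that this particular attack fails, which is why such audits are an empirical signal rather than a formal guarantee.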

What Comes Next

Future work should:

- Validate the framework using ground-truth treatments and causal effect estimation.

- Explore a broader set of privacy defenses and leakage audits, including attacks on the shared trunk.

- Assess the framework's scalability, reliability, and integration with real-world clinical workflows.

- Investigate the impact of cut-layer placement, client-side adaptation, and other architectural controls on the privacy-utility trade-off.