A Scalable Curiosity-Driven Game-Theoretic Framework for Long-Tail Multi-Label Learning in Data Mining

Key takeaway

Researchers developed a new machine learning approach that better recognizes rare labels in multi-label classification, which could improve real-world data analysis in fields such as marketing and medical diagnosis.

Quick Explainer

The proposed framework reframes long-tail multi-label classification as a cooperative game among specialized "player" models. The players collaborate to maximize overall accuracy, and each also receives a curiosity-driven reward that encourages it to learn the rare "tail" labels. Because this intrinsic exploration reward adapts to label frequency and inter-player disagreement, the approach can handle long-tail distributions without manual tuning. The key innovations are the game-theoretic formulation and the use of curiosity as an exploration signal, which together allow the framework to outperform existing methods on both head and tail labels.

Deep Dive

Overview

The proposed Curiosity-Driven Game-Theoretic Multi-Label Learning (CD-GTMLL) framework reframes the long-tail multi-label classification (MLC) problem as a cooperative multi-player game. Multiple specialized "players" (sub-predictors) collaborate to maximize overall accuracy while receiving a curiosity reward that scales with label rarity and inter-player disagreement. This intrinsic exploration mechanism dynamically steers learning toward tail labels without manual tuning.

Problem & Context

- Real-world multi-label datasets typically exhibit a long-tail label distribution: a few "head" labels account for most annotations, while most labels are "tail" labels that are rarely observed.

- Existing methods such as resampling, reweighting, and ensemble techniques struggle to address this imbalance at scale, especially when the label space grows to tens of thousands of labels.

Methodology

Long-Tail MLC Formulation

- The label set $L$ is partitioned into head labels $L_H$ and tail labels $L_T$ according to label frequency.

- The framework decomposes the label space into N overlapping subsets assigned to cooperating "players" (sub-predictors).

- Players collaborate to maximize a shared global payoff (overall classification accuracy).
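The head/tail partition and the overlapping label-to-player assignment can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the frequency threshold `tail_threshold`, the round-robin assignment, and the `overlap` parameter are hypothetical choices for illustration, not the paper's stated procedure.

```python
from collections import Counter


def partition_labels(label_sets, tail_threshold):
    """Split labels into head (L_H) and tail (L_T) sets by training frequency.

    Assumption: a label is a tail label if it occurs in fewer than
    `tail_threshold` training examples; the paper's exact rule may differ.
    """
    freq = Counter(label for labels in label_sets for label in labels)
    head = {label for label, count in freq.items() if count >= tail_threshold}
    tail = {label for label, count in freq.items() if count < tail_threshold}
    return head, tail, freq


def assign_overlapping_subsets(labels, num_players, overlap):
    """Assign every label to `overlap` consecutive players (hypothetical round-robin).

    Returns N = num_players label subsets that overlap and jointly cover all labels.
    """
    subsets = [set() for _ in range(num_players)]
    for idx, label in enumerate(sorted(labels)):
        for k in range(overlap):
            subsets[(idx + k) % num_players].add(label)
    return subsets


# Toy training set: each example is a set of labels.
train_labels = [{"cat", "dog"}, {"cat"}, {"cat", "owl"}, {"dog"}, {"cat", "dog"}]
head, tail, freq = partition_labels(train_labels, tail_threshold=2)
subsets = assign_overlapping_subsets(head | tail, num_players=3, overlap=2)
print(sorted(head), sorted(tail))  # ['cat', 'dog'] ['owl']
print([sorted(s) for s in subsets])  # [['cat', 'owl'], ['cat', 'dog'], ['dog', 'owl']]
```

With `overlap=2`, every label is covered by exactly two players, so each player shares part of its label subset with its neighbours.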

Game-Theoretic Formulation

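- Each player $i$ maximizes $J_i(\theta_i) = R(\theta) + \alpha\, C_i(x; \theta)$, where $\theta$ collects the parameters of all players, $R$ is the shared global accuracy, $C_i$ is player $i$'s curiosity reward, and $\alpha$ is the curiosity weight.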

- The curiosity reward amplifies correct predictions on infrequent labels and leverages inter-player disagreement as an exploration signal.

- Theorem 1 proves that a Nash equilibrium exists and that it is tail-aware: under the theorem's assumptions, no equilibrium can systematically ignore tail labels.
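To make the objective concrete, the sketch below evaluates $J_i = R + \alpha C_i$ for each player on a single example. The summary does not give the exact form of $C_i$, so the one used here is a hypothetical stand-in: each label in a player's subset contributes its inverse training frequency times (correctness + disagreement), where disagreement is the population variance of the covering players' predicted probabilities. The 0.5 decision threshold and the scalar `global_reward` standing in for $R(\theta)$ are also assumptions.

```python
from statistics import pvariance


def disagreement(probs_by_player, label):
    """Variance of the predicted probabilities for `label` across the players covering it."""
    values = [probs[label] for probs in probs_by_player if label in probs]
    return pvariance(values) if len(values) > 1 else 0.0


def curiosity_reward(player, probs_by_player, targets, freq):
    """Hypothetical C_i: rarity-weighted correctness plus a disagreement bonus."""
    probs = probs_by_player[player]
    total = 0.0
    for label, p in probs.items():
        rarity = 1.0 / freq[label]  # rarer labels get a larger weight
        correct = 1.0 if (p >= 0.5) == (label in targets) else 0.0
        total += rarity * (correct + disagreement(probs_by_player, label))
    return total / len(probs)


def player_objective(player, global_reward, probs_by_player, targets, freq, alpha):
    """J_i = R + alpha * C_i, where R is the payoff shared by all players."""
    return global_reward + alpha * curiosity_reward(player, probs_by_player, targets, freq)


freq = {"cat": 4, "owl": 1}  # training frequencies: "owl" is a tail label
probs_by_player = [
    {"cat": 0.9, "owl": 0.6},  # player 0 predicts "owl" correctly
    {"cat": 0.8, "owl": 0.2},  # player 1 misses "owl"
]
targets = {"cat", "owl"}  # ground-truth labels for this example
for i in range(len(probs_by_player)):
    print(i, player_objective(i, 0.7, probs_by_player, targets, freq, alpha=0.5))
```

Both players share the same $R$, but only player 0 earns the large tail-label reward for "owl", so its objective is higher; the disagreement term still gives player 1 a small bonus on the label where the players disagree most.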

Learning Algorithm

- Players are updated sequentially via a cyclic best-response procedure: each player in turn optimizes its own objective $J_i$ while the other players' parameters are held fixed.

- The computational complexity scales linearly with the number of labels $|L|$.
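A cyclic best-response loop can be illustrated on a toy two-player game. In this sketch each player's parameter is a single scalar chosen from a small grid, the shared reward `shared_reward` is a hypothetical quadratic standing in for $R$, and only player 1 (a hypothetical "tail" player) receives a curiosity term $\alpha\,\theta_1$. Real players would be sub-predictors with many parameters, so this shows only the update order and stopping rule, not the paper's optimizer.

```python
def cyclic_best_response(objectives, theta, candidates, max_sweeps=100, tol=1e-12):
    """Cycle through players; each picks the candidate that maximizes its own J_i
    with all other players' parameters held fixed. Stops after a sweep with no change."""
    theta = list(theta)
    for sweep in range(1, max_sweeps + 1):
        changed = False
        for i, objective in enumerate(objectives):

            def value(candidate, i=i, objective=objective):
                trial = theta.copy()
                trial[i] = candidate
                return objective(trial)

            best = max(candidates, key=value)
            if value(best) > objective(theta) + tol:  # move only on strict improvement
                theta[i] = best
                changed = True
        if not changed:
            return theta, sweep
    return theta, max_sweeps


def shared_reward(theta):
    """Hypothetical shared payoff R: best when the players' contributions sum to 1."""
    return -(theta[0] + theta[1] - 1.0) ** 2


alpha = 1.0
objectives = [
    shared_reward,  # player 0: J_0 = R
    lambda theta: shared_reward(theta) + alpha * theta[1],  # player 1: J_1 = R + alpha * C_1
]
grid = [0.0, 0.25, 0.5, 0.75, 1.0]
theta, sweeps = cyclic_best_response(objectives, [0.0, 0.0], grid)
print(theta, sweeps)  # [0.0, 1.0] 4
```

Each sweep evaluates every candidate once per player, so in this sketch the cost of a sweep grows linearly with the number of players. The curiosity term pulls the equilibrium toward the player that earns it: at `[0.0, 1.0]` neither player can improve its own objective by deviating alone.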

Data & Experimental Setup

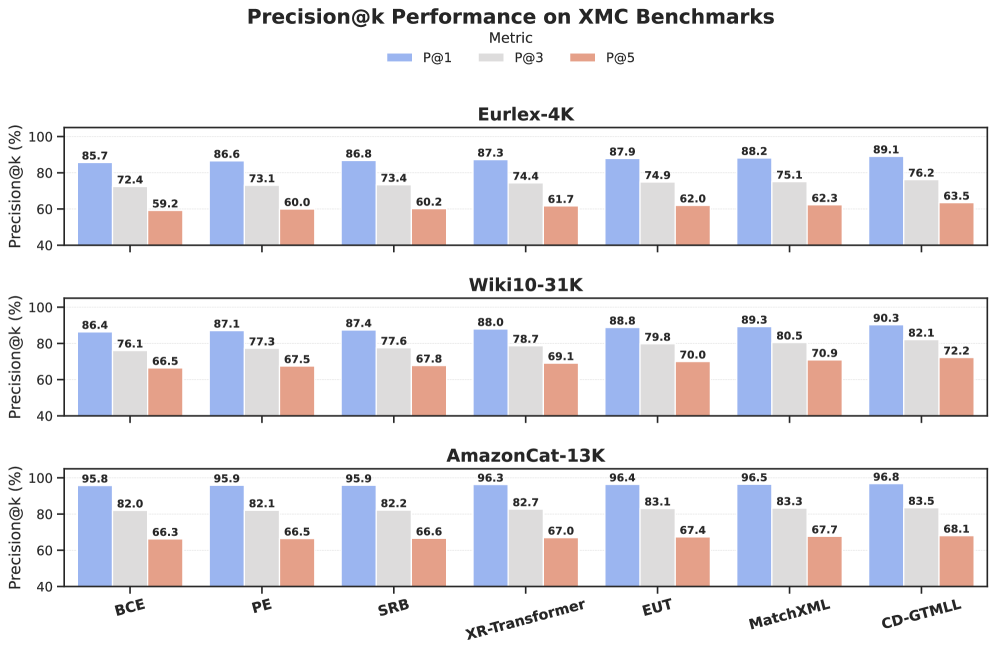

- Evaluated on standard MLC benchmarks (Pascal VOC, MS-COCO, Yeast, Mediamill) and extreme multi-label datasets (Eurlex-4K, Wiki10-31K, AmazonCat-13K).

- Compared to representative baselines covering resampling, reweighting, ensemble, and other state-of-the-art methods.

- Metrics include mAP, Micro-F1, Macro-F1, and the Rare-F1 score focused on tail labels.
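Micro-F1, Macro-F1, and a tail-only score can be computed from per-label counts. The summary does not define Rare-F1 precisely; this sketch assumes it is the Macro-F1 restricted to tail labels, which may differ from the paper's definition. mAP needs ranked scores rather than hard label-set predictions and is omitted here.

```python
def f1(tp, fp, fn):
    """F1 from counts; defined as 0 when a label never appears in truth or predictions."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def multilabel_f1(y_true, y_pred, labels, tail_labels):
    """Return (micro_f1, macro_f1, rare_f1) for label-set predictions.

    Assumption: rare_f1 is the macro-averaged F1 over `tail_labels` only.
    """
    counts = {label: [0, 0, 0] for label in labels}  # [tp, fp, fn] per label
    for truth, pred in zip(y_true, y_pred):
        for label in labels:
            if label in pred and label in truth:
                counts[label][0] += 1
            elif label in pred:
                counts[label][1] += 1
            elif label in truth:
                counts[label][2] += 1
    per_label = {label: f1(*c) for label, c in counts.items()}
    tp, fp, fn = (sum(c[k] for c in counts.values()) for k in range(3))
    micro = f1(tp, fp, fn)  # pooled counts: dominated by frequent labels
    macro = sum(per_label.values()) / len(labels)  # every label weighs equally
    rare = sum(per_label[label] for label in tail_labels) / len(tail_labels)
    return micro, macro, rare


y_true = [{"cat", "dog"}, {"cat"}, {"cat", "owl"}, {"dog"}]
y_pred = [{"cat"}, {"cat", "dog"}, {"cat", "owl"}, {"dog", "owl"}]
print(multilabel_f1(y_true, y_pred, ["cat", "dog", "owl"], ["owl"]))
```

Because Micro-F1 pools counts across labels, frequent labels dominate it, whereas Macro-F1 and the tail-only score give a rare label the same weight as a frequent one; this is why a tail-focused score is reported alongside the pooled metrics.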

Results

- CD-GTMLL consistently outperforms baselines on both head-centric and tail-centric metrics across all standard datasets.

- On Pascal VOC, it achieves 92.8% mAP and 79.4% Rare-F1, surpassing the next best by 0.9 and 1.2 percentage points, respectively.

- Under artificially intensified long-tail conditions, CD-GTMLL's Rare-F1 advantage becomes more pronounced: for example, it reaches 64.3% Rare-F1 on Pascal VOC-R@50% vs. 62.9% for the runner-up.
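The reported margins imply the runner-up scores below, assuming "next best" is measured separately for each metric (so the runner-up on mAP and the runner-up on Rare-F1 may be different baselines). The Rare-F1 gap on the intensified Pascal VOC-R@50% split is slightly larger than on standard Pascal VOC.

```latex
\begin{aligned}
\text{mAP}_{\text{runner-up}} &= 92.8 - 0.9 = 91.9\% \\
\text{Rare-F1}_{\text{runner-up}} &= 79.4 - 1.2 = 78.2\% \\
\Delta\text{Rare-F1}_{\text{VOC-R@50\%}} &= 64.3 - 62.9 = 1.4\ \text{pp} > 1.2\ \text{pp}
\end{aligned}
```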

Interpretation

- Ablation studies confirm the critical contributions of both the curiosity mechanism and the multi-player game structure.

- Analysis of the players' behavior reveals emergent specialization, with individual players becoming experts on head or tail labels.

- The training dynamics show that the period in which tail-label disagreement falls most rapidly coincides with the fastest gain in overall system potential, which the authors take as evidence of the framework's efficiency.

- The computational overhead grows linearly with the number of players, which the authors present as a worthwhile trade-off for the substantial performance gains, particularly on extreme-scale datasets.

Limitations & Uncertainties

- The theoretical analysis relies on assumptions such as continuity of the objectives, compactness of the parameter space, and tail-responsiveness of the performance metric.

- While the framework demonstrates strong empirical performance, its behavior and guarantees may differ on datasets or tasks that violate these assumptions.

- How hyperparameters such as the number of players $N$ and the curiosity weight $\alpha$ affect performance in practice requires further investigation across a broader range of applications.

What Comes Next

- Extending the framework to handle dynamic, evolving label spaces, where the tail distribution may shift over time.

- Exploring potential synergies between the game-theoretic exploration and other representation learning techniques, such as contrastive pre-training or meta-transfer learning.

- Investigating the applicability of the cooperative game formulation to other long-tail learning problems beyond multi-label classification.