Story

Is Mamba Reliable for Medical Imaging?

Key takeaway

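Researchers evaluated Mamba, a state-space model architecture increasingly used for medical image analysis. It can offer fast, efficient processing, but the study found it vulnerable to adversarial inputs, image corruptions, and hardware bit-flip faults, so its reliability under real-world conditions needs further work.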

Quick Explainer

Mamba is a state-space model architecture adapted for computer vision tasks, including medical image classification. While Mamba-based models like MedMamba can achieve strong performance on standardized medical imaging benchmarks, the study reveals significant vulnerabilities. These models are highly sensitive to input-level perturbations, such as adversarial attacks, partial occlusions, and realistic image corruptions. They also show uneven robustness to hardware-inspired "bit-flip" faults, with early feature extraction and state-space model components being the most susceptible. These findings underscore the need for improved reliability mechanisms, including adversarial-aware training and fault-tolerant architectures, before deploying Mamba-based models in critical medical imaging applications.

Deep Dive

Technical Deep Dive: Is Mamba Reliable for Medical Imaging?

Overview

This work evaluates the reliability and security of Mamba, a state-space model architecture adapted for computer vision tasks, when applied to medical image classification. The key findings are:

- Mamba-based models like MedMamba achieve strong clean accuracy on MedMNIST datasets, a collection of standardized medical imaging benchmarks.

- However, the models show substantial vulnerability to input-level perturbations, including white-box adversarial attacks (FGSM, PGD), partial occlusions (PatchDrop), and realistic image corruptions (Gaussian noise, defocus blur).

- Under hardware-inspired "bit-flip" fault attacks, the models exhibit uneven robustness, with early feature extraction and state-space model components being more sensitive to faults. Even a single high-impact bit flip can collapse performance to near-random levels.

- The results highlight the need for improved reliability mechanisms, including adversarial/corruption-aware training and fault-tolerant architectures, before deploying Mamba-based models in critical medical imaging applications.

Problem & Context

AI is transforming medical imaging, with Mamba-based models gaining traction for tasks like classification, segmentation, and reconstruction. However, the reliability and security of these models under realistic software and hardware threats remain underexplored.

This work aims to evaluate the robustness of Mamba-based medical imaging models to:

- Input-level perturbations: White-box adversarial attacks, partial occlusions, and common corruptions

- Hardware-inspired faults: Random, targeted, and worst-case bit-flip attacks emulating hardware failures

The goal is to quantify vulnerabilities and provide insights for developing more robust Mamba-based medical imaging systems.

Methodology

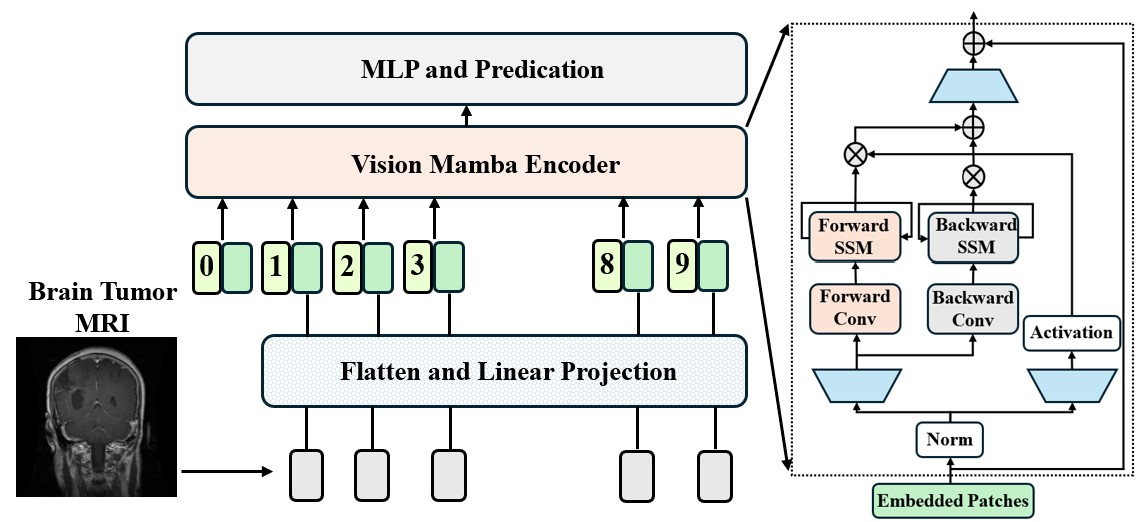

The authors adopt MedMamba as the base architecture and evaluate it on the MedMNIST dataset collection, which covers diverse medical imaging tasks and modalities.

Input-level Robustness Evaluation

- White-box Adversarial Attacks: FGSM and PGD attacks with ℓ∞ constraint

- Information Drop (PatchDrop): Random occlusion of image patches

- Information Corruption: Gaussian noise and defocus blur at varying intensity levels
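To make the ℓ∞-bounded attacks above concrete, here is a minimal sketch of FGSM and PGD in plain Python. It is not the authors' code: the toy logistic-regression model, the function names, and the values of `eps`, `alpha`, and `steps` are all assumptions chosen for illustration, and pixel values are assumed to lie in [0, 1]. FGSM takes one signed-gradient step of size `eps`; PGD takes several smaller steps and projects back into the `eps`-ball after each one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(w, b, x, y):
    """Gradient of binary cross-entropy w.r.t. the input x (toy logistic model)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(w, b, x, y, eps):
    """One step of size eps along the sign of the input gradient."""
    g = input_gradient(w, b, x, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def pgd(w, b, x, y, eps, alpha, steps):
    """Repeated signed-gradient steps, projected into the l-inf ball of radius eps."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(w, b, x_adv, y)
        x_adv = [xa + alpha * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: stay within eps of the clean input, then within [0, 1].
        x_adv = [clip(xa, xi - eps, xi + eps) for xa, xi in zip(x_adv, x)]
        x_adv = [clip(xa, 0.0, 1.0) for xa in x_adv]
    return x_adv

# Hypothetical 3-pixel "image" with true label 1.
w, b = [2.0, -3.0, 1.0], 0.1
x, y = [0.5, 0.2, 0.7], 1
x_fgsm = fgsm(w, b, x, y, eps=0.1)
x_pgd = pgd(w, b, x, y, eps=0.1, alpha=0.025, steps=10)
```

For a real network the gradient would come from automatic differentiation rather than a closed form, but the step-and-project structure stays the same.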

Hardware-level Robustness Evaluation

- Bit-Flip Threats: Random, layer-wise, and worst-case bit flips in weights and activations

- Quantify impact on classification accuracy across MedMNIST datasets
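A bit-flip fault of the kind listed above can be emulated in software by toggling one bit of a value's binary encoding. The sketch below assumes weights are stored as IEEE-754 float32, which the summary does not state; `flip_bit` and `random_bit_flips` are hypothetical helper names, and the uniform choice of weight and bit position is one possible reading of a "random" fault.

```python
import random
import struct

def flip_bit(value, bit):
    """Flip one bit of value's float32 encoding (0 = mantissa LSB, 31 = sign)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return flipped

def random_bit_flips(weights, n_flips, seed=0):
    """Return a copy of weights with n_flips uniformly random bit flips applied."""
    rng = random.Random(seed)
    faulty = list(weights)
    for _ in range(n_flips):
        i = rng.randrange(len(faulty))
        faulty[i] = flip_bit(faulty[i], rng.randrange(32))
    return faulty

# Hypothetical weight vector; in practice this would be a layer's parameters.
weights = [0.5, -0.25, 1.0, 2.0]
faulty = random_bit_flips(weights, n_flips=1)
```

Sweeping `n_flips` and re-measuring test accuracy after each injection gives an accuracy-versus-fault-budget curve of the sort the evaluation describes.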

Data & Experimental Setup

- Datasets: MedMNIST, a collection of standardized medical image classification benchmarks spanning multiple imaging modalities

- Model: MedMamba, a Mamba-based architecture adapted for medical imaging

- Training: Standard recipe with task-specific adjustments (optimizer, learning rate, regularization)

- Evaluation Metrics: Classification accuracy on held-out test sets

Results

Input-level Robustness

- White-box Attacks: MedMamba exhibits strong clean performance (e.g., 97.63% on BloodMNIST) but severe degradation under PGD, sometimes collapsing to near-random accuracy.

- PatchDrop: Performance gradually declines as more image patches are occluded, with some datasets like BloodMNIST showing sharp drops even at moderate occlusion levels.

- Corruptions: Both Gaussian noise and defocus blur degrade accuracy as corruption severity increases, confirming sensitivity to realistic distribution shifts.
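The occlusion and noise experiments above amount to sweeping a severity parameter and re-measuring accuracy. The following is a rough sketch of the two perturbations, not the study's implementation: it assumes a grayscale image stored as a list of rows with values in [0, 1], a 28×28 input, dropped patches filled with zeros, and hypothetical function names. Defocus blur is omitted for brevity.

```python
import random

def patch_drop(image, patch, drop_ratio, seed=0):
    """Zero out a random fraction of non-overlapping patch x patch blocks."""
    h, w = len(image), len(image[0])
    coords = [(r, c) for r in range(0, h, patch) for c in range(0, w, patch)]
    rng = random.Random(seed)
    dropped = rng.sample(coords, round(drop_ratio * len(coords)))
    out = [row[:] for row in image]  # copy so the clean image is untouched
    for r0, c0 in dropped:
        for r in range(r0, min(r0 + patch, h)):
            for c in range(c0, min(c0 + patch, w)):
                out[r][c] = 0.0
    return out

def gaussian_noise(image, sigma, seed=0):
    """Add zero-mean Gaussian noise with std sigma, clipped to [0, 1]."""
    rng = random.Random(seed)
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]

# Hypothetical all-white 28x28 image split into 7x7 patches (16 patches total).
img = [[1.0] * 28 for _ in range(28)]
occluded = patch_drop(img, patch=7, drop_ratio=0.25)  # drops 4 of 16 patches
noisy = gaussian_noise(img, sigma=0.1)
```

Evaluating the classifier on `patch_drop` outputs for increasing `drop_ratio`, or on `gaussian_noise` outputs for increasing `sigma`, traces out degradation curves like those summarized above.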

Hardware-level Robustness

- Random Bit Flips: Increasing the number of randomly flipped bits (1 → 16) steadily reduces accuracy across datasets.

- Layer-wise Bit Flips: Early feature extraction and state-space model components are more vulnerable than later layers.

- Worst-Case Bit Flips: A single high-impact bit flip (e.g., in the exponent) can collapse performance to near-random levels.
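Why a single exponent flip is so damaging can be seen on a toy example. The sketch below assumes float32 weights and uses an exhaustive search over every (weight, bit) pair to find the flip that changes a dot product the most; the search strategy and the names `flip_bit` and `worst_single_flip` are illustrative assumptions, not the method used in the study.

```python
import math
import struct

def flip_bit(value, bit):
    """Flip one bit of value's float32 encoding (bits 23-30 hold the exponent)."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    return struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))[0]

def worst_single_flip(weights, x):
    """Brute-force the (change, index, bit) whose flip shifts the dot product most."""
    best = (0.0, None, None)
    for i, w in enumerate(weights):
        for bit in range(32):
            faulty = flip_bit(w, bit)
            if math.isnan(faulty) or math.isinf(faulty):
                continue  # skip non-finite results to keep the comparison simple
            change = abs((faulty - w) * x[i])
            if change > best[0]:
                best = (change, i, bit)
    return best

# Hypothetical weights and input.
weights = [0.5, -0.25, 0.125]
x = [1.0, 1.0, 1.0]
change, index, bit = worst_single_flip(weights, x)
```

Here the search selects the top exponent bit (bit 30) of the weight 0.5, turning it into 2^127, while flipping the lowest mantissa bit of the same weight changes it by only 2^-24. One corrupted weight of that magnitude can dominate every activation downstream of it.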

Interpretation

The results indicate that while Mamba-based medical imaging models can achieve strong clean performance, they exhibit significant vulnerabilities to both input-level perturbations and hardware-inspired faults. Key insights:

- Adversarial attacks, partial occlusions, and common corruptions can severely degrade accuracy, highlighting the need for improved robustness.

- Hardware faults, even with a small bit-flip budget, can also significantly impair reliability, especially in early feature extraction and state-space model components.

- The extreme sensitivity to worst-case bit flips suggests that fault-aware deployment and lightweight error detection/correction mechanisms are critical.

These findings underscore the importance of comprehensive robustness evaluation and the development of more reliable Mamba-based medical imaging systems before real-world deployment.
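As one illustration of what a lightweight error-detection mechanism could look like, the sketch below stores a CRC32 checksum of a layer's weights at load time and re-checks it before inference. The study does not prescribe this scheme; the float32 storage assumption and the function names are hypothetical, and a checksum only detects corruption, it does not correct it.

```python
import struct
import zlib

def weights_checksum(weights):
    """CRC32 over the little-endian float32 encoding of a weight list."""
    return zlib.crc32(struct.pack(f"<{len(weights)}f", *weights))

def is_corrupted(weights, reference_crc):
    """True if the weights no longer match the checksum recorded at load time."""
    return weights_checksum(weights) != reference_crc

# Hypothetical layer weights and a copy with one exponent bit flipped in the first entry.
clean = [0.5, -0.25, 0.125]
reference = weights_checksum(clean)
faulty = [2.0 ** 127, -0.25, 0.125]  # 0.5 with bit 30 of its float32 encoding flipped

clean_ok = not is_corrupted(clean, reference)
fault_detected = is_corrupted(faulty, reference)
```

CRC32 is guaranteed to catch any single-bit error in the checked data, so a per-layer check of this kind would flag the single-flip faults discussed above at the cost of one pass over the weights.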

Limitations & Uncertainties

- The study is limited to the MedMNIST dataset collection and may not generalize to higher-resolution clinical datasets or other medical imaging tasks.

- The hardware fault model is simplified and may not fully capture the complexity of real-world hardware failures.

- The work does not explore defense mechanisms or provide guidance on how to improve the robustness of Mamba-based models.

What Comes Next

Future research directions include:

- Evaluating Mamba-based models on larger, higher-resolution clinical datasets and a wider range of medical imaging tasks.

- Developing adversarial/corruption-aware training techniques to improve input-level robustness.

- Exploring fault-tolerant architectures and lightweight error detection/correction schemes for hardware-level reliability.

- Investigating the interplay between input-level and hardware-level threats, and designing comprehensive defense strategies.
