Story

Retrieval Augmented (Knowledge Graph), and Large Language Model-Driven Design Structure Matrix (DSM) Generation of Cyber-Physical Systems

Key takeaway

Researchers have found that advanced language models can help generate design structures for complex cyber-physical systems more efficiently, which could streamline engineering for products like smart home devices.

Quick Explainer

This study explores the potential of advanced language models, combined with graph-based retrieval and augmentation techniques, to address the challenge of generating Design Structure Matrices (DSMs) for complex cyber-physical systems. The core approach involves leveraging large language models to extract relevant information from a diverse corpus of reference materials, ranging from expert-level technical documents to informal project resources. This knowledge is then integrated using retrieval-augmented generation and graph-based techniques to automatically construct DSMs that capture the relationships between system components. The key novelty lies in the integration of these language-model-driven methods with existing product lifecycle management workflows, enabling more efficient and data-driven architectural analysis during the design phase of cyber-physical system development.

Deep Dive

Technical Deep Dive: Retrieval Augmented (Knowledge Graph), and Large Language Model-Driven Design Structure Matrix (DSM) Generation of Cyber-Physical Systems

Overview

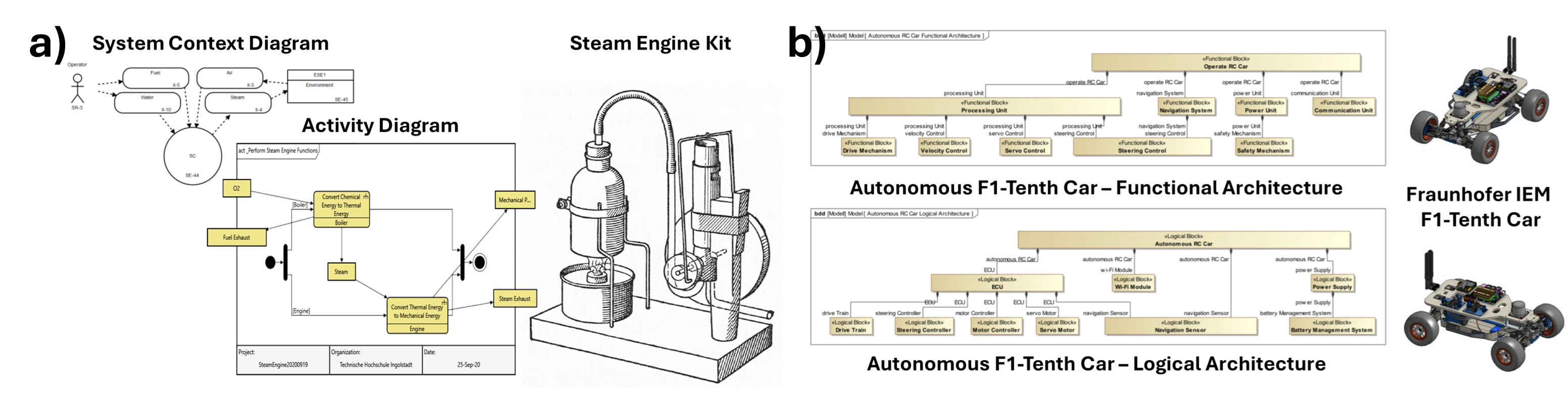

This study explores the potential of Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and Graph-based RAG (GraphRAG) for generating Design Structure Matrices (DSMs) for cyber-physical systems (CPS). The authors tested these methods on two distinct use cases, a power screwdriver and a CubeSat, and evaluated them on two tasks: (i) determining relationships between predefined components, and (ii) the harder problem of identifying the components themselves and then the relationships between them.
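For readers unfamiliar with DSMs, the sketch below shows the data structure in question: a square matrix with one row and column per component, where a 1 marks a relationship between two components. The component names and relationships are hypothetical placeholders, not the paper's ground truth, and the symmetric (undirected) encoding is an assumption made for illustration.

```python
def build_dsm(components, relations):
    """Return a binary DSM (list of lists) for the given components.

    `relations` is an iterable of (component_a, component_b) pairs.
    Assumption: relationships are undirected, so the matrix is symmetric.
    """
    index = {name: i for i, name in enumerate(components)}
    n = len(components)
    dsm = [[0] * n for _ in range(n)]
    for a, b in relations:
        i, j = index[a], index[b]
        dsm[i][j] = 1
        dsm[j][i] = 1  # mirror the entry to keep the matrix symmetric
    return dsm


# Hypothetical power-screwdriver components, for illustration only.
components = ["battery", "switch", "motor", "gearbox", "chuck"]
relations = [
    ("battery", "switch"),
    ("switch", "motor"),
    ("motor", "gearbox"),
    ("gearbox", "chuck"),
]

dsm = build_dsm(components, relations)
for name, row in zip(components, dsm):
    print(f"{name:>8} {row}")
```

In task (i) the component list is given and only the cell values must be predicted; in task (ii) the model also has to propose the rows and columns.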

Problem & Context

- Modern CPS are inherently complex, which makes them hard to represent and analyze architecturally, particularly when generating effective architectural models during the design phase.

- Existing approaches, such as graph-based methods and matrix-based Design Structure Matrices (DSMs), face practical limitations: they demand labor-intensive manual effort, lack semantic understanding, and are difficult to integrate with existing Product Lifecycle Management (PLM) workflows.

- The emergence of advanced language models and multi-modal capabilities offers promising new directions for addressing these challenges, particularly when combined with proven approaches for integrating PLM systems and maintaining traceability across the development lifecycle.

Methodology

The authors employed a three-step procedure:

- Preparation of the References and Design Configuration: Semi-automated extraction of information from academic search engines and crowdsourced project repositories to build a corpus of R1 (expert-level), R2 (formal/normative), and R3 (informal/novice) knowledge.

- xLM Preparation and Process: Utilized baseline LLMs, RAG, and GraphRAG approaches to generate DSMs for the power screwdriver and CubeSat use cases, with two scenarios: (i) determining relationships between predefined components, and (ii) identifying components and their relationships.

- Analysis and Visualization of the Results: Evaluated the performance using cell-level metrics (accuracy, precision, recall, F1-score) and global structural similarity metrics (edit distance, spectral distance).
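The cell-level metrics in the last step can be sketched as a cell-by-cell comparison of a predicted DSM against the ground-truth DSM. This is a minimal illustration using the standard definitions of accuracy, precision, recall, and F1; skipping the diagonal and treating both matrices as binary with the same component ordering are assumptions, since the summary does not state how the authors handled these details.

```python
def cell_metrics(truth, pred):
    """Compare two binary DSMs of equal size, cell by cell.

    Assumptions: same component ordering in both matrices, and diagonal
    cells (a component against itself) are ignored.
    """
    tp = fp = fn = tn = 0
    n = len(truth)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            t, p = truth[i][j], pred[i][j]
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy 3x3 matrices, made up for illustration.
truth = [[0, 1, 0],
         [1, 0, 1],
         [0, 1, 0]]
pred = [[0, 1, 1],
        [1, 0, 0],
        [1, 0, 0]]

metrics = cell_metrics(truth, pred)
print(metrics)
```

Because DSMs are often sparse, accuracy alone can look high for a model that predicts almost no relationships, which is one reason precision, recall, and F1 are reported alongside it.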

Data & Experimental Setup

- The authors used well-documented architectural structures from the literature as ground truth:

  - Power screwdriver: Detailed component-level interactions and physical connections

  - CubeSat: Standardized modular architecture with documented system-level interfaces

- They systematically evaluated five models (gpt-4-turbo-preview, gpt-4o, mixtral:8x22b, llama3.3:70b, deepseek-r1:14b) across the three approaches (LLM, RAG, GraphRAG).
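One way to picture the resulting experiment space is as a grid of configurations. The sketch below enumerates the five models and three approaches named above. It also assumes, purely for illustration, that the baseline LLM runs without references and that the two retrieval approaches are tried with every non-empty combination of the R1, R2, and R3 corpora; the summary does not confirm that this was the exact design. Model tags are written in their usual compact form.

```python
from itertools import combinations, product

MODELS = ["gpt-4-turbo-preview", "gpt-4o", "mixtral:8x22b",
          "llama3.3:70b", "deepseek-r1:14b"]
APPROACHES = ["LLM", "RAG", "GraphRAG"]
REFERENCES = ["R1", "R2", "R3"]


def reference_subsets(refs):
    """All non-empty combinations of reference corpora, e.g. 'R1-R2'."""
    return ["-".join(combo)
            for size in range(1, len(refs) + 1)
            for combo in combinations(refs, size)]


def experiment_grid():
    """Enumerate (model, approach, references) configurations.

    Assumption: the baseline LLM uses no references (None), while RAG
    and GraphRAG are paired with each reference combination.
    """
    grid = []
    for model, approach in product(MODELS, APPROACHES):
        if approach == "LLM":
            grid.append((model, approach, None))
        else:
            for refs in reference_subsets(REFERENCES):
                grid.append((model, approach, refs))
    return grid


grid = experiment_grid()
print(len(grid), "configurations per use case and task")
```

Under these assumptions each use case and task would involve 75 configurations, which gives a sense of why computational cost appears among the paper's practical considerations.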

Results

- Determining Relationships between Predefined Components (i):

  - For the spatial-reasoning task on the power screwdriver, mixtral:8x22b achieved the highest performance (F1-score of 0.849 ± 0.074).

  - For the CubeSat's whole-part relationships, llama3.3:70b showed the best performance (F1-score of 0.909 ± 0.000).

  - RAG and GraphRAG approaches improved performance in specific configurations, but combining all references did not consistently yield improvements.

- Identifying Components and Their Relationships (ii):

  - The best-performing models showed strong structural similarity to the ground truth: llama3.3:70b for the CubeSat case and mixtral:8x22b for the power screwdriver case scored well on the edit distance and spectral distance metrics.

  - Across the experiments, the alignment process (filtering with LLMs) improved overall accuracy relative to the raw model responses.

  - Baseline RAG's predictions were more accurate than those of baseline LLMs, especially for the R1-R2, R2-R3, and R1-R2-R3 reference combinations.
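To make the structural-similarity idea in task (ii) concrete, the sketch below computes a simplified edit distance between a ground-truth architecture and a predicted one: the number of components plus the number of relationships that would have to be added or removed to turn one into the other. This is an assumption-laden stand-in, since the summary does not say how the authors computed edit distance. It matches components by exact name and treats relationships as undirected. Spectral distance, which compares eigenvalues of the two graphs, is not covered here. The CubeSat subsystem names are hypothetical.

```python
def labeled_edit_distance(truth_nodes, truth_edges, pred_nodes, pred_edges):
    """Count node and edge insertions/deletions between two architectures.

    Assumptions: components are matched by exact name, and each edge is
    an undirected pair of component names.
    """
    t_edges = {frozenset(edge) for edge in truth_edges}
    p_edges = {frozenset(edge) for edge in pred_edges}
    node_ops = len(set(truth_nodes) ^ set(pred_nodes))  # symmetric difference
    edge_ops = len(t_edges ^ p_edges)
    return node_ops + edge_ops


# Hypothetical CubeSat subsystems, for illustration only.
truth_nodes = ["structure", "power", "comms", "payload"]
truth_edges = [("structure", "power"), ("structure", "comms"),
               ("structure", "payload")]

pred_nodes = ["structure", "power", "comms", "antenna"]
pred_edges = [("power", "structure"), ("structure", "comms"),
              ("comms", "antenna")]

distance = labeled_edit_distance(truth_nodes, truth_edges,
                                 pred_nodes, pred_edges)
print(distance)
```

A lower value means the predicted architecture is closer to the reference. Exact name matching is also why an alignment step matters: a model that calls a component by a synonym would otherwise be penalized twice, once for the "missing" component and once for the "extra" one.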

Interpretation

- Model architecture often proved more critical than parameter count for relationship classification tasks.

- Prompt design significantly affected the outcomes, presenting considerations for broader application across diverse system types.

- The effectiveness of RAG approaches depended on reference document selection and classification, requiring a balance between automation and domain expertise.

- Computational requirements varied across approaches, with cloud-based models offering faster processing but at a higher cost, while local implementations required substantial hardware resources.

Limitations & Uncertainties

- The methodology was tested on two distinct use cases, providing valuable insights but highlighting the need for future validation across a broader range of CPS architectures, varying documentation quality, and diverse relationship types (e.g., functional, logical flows).

- Addressing prompt dependency is imperative for broader application, potentially through compiler-like frameworks that can automate the creation of self-improving pipelines for language model calls.

- Incorporating reasoning models and exploring a wider array of LLM architectures, including those fine-tuned for engineering tasks, could yield deeper insights into the factors driving performance variations.

What Comes Next

- Improved connections with Product Lifecycle Management interfaces, particularly focusing on physical viewpoints (e.g., geometric data representations and material aspects), could enhance the integration of these automated DSM generation techniques with detailed design processes.

- Structured agentic frameworks offer significant potential for enhanced performance (e.g., error correction) by combining LLMs, augmented language models (ALMs), and their derivatives with reasoning and programmatic mechanisms to generate more accurate system architectures with multi-level granularity.