Story

Fine-Tuning LLMs to Generate Economical and Reliable Actions for the Power Grid

Key takeaway

Researchers used machine learning to help power grid operators quickly find ways to reduce power outages when the grid needs to change rapidly, potentially saving people from disruptive blackouts.

Quick Explainer

The key idea is to fine-tune a large language model to generate economical and reliable switching actions for the power grid during Public Safety Power Shutoff (PSPS) events. The approach involves a multi-stage pipeline: 1) Formulating the PSPS-aware switching problem as a DC-OPF MILP optimization to serve as the ground-truth oracle, 2) Designing a structured scenario representation and constrained action grammar to enable the language model to output verifiable switching plans, and 3) Introducing a voltage-aware preference refinement stage based on direct preference optimization (DPO) to better align the model's policy with AC voltage quality. This pipeline aims to produce verifiable, operator-facing language model assistants that interface with existing grid analysis tools, rather than replacing them.

Deep Dive

Technical Deep Dive: Fine-Tuning LLMs for Power Grid Optimization

Overview

This work presents a multi-stage adaptation pipeline that fine-tunes a large language model (LLM) to generate economical and reliable corrective switching actions for the power grid during Public Safety Power Shutoff (PSPS) events. The key contributions are:

- Formulating the PSPS-aware open-only switching problem as a DC-OPF MILP optimization, which serves as the ground-truth oracle.

- Designing a structured scenario representation and constrained action grammar to enable the LLM to output verifiable switching plans.

- Introducing a voltage-aware preference refinement stage based on direct preference optimization (DPO) to better align the LLM policy with AC voltage quality.

- Evaluating the fine-tuned LLM policies on IEEE 118-bus PSPS scenarios, showing improved economic performance, reduced AC infeasibility, and better voltage regulation compared to a zero-shot baseline and a neural network alternative.

Problem & Context

- Public Safety Power Shutoffs (PSPS) are preventive and corrective de-energization actions taken by utilities to reduce wildfire ignition risk during extreme weather conditions.

- When a PSPS event forces a subset of transmission lines out of service, system operators must determine whether additional corrective open-only switching actions can improve reliability and reduce load shedding.

- The authors formulate this as a DC-OPF MILP problem that explicitly incorporates PSPS constraints and poses an open-only decision-making problem with a limited switching budget.

Methodology

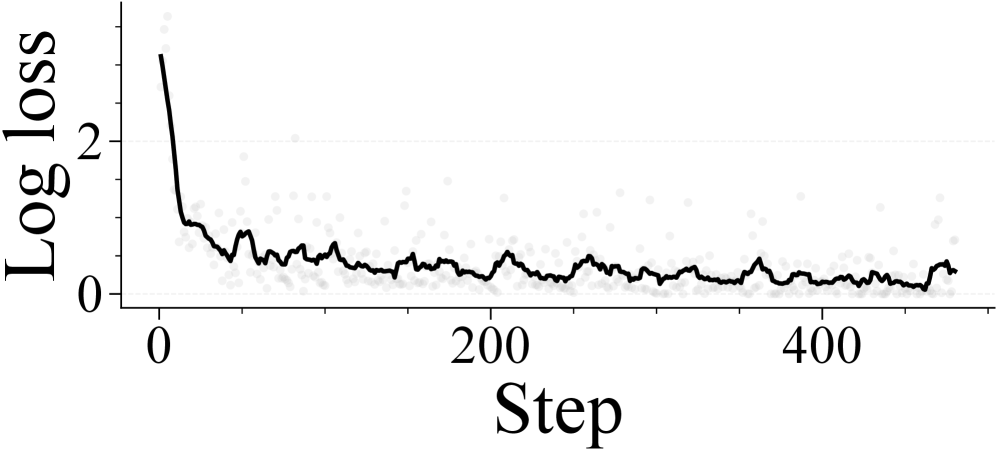

Supervised Fine-Tuning (SFT)

- The authors use the DC-OPF MILP as an offline oracle to generate labeled switching plans for supervised adaptation of an instruction-tuned LLM.

- The model is trained to map structured scenario summaries (case dimensions, PSPS-forced opens, corridor breakdown) to a constrained action grammar (open(Sk: LINE), open(LINE), do_nothing).

- The SFT objective is the standard conditional language-modeling loss over the canonical action strings.

Voltage-Aware Preference Refinement (DPO)

- To better align the policy with AC voltage quality, the authors introduce a refinement stage based on direct preference optimization (DPO).

- A voltage-penalty metric

V_penis defined to evaluate AC voltage violations, and preference pairs (y^+,y^-) are constructed by sampling candidates fromπ_SFTand ranking byV_pen. - The DPO objective encourages the refined policy

π_DPOto assign higher likelihood to preferred low-penalty plans while anchored to the SFT reference.

Best-of-N Inference

- Even after SFT and DPO, a single stochastic decode can produce a suboptimal or malformed plan.

- The authors use best-of-N inference to sample multiple candidates and select the best under a task-specific metric (DC objective

J_DCor AC voltage penaltyV_pen).

Data & Experimental Setup

- Experiments use the IEEE 118-bus test system, with 9 transmission corridors defined by grouping geographically proximate lines.

- For SFT, 200 PSPS scenarios are split between training and testing.

- For DPO, 440 preference pairs are constructed by sampling from

π_SFTand ranking byV_pen. - The authors adapt an instruction-tuned LLM,

ft: gpt-4.1-mini-2025-04-14, via the OpenAI fine-tuning API.

Results

DC Objective

- Both SFT and DPO policies shift the distribution of the DC objective

J_DCdownward compared to the zero-shot baseline, indicating improved economic performance. - The SFT and DPO medians are close, showing the voltage-aware preference refinement preserves DC performance.

- The neural network baseline achieves low median

J_DCbut exhibits wider dispersion and heavier upper tail.

AC Feasibility and Voltage Quality

- The zero-shot baseline fails AC power flow in 50% of scenarios, while SFT and DPO reduce failures to a small fraction.

- On the common-success set (scenarios where all policies converged), DPO achieves lower median voltage penalty

V_penthan SFT and the neural network baseline, indicating the preference refinement improves voltage regulation. - However, the upper tail of

V_penpersists across policies, suggesting some scenarios remain challenging for voltage control.

Interpretation

- The fine-tuned LLM policies significantly outperform the zero-shot baseline in both economic objective and AC feasibility, demonstrating the value of the multi-stage adaptation pipeline.

- The voltage-aware preference refinement further improves voltage regulation compared to pure DC imitation, though some challenging scenarios remain.

- The authors view this as a step toward verifiable, operator-facing LLM assistants that interface with existing grid analysis pipelines rather than replacing them.

Limitations & Uncertainties

- The authors note that while the pipeline is backend-agnostic, it was evaluated only on the IEEE 118-bus system and a limited set of PSPS scenarios.

- The preference pair construction uses offline AC power flow evaluation, which may not be practical in real-time deployment. More efficient online voltage assessment methods could be explored.

- The DPO refinement stage injects voltage-awareness, but the authors acknowledge the upper tail of voltage penalties persists, suggesting the need for richer preference data or alternative refinement approaches.

What Comes Next

- The authors suggest extending the pipeline to support multi-task fine-tuning, enabling a single foundation model to handle diverse power grid applications under a unified, verifiable decision framework.

- Investigating more efficient online voltage evaluation methods to enable real-time preference refinement could improve the scalability and applicability of the approach.

- Exploring alternative preference learning techniques beyond DPO, as well as incorporating a wider range of voltage-critical scenarios into the training data, may further enhance the model's ability to reliably optimize both economic and voltage-related objectives.