Story

Federated Latent Space Alignment for Multi-user Semantic Communications

Key takeaway

Researchers developed a federated method that lets AI devices with different neural network models align their internal (latent) representations, so they can exchange compressed, task-relevant information and still understand each other.

Quick Explainer

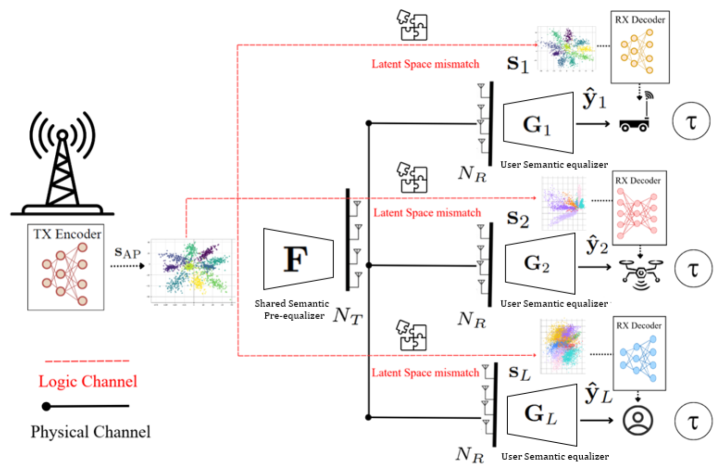

The paper proposes a federated approach for aligning the latent space representations of multiple AI-powered users that communicate with a shared access point. A shared semantic pre-equalizer is trained at the access point and personalized equalizers are trained on the user devices, which enables semantic compression and mutual understanding even when users run different neural network models. This decentralized, privacy-preserving framework targets latent space misalignment in multi-user semantic communications, a critical issue for practical AI-native applications with heterogeneous agents. The main novelty is the use of federated optimization to jointly learn the shared and personalized equalizers, supporting task-oriented communication under real-world constraints.

Deep Dive

Technical Deep Dive: Federated Latent Space Alignment for Multi-user Semantic Communications

Overview

This paper introduces a novel approach for mitigating latent space misalignment in multi-agent AI-native semantic communications. The goal is to enable effective, task-oriented communication between an access point (AP) and multiple users with heterogeneous neural network models. The proposed method implements a protocol featuring a shared semantic pre-equalizer at the AP and local semantic equalizers at user devices, facilitating mutual understanding and semantic compression while considering power and complexity constraints.

Problem & Context

- Traditional communication systems focus on accurate bit/symbol transmission, but they cannot efficiently meet the growing data demands of time-critical AI applications.

- Semantic communication represents raw data in a compressed, task-relevant form to reduce bandwidth and latency.

- AI-powered semantic communication relies on deep neural networks (DNNs) to embed raw data into low-dimensional latent representations for transmission.

- However, devices may independently encode the same information into different latent spaces, leading to semantic mismatches that impair mutual understanding between agents.

- Correcting this mismatch, a task known as semantic channel equalization, is especially relevant in multi-vendor environments where parties cannot share proprietary models or data.
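
The mismatch described above can be illustrated with a toy example. The sketch below is not taken from the paper: it assumes two hypothetical encoders (`enc_a`, `enc_b`) that are different orthogonal maps of the same content, and it uses ordinary least squares on a small set of paired samples (standing in for semantic pilots) as the alignment step. All names and sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_samples, n_pilots = 16, 500, 64

# Hypothetical setup: the same content is embedded by two independently
# "trained" encoders, modeled here as two different orthogonal linear maps.
content = rng.standard_normal((n_samples, dim))
enc_a = np.linalg.qr(rng.standard_normal((dim, dim)))[0]
enc_b = np.linalg.qr(rng.standard_normal((dim, dim)))[0]
z_a = content @ enc_a  # latents produced by device A
z_b = content @ enc_b  # latents produced by device B


def mean_cosine(u, v):
    """Average row-wise cosine similarity between two latent matrices."""
    num = np.sum(u * v, axis=1)
    den = np.linalg.norm(u, axis=1) * np.linalg.norm(v, axis=1)
    return float(np.mean(num / den))


# Without alignment, A's latents say little about B's latents.
raw_similarity = mean_cosine(z_a, z_b)

# Fit a linear map from A's space to B's space using only the paired pilots.
W, *_ = np.linalg.lstsq(z_a[:n_pilots], z_b[:n_pilots], rcond=None)

# Evaluate the learned map on samples that were not used as pilots.
aligned_similarity = mean_cosine(z_a[n_pilots:] @ W, z_b[n_pilots:])

print(f"raw: {raw_similarity:.3f}, aligned: {aligned_similarity:.3f}")
```

Real encoders are nonlinear and pilots are noisy, so a single linear map would generally recover the relationship only approximately. The point of the sketch is that both devices carry the same information but cannot read each other's latents until a mapping is learned.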

Methodology

- The paper casts the latent space alignment problem as a block-convex optimization, which is numerically solved using a federated alternating direction method of multipliers (ADMM) framework.

- This enables decentralized training of both the shared semantic pre-equalizer at the AP and the local semantic equalizers at user devices.

- A message exchange protocol is introduced to reduce communication overhead and latency, while preserving the privacy of users' latent space representations.
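
To make the federated ADMM idea more concrete, the following is a minimal sketch of generic consensus ADMM on a toy least-squares problem. It is not the paper's block-convex formulation or its message exchange protocol: the private data `A[k]`, `b[k]`, the penalty `rho`, and the iteration count are all assumptions chosen for illustration. The shared variable `z` plays a role loosely analogous to a quantity held at the AP, while each user keeps a local copy `x[k]` and a scaled dual variable `u[k]`.

```python
import numpy as np

rng = np.random.default_rng(1)
n_users, n_rows, dim, rho, n_iters = 4, 50, 5, 1.0, 200

# Private data of each user; in this sketch it never leaves the device.
A = [rng.standard_normal((n_rows, dim)) / np.sqrt(n_rows) for _ in range(n_users)]
b = [rng.standard_normal(n_rows) for _ in range(n_users)]

z = np.zeros(dim)                             # shared variable (kept at the AP)
x = [np.zeros(dim) for _ in range(n_users)]   # local copies (kept by users)
u = [np.zeros(dim) for _ in range(n_users)]   # scaled dual variables

for _ in range(n_iters):
    # Local step: each user solves a small regularized least-squares problem.
    for k in range(n_users):
        lhs = A[k].T @ A[k] + rho * np.eye(dim)
        rhs = A[k].T @ b[k] + rho * (z - u[k])
        x[k] = np.linalg.solve(lhs, rhs)
    # Aggregation step: the AP averages the messages x[k] + u[k].
    z = np.mean([x[k] + u[k] for k in range(n_users)], axis=0)
    # Dual step: each user updates its multiplier with the new consensus value.
    for k in range(n_users):
        u[k] = u[k] + x[k] - z

# Reference solution obtained by pooling all private data centrally.
z_central, *_ = np.linalg.lstsq(np.vstack(A), np.concatenate(b), rcond=None)
gap = float(np.linalg.norm(z - z_central))
print(f"distance to centralized solution: {gap:.2e}")
```

Only the vectors `x[k] + u[k]` and `z` are exchanged in this toy version, which mirrors the general idea of training without sharing raw local data. The actual problem in the paper couples a pre-equalizer with per-user equalizers, so its updates would differ from these closed-form steps.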

Data & Experimental Setup

- The evaluation considers a multi-user downlink scenario with an AP communicating with a set of AI-native users, each equipped with its own DNN model.

- Two main configurations are examined:

  - Homogeneous setup: Four users with semantically similar latent space encoders

  - Heterogeneous setup: Four users split into two groups with significantly different latent space structures
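
The downlink setting above can be sketched as a toy linear pipeline, together with one plausible way to compute a network-level MSE. This is an assumption-laden illustration rather than the paper's simulation: signals are real-valued, the pre-equalizer `F`, channels `H`, equalizers `G`, and per-user latent maps `T` are all plain matrices, and `network_mse` is a hypothetical helper that averages the per-user mean squared error.

```python
import numpy as np


def network_mse(F, channels, equalizers, targets, S, noise_std=0.0, rng=None):
    """Average over users of the MSE between equalized and target latents."""
    rng = np.random.default_rng() if rng is None else rng
    X = F @ S  # the AP applies the shared pre-equalizer before transmission
    per_user = []
    for H, G, Y in zip(channels, equalizers, targets):
        noise = noise_std * rng.standard_normal((H.shape[0], S.shape[1]))
        Y_hat = G @ (H @ X + noise)  # channel, then the user's local equalizer
        per_user.append(np.mean((Y_hat - Y) ** 2))
    return float(np.mean(per_user))


rng = np.random.default_rng(2)
dim, n_users, n_samples = 6, 4, 200

S = rng.standard_normal((dim, n_samples))  # AP latents, one column per sample
F = np.eye(dim)                            # trivial pre-equalizer for the demo
channels = [np.eye(dim) + 0.3 * rng.standard_normal((dim, dim))
            for _ in range(n_users)]
# Each user's latent space is modeled as an orthogonal map of the AP's space.
maps = [np.linalg.qr(rng.standard_normal((dim, dim)))[0] for _ in range(n_users)]
targets = [T @ S for T in maps]

# Oracle equalizers undo the channel and then map into the user's latent space.
oracle = [T @ np.linalg.inv(H) for T, H in zip(maps, channels)]
identity = [np.eye(dim) for _ in range(n_users)]

mse_oracle = network_mse(F, channels, oracle, targets, S)
mse_none = network_mse(F, channels, identity, targets, S)
print(f"oracle equalizers: {mse_oracle:.2e}, no equalization: {mse_none:.2e}")
```

In this noiseless toy case the oracle equalizers drive the error to numerical zero, while skipping equalization leaves a large error. A learned scheme would have to estimate such maps from pilots, under noise and power constraints.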

Results

- The proposed federated latent space alignment approach outperforms baseline methods that perform semantic alignment and MIMO channel equalization separately.

- In the homogeneous setup, the method achieves a significantly lower network MSE between the AP's and the users' latent spaces than in the heterogeneous setup, and its task accuracy is both higher and more stable.

- Reducing the percentage of semantic pilots (used for training) can facilitate model alignment and enhance overall task accuracy, but only down to a certain threshold.

Interpretation

- The federated ADMM-based alignment effectively addresses the challenge of diverse neural representations in multi-user semantic communications.

- The shared semantic pre-equalizer at the AP, combined with personalized equalizers on user devices, enables mutual understanding and semantic compression under practical constraints.

- Semantic similarity between user latent spaces is a key factor in alignment performance, highlighting the importance of user grouping and compatible model selection.

- There is an interesting trade-off between the number/selection of semantic pilots and overall system performance that warrants further exploration.

Limitations & Uncertainties

- The evaluation focuses on a downlink scenario and a specific image classification task, so the generalizability to other multi-user settings and applications is unclear.

- The paper does not address interference-aware alignment or dynamic user grouping based on latent space compatibility, which are identified as important future research directions.

- The impact of factors like channel conditions, power constraints, and computational complexity on the proposed approach is not extensively analyzed.

What Comes Next

- Explore optimal semantic pilot selection and data-driven semantic clustering to enhance alignment performance.

- Address interference-aware alignment and dynamic user grouping based on latent space compatibility for efficient multi-user semantic communication.

- Investigate the impact of practical system constraints (e.g., channel conditions, power limits, computational complexity) on the proposed federated alignment framework.