Story

Learning with Boolean threshold functions

Key takeaway

Researchers developed a method to train neural networks on data with Boolean (true/false) values, resulting in simpler models with weights of only +1 or -1. This could lead to more efficient AI algorithms for real-world applications.

Quick Explainer

The key innovation in this work is the use of Boolean threshold functions (BTFs) as the neuron nonlinearity, where the output is strictly ±1 rather than a continuous sigmoid. This allows the model to learn sparse, interpretable representations implementing simple logic gates. The training objective is formulated as a feasibility problem with constraints on the BTF margins and weights, rather than a loss function. This constrained optimization is solved using a reflect-reflect-relax (RRR) algorithm that performs efficient projections to the constraint sets. Compared to gradient-based methods, this approach demonstrates strong generalization on synthetic Boolean data, even with limited training data, by discovering the underlying logical structure of the target functions.

Deep Dive

Technical Deep Dive: Learning with Boolean Threshold Functions

Overview

This work explores an alternative approach to neural network optimization based on constraint satisfaction rather than loss minimization. The key innovations are:

- Using Boolean threshold functions (BTFs) as the neuron nonlinearity, where the output is strictly ±1 rather than a continuous sigmoid.

- Formulating the training objective as a feasibility problem with constraints on the BTF margins and weights, rather than a loss function.

- Solving the constrained optimization using a reflect-reflect-relax (RRR) algorithm that performs projections to the constraint sets.

This approach has several advantages over standard gradient-based methods:

- It can learn sparse, interpretable representations where the BTF weights are ±1, implementing simple logic gates.

- It demonstrates strong generalization on synthetic Boolean data, even when the training set is smaller than the information-theoretic minimum.

- It can handle discrete, non-differentiable data and constraints, unlike gradient-based methods.

Methodology

Boolean Threshold Functions (BTFs)

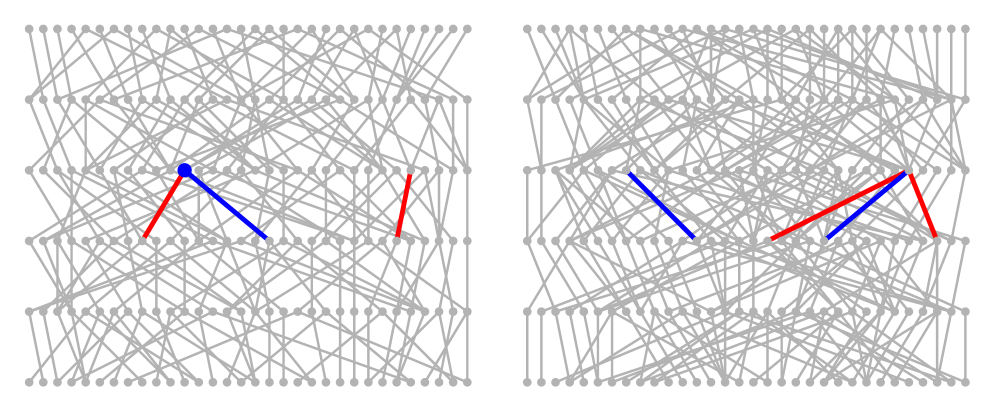

- A BTF is a function f: {-1, 1}^m → {-1, 1} that can be represented by a weight vector w ∈ ℝ^m: f_w(x) = sgn(w ⋅ x)

- BTFs have a margin constraint |w ⋅ x| ≥ μm, where μm = √(m/σ) and σ is a hyperparameter controlling the BTF sparsity.

- When σ is an odd integer, the only way to satisfy the margin constraint is for the BTF to have exactly σ nonzero, equal-magnitude weights.

Divide-and-Concur Formulation

- The training problem is cast as a feasibility problem: find z = (w, x, y) such that z ∈ A and z ∈ B

- A is the set of BTF constraints (one per neuron)

- B is the set of architectural constraints (e.g. equality of neuron outputs and inputs)

- The projections to A and B can be computed efficiently in a distributed fashion.

Reflect-Reflect-Relax (RRR) Optimization

- RRR is a constraint satisfaction algorithm that alternates projections to A and B.

- It is able to escape local infeasibility by taking steps in the direction of the "gap" between the two projections.

- RRR is able to solve the training problem without requiring small training batches or stochasticity, unlike gradient-based methods.

Data & Experimental Setup

The authors evaluate their method on several Boolean data tasks:

- Multiplier circuits: Learning to implement 2-bit and 3-bit integer multiplication from the full truth table.

- Binary autoencoding: Learning to encode and decode binary codes of varying lengths.

- MNIST 4 generation: Learning to generate the MNIST 4 digit from a 16-bit code.

- MNIST classification: Learning to classify the MNIST digits using a single-layer perceptron.

- Logic circuit learning: Learning to implement random Boolean circuits composed of simple logic gates.

- Cellular automata: Learning to implement the rule-30 cellular automaton.

For each task, the authors compare the performance of their RRR-based BTF networks to gradient-based baselines using standard multilayer perceptrons.

Results

The key results are:

- On the multiplier circuit task, the BTF networks are able to learn the full truth table with 100% accuracy given sufficient training data, while the gradient baselines struggle.

- On the binary autoencoding task, the BTF networks learn true binary codes, unlike the gradient baselines which produce non-binary codes.

- On the MNIST 4 generation task, the BTF networks learn a set of 16 "archetype" 4 digits that cover the training distribution.

- On the MNIST classification task, the BTF networks match or exceed the performance of the gradient baselines, especially when the margin constraint is tightened.

- On the logic circuit and cellular automata tasks, the BTF networks are able to learn the target functions from much smaller training sets than the gradient baselines.

Interpretation

- The BTF networks' ability to learn sparse, ±1 weight representations allows them to discover the underlying logical structure of the target functions, even when the training data is limited.

- The margin constraint and weight normalization in the BTF framework bias the networks towards simple, interpretable logic gate implementations.

- The RRR optimization is able to escape local infeasibility and find global solutions, unlike gradient-based methods which can get stuck in poor local minima.

Limitations & Uncertainties

- The authors do not provide a theoretical guarantee that RRR will converge to a solution, only empirical evidence of its effectiveness.

- The choice of hyperparameters, especially the sparsity hyperparameter σ, can significantly impact the performance and the interpretability of the learned representations.

- The method has only been demonstrated on synthetic, Boolean-structured data and its performance on more realistic, noisy data is unclear.

What Comes Next

The authors outline several directions for future work:

- Extending the BTF framework to handle weight sharing, non-uniform margins, and batched training.

- Developing distributed and parallelized implementations of the RRR algorithm.

- Creating Python/ML-friendly interfaces and model interpretability tools for BTF networks.

- Exploring the application of BTF networks to tasks like word embeddings and language modeling, where a Boolean representation may be more natural.

- Advancing the theoretical understanding of the BTF framework and the RRR algorithm, including establishing convergence guarantees.