Story

Oversmoothing, Oversquashing, Heterophily, Long-Range, and more: Demystifying Common Beliefs in Graph Machine Learning

Key takeaway

Researchers demystified common beliefs in graph machine learning, like oversmoothing and long-range connections, which could lead to more robust and interpretable AI models for real-world applications.

Quick Explainer

This technical deep dive examines common misconceptions in graph machine learning. It shows that oversmoothing is not an inevitable consequence of message passing, and that low accuracy cannot be attributed to oversmoothing alone. The paper also clarifies the relationship between homophily, heterophily, and long-range dependencies - demonstrating that heterophilic graphs can enable perfect classification, and that long-range problems stem from computational bottlenecks, not just topological ones. Additionally, it distinguishes between topological and computational bottlenecks, showing they are intertwined but not equivalent. By demystifying these concepts, the authors aim to guide more targeted research in this rapidly evolving field of graph machine learning.

Deep Dive

Technical Deep Dive: Oversmoothing, Oversquashing, Heterophily, and Long-Range Dependencies in Graph Machine Learning

Overview

This technical deep dive examines common beliefs and misunderstandings around key concepts in graph machine learning, including oversmoothing, oversquashing, homophily-heterophily, and long-range dependencies. The paper provides clear conceptual distinctions, simple counterexamples, and guidance on more precise research directions in this rapidly evolving field.

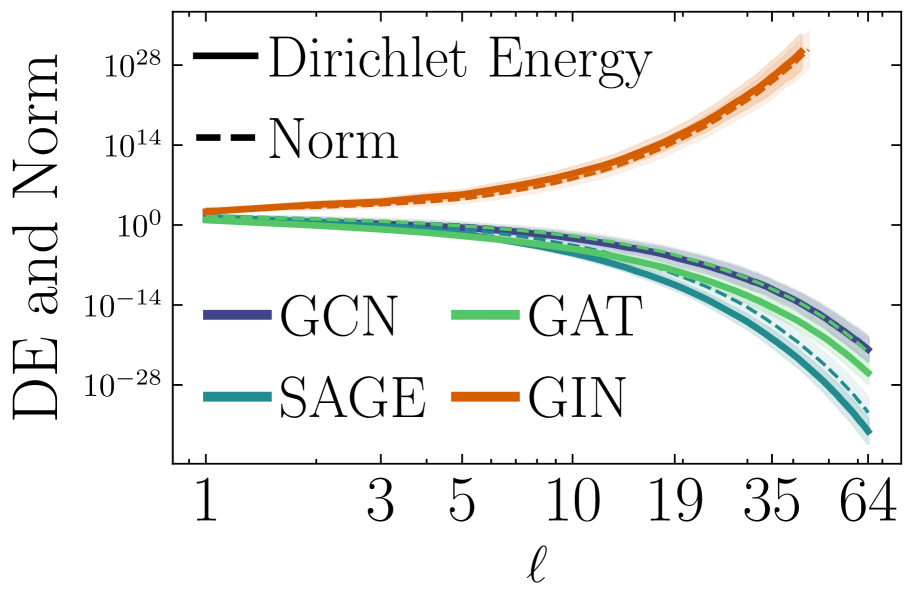

Oversmoothing (OSM)

Belief: OSM is a Property of All DGNs

- Theoretical analyses have suggested OSM is an inevitable consequence of message passing, but later work has shown this is not universally true

- OSM depends on architectural choices and training dynamics, not just the message-passing mechanism itself

- Remedies like skip connections, normalization, and gating can maintain local distinctions and prevent OSM

- OSM is neither universally observed nor straightforward to diagnose - it depends heavily on the specific architecture and metric used to measure it

Belief: OSM is the Cause of Performance Degradation

- Low accuracy in DGNs cannot be attributed to OSM alone

- The separability of node embeddings, rather than raw smoothness, is the key factor

- "Beneficial smoothing" can occur where within-class distances contract more than between-class ones, improving class separability despite global embedding collapse

Homophily, Heterophily, and Long-Range Dependencies

Belief: Homophily is Good, Heterophily is Bad

- There exist counterexamples of heterophilic graphs where DGNs can achieve perfect classification

- Homophilic graphs can also pose challenges if the task depends on long-range information that message passing fails to capture

Belief: Different Classes Imply Different Features

- This assumption ignores the role of the graph topology in the task definition

- If features are strongly predictive, a simple MLP may suffice, reducing the need for DGNs

Belief: Long-Range Propagation is Evaluated on Heterophilic Graphs

- The relationship between long-range tasks and graph heterophily is not straightforward

- Long-range problems are better understood as a form of information attenuation caused by computational bottlenecks, which can be exacerbated by but do not require topological bottlenecks

Oversquashing (OSQ)

Belief: Oversquashing as Synonym of Topological Bottleneck

- Topological bottlenecks and computational bottlenecks are distinct concepts that should be treated separately

- Low sensitivity does not necessarily imply the presence of topological bottlenecks

- Computational bottlenecks can arise independently of topological ones, and vice versa

Belief: Oversquashing as Synonym of Computational Bottleneck

- Computational and topological bottlenecks are intertwined but not equivalent

- Excessive pruning of the computational tree may harm performance on graphs with topological bottlenecks but mild computational ones

Belief: Oversquashing is Problematic for Long-Range Tasks as well

- Computational bottlenecks can arise even in short-range tasks, not just long-range ones

- Long-range tasks "force" classical message passing to create computational bottlenecks, but the two are not synonymous

Belief: Topological Bottlenecks Associated with Long-Range Problems

- Topological bottlenecks are not the sole cause of long-range problems

- Long-range issues stem from computational bottlenecks in message passing, which can be exacerbated but not necessarily caused by topological bottlenecks

Conclusions

- The rapid progress in graph machine learning has led to the consolidation of commonly accepted beliefs that are not always true

- This paper aimed to make such beliefs explicit and provide counterexamples to demystify them

- By clarifying concepts around oversmoothing, oversquashing, homophily-heterophily, and long-range dependencies, the authors hope to foster more targeted research in the field