Story

From Subtle to Significant: Prompt-Driven Self-Improving Optimization in Test-Time Graph OOD Detection

Key takeaway

Researchers developed a way to detect, at test time, when a trained graph neural network is given graphs unlike those it was trained on, without needing the original training data or labels. This helps keep graph AI systems reliable in real-world applications.

Quick Explainer

SIGOOD is a self-improving framework that uses a trained graph neural network (GNN) to detect out-of-distribution (OOD) graphs at test time. It constructs a prompt-enhanced graph that amplifies OOD signals, compares the energy variations between the original and prompt-enhanced graphs to identify OOD patterns, and iteratively optimizes the prompt generator to make OOD signals more distinguishable. This energy-based self-improving mechanism is the key novelty: it lets SIGOOD progressively strengthen its OOD detection without access to training data or labels.

Deep Dive

Technical Deep Dive: From Subtle to Significant: Prompt-Driven Self-Improving Optimization in Test-Time Graph OOD Detection

Overview

This work proposes a self-improving framework called SIGOOD for test-time graph out-of-distribution (OOD) detection. SIGOOD leverages an energy-based optimization strategy to iteratively enhance the detection of OOD signals in graph data without access to training data or labels.
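The summary does not spell out how "energy" is computed. A common choice in energy-based OOD detection is the free-energy score of a classifier's logits, E(x) = -T · log Σ_k exp(f_k(x) / T), where lower energy usually indicates in-distribution inputs. The minimal sketch below assumes that definition; SIGOOD's exact formulation may differ, and `energy_score` and `temperature` are illustrative names.

```python
import math


def energy_score(logits, temperature=1.0):
    """Free energy E = -T * logsumexp(logits / T); lower energy suggests in-distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating for numerical stability
    return -temperature * (m + math.log(sum(math.exp(z - m) for z in scaled)))


# A confident prediction yields lower (more negative) energy than a flat one.
confident = energy_score([8.0, 0.5, 0.1])
flat = energy_score([1.0, 1.0, 1.0])
print(confident, flat)
```

Because the score depends only on the logits, it can be computed from a frozen model at test time, which is what makes it usable without training data or labels.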

Problem & Context

Graph neural networks (GNNs) have become a powerful paradigm for graph representation learning. However, when deployed in open-world scenarios, GNNs often encounter OOD graphs, leading to unreliable predictions. Detecting such OOD graphs is crucial for ensuring the reliability of GNNs.

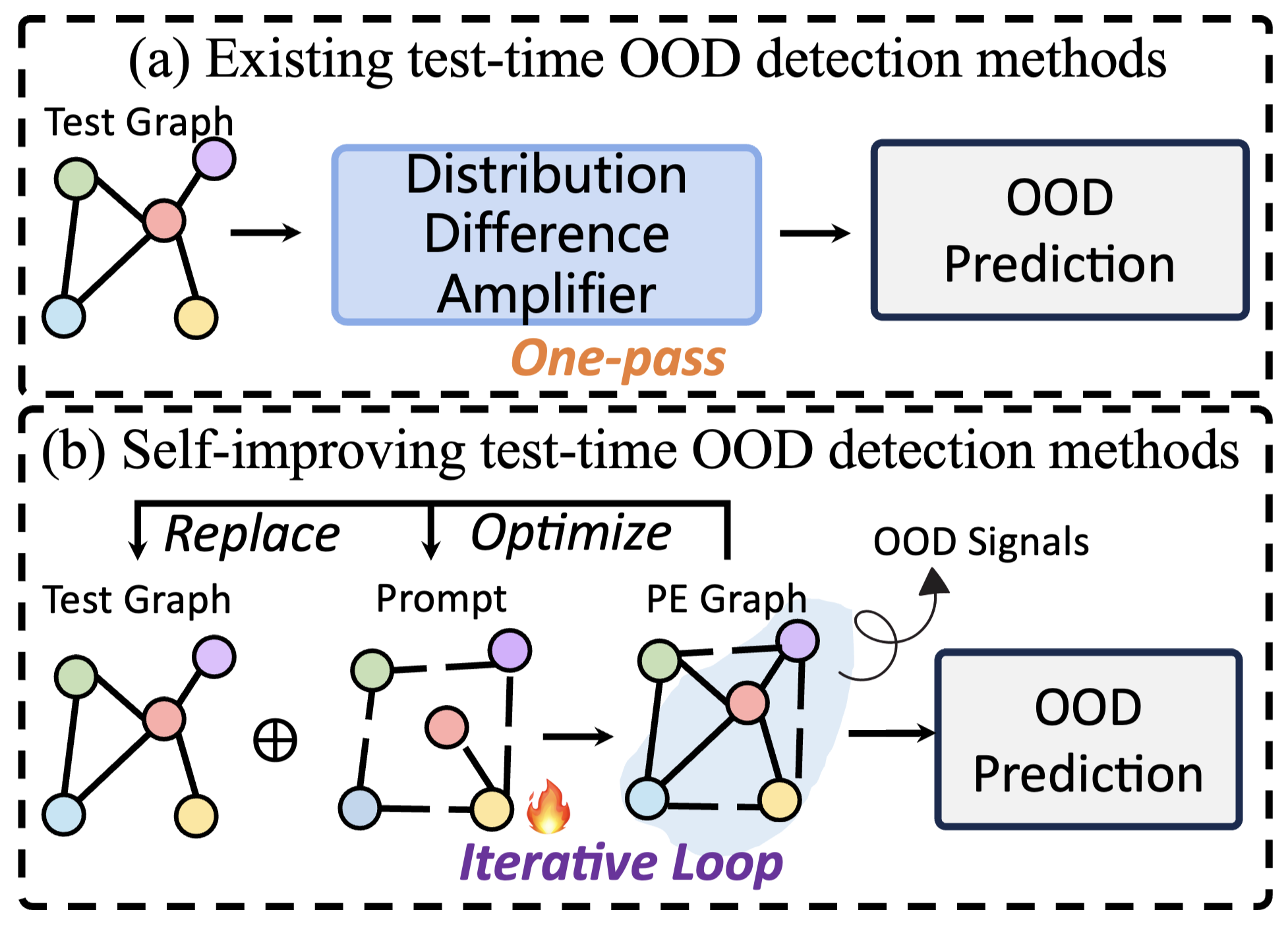

Recent work has focused on test-time graph OOD detection, which aims to identify distribution shifts using only the trained GNN and the test graphs. However, these methods are limited in their ability to amplify the often subtle difference between in-distribution (ID) and OOD signals.

Methodology

SIGOOD addresses this by introducing a self-improving optimization framework:

- Prompt Generation: A lightweight prompt generator is used to construct a prompt-enhanced graph that amplifies potential OOD signals.

- OOD Signal Recognition: SIGOOD analyzes the node-wise energy variations between the original test graph and the prompt-enhanced graph to identify OOD signals.

- Feedback Optimization: An energy preference optimization (EPO) loss is introduced to guide the prompt generator towards enhancing the distinguishability of OOD signals.

- Iterative Refinement: The refined prompt-enhanced graph is used as the new input for the next iteration, forming a self-improving loop that progressively strengthens the OOD detection performance.
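The four steps above can be illustrated with a toy, plain-Python sketch. It is not the authors' implementation and rests on several labeled assumptions: the "frozen GNN" is a single mean-aggregation layer with a made-up linear head; the prompt is assumed to be one learnable vector added to every node's features; node energy is the free-energy score with T = 1; and `preference_loss` is a hypothetical pairwise stand-in for the EPO loss, whose exact form is not given here. The prompt is fitted by finite-difference gradient descent while the GNN weights stay fixed, and each round's prompt-enhanced features become the next round's input. All names and data (`ADJ`, `X`, `W`, `node_energies`, `self_improving_scores`, and so on) are illustrative.

```python
import math

# Hypothetical toy inputs: a 4-node path graph whose last node has unusual features.
ADJ = [[1], [0, 2], [1, 3], [2]]
X = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.2], [-2.0, 3.0]]
W = [[1.0, -0.5], [-0.3, 0.8], [0.2, 0.2]]  # made-up frozen 3-class linear head


def logsumexp(v):
    m = max(v)
    return m + math.log(sum(math.exp(a - m) for a in v))


def node_energies(adj, x, w):
    """Node-wise energy E_i = -logsumexp(logits_i) from a stand-in frozen GNN:
    one mean-aggregation step over each node and its neighbours, then a linear head."""
    energies = []
    for i, nbrs in enumerate(adj):
        group = [i] + nbrs
        h = [sum(x[j][d] for j in group) / len(group) for d in range(len(x[i]))]
        logits = [sum(wk[d] * h[d] for d in range(len(h))) for wk in w]
        energies.append(-logsumexp(logits))
    return energies


def add_prompt(x, p):
    # Assumed prompt form: one learnable vector added to every node's features.
    return [[xi + pi for xi, pi in zip(row, p)] for row in x]


def energy_variation(adj, x, p, w):
    # Per-node change in energy: prompt-enhanced graph minus original graph.
    before = node_energies(adj, x, w)
    after = node_energies(adj, add_prompt(x, p), w)
    return [a - b for a, b in zip(after, before)]


def preference_loss(delta, k):
    # Hypothetical preference-style objective, NOT the paper's EPO loss:
    # -log sigmoid(margin), where margin = mean of the k largest variations minus
    # the mean of the rest (requires 0 < k < len(delta)); it falls as they separate.
    ranked = sorted(delta, reverse=True)
    top, rest = ranked[:k], ranked[k:]
    margin = sum(top) / len(top) - sum(rest) / len(rest)
    return math.log1p(math.exp(-margin))


def optimize_prompt(adj, x, w, k, steps=20, lr=0.5, eps=1e-4):
    # Finite-difference gradient descent on the prompt; W is never updated.
    p = [0.0] * len(x[0])
    for _ in range(steps):
        base = preference_loss(energy_variation(adj, x, p, w), k)
        grad = []
        for d in range(len(p)):
            q = list(p)
            q[d] += eps
            grad.append((preference_loss(energy_variation(adj, x, q, w), k) - base) / eps)
        p = [pi - lr * g for pi, g in zip(p, grad)]
    return p


def self_improving_scores(adj, x, w, k=1, rounds=3):
    # Each round: fit a prompt, accumulate |energy variation| per node, then feed
    # the prompt-enhanced features back in as the next round's input graph.
    scores = [0.0] * len(x)
    for _ in range(rounds):
        p = optimize_prompt(adj, x, w, k)
        delta = energy_variation(adj, x, p, w)
        scores = [s + abs(dv) for s, dv in zip(scores, delta)]
        x = add_prompt(x, p)
    return scores


if __name__ == "__main__":
    print(self_improving_scores(ADJ, X, W))
```

Running the module prints one accumulated variation score per node; under this hypothetical scoring rule, nodes whose energy shifts most across rounds look more OOD-like. Since SIGOOD flags whole graphs, a fuller version would also need some graph-level aggregation of these node scores, plus the actual trained GNN, a learned prompt generator, and automatic differentiation in place of finite differences.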

Data & Experimental Setup

The authors evaluate SIGOOD on 21 real-world datasets from diverse domains, including drug molecules, protein structures, and social networks. They compare SIGOOD against 12 state-of-the-art OOD and anomaly detection methods.

Results

SIGOOD achieves the strongest overall results against the baselines on both graph OOD detection and anomaly detection:

- For OOD detection, SIGOOD achieves the best average AUC score across 8 ID/OOD dataset pairs, with improvements of up to 14.20% over the previous best method.

- For anomaly detection, SIGOOD ranks first on 11 out of 13 benchmark datasets and achieves the best average rank among 8 competing methods.
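Both tasks are scored as a ranking problem. AUC (area under the ROC curve) equals the probability that a randomly chosen OOD graph receives a higher OOD score than a randomly chosen ID graph, with ties counted as one half. The short sketch below computes AUC directly from that pairwise definition; the function name and the scores are made up for illustration and are not results from the paper.

```python
def auc(id_scores, ood_scores):
    """Pairwise (Mann-Whitney) AUC, treating OOD graphs as the positive class."""
    wins = 0.0
    for o in ood_scores:
        for i in id_scores:
            if o > i:
                wins += 1.0
            elif o == i:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(id_scores) * len(ood_scores))


# Made-up detector scores (higher = more likely OOD).
print(auc([0.1, 0.4, 0.35], [0.8, 0.4, 0.9]))  # → 0.944... (8.5 of 9 pairs ranked correctly)
```

The pairwise loop is O(n·m) and is meant only to make the definition concrete; sorting-based implementations compute the same value faster.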

Interpretation

SIGOOD's effectiveness stems from its ability to iteratively refine OOD signals through energy-based optimization. By introducing prompts that amplify OOD patterns and using energy variations as a feedback signal, SIGOOD progressively improves its ability to distinguish ID from OOD graphs.

Limitations & Uncertainties

The paper does not discuss any major limitations of the SIGOOD framework. The authors acknowledge that SIGOOD does not achieve the top score on a few datasets, and they attribute these deviations to dataset-specific variance rather than to fundamental limitations of the approach.

What Comes Next

The authors suggest that the energy-based self-improving mechanism in SIGOOD could be further extended to other graph-related tasks, such as node classification and link prediction, to improve robustness against distribution shifts.