
Knowing Isn't Understanding: Re-grounding Generative Proactivity with Epistemic and Behavioral Insight

Key takeaway

Proactive AI assistants can act on what they know without understanding what they are missing. The paper argues that how far an assistant intervenes should depend on what it can justifiably claim to understand, not only on anticipated outcomes.

Quick Explainer

The core idea is that current proactive AI systems overemphasize action selection and neglect the underlying epistemic conditions that warrant intervention. The paper proposes "epistemic-behavioral coupling": a framework that links what the agent can justifiably claim to understand (epistemic legitimacy) to the degree of behavioral commitment it takes on. This allows an agent's commitment to be regulated based not only on anticipated outcomes, but on the justification behind its claims to know. The framework highlights failure modes such as overconfident action, denial of epistemic discomfort, and suppression of signals that could enable recovery or discovery. The key shift is from maximizing initiative to calibrating intervention: commitment becomes the primary control variable, adjusted through real-time assessment of understanding.

Deep Dive

Technical Deep Dive: Knowing Isn't Understanding

Overview

This technical deep dive summarizes the key insights from the preprint "Knowing Isn't Understanding: Re-grounding Generative Proactivity with Epistemic and Behavioral Insight". The paper argues that contemporary approaches to proactive AI systems are limited by an overemphasis on action selection, failing to model the underlying epistemic conditions that warrant intervention. It proposes a new framework of "epistemic-behavioral coupling" to characterize when proactive action is justified.

Limitations of Existing Proactivity Approaches

- Current proactive systems equate understanding with resolving explicit queries, ignoring cases where users lack awareness of what they are missing or what is worth considering.

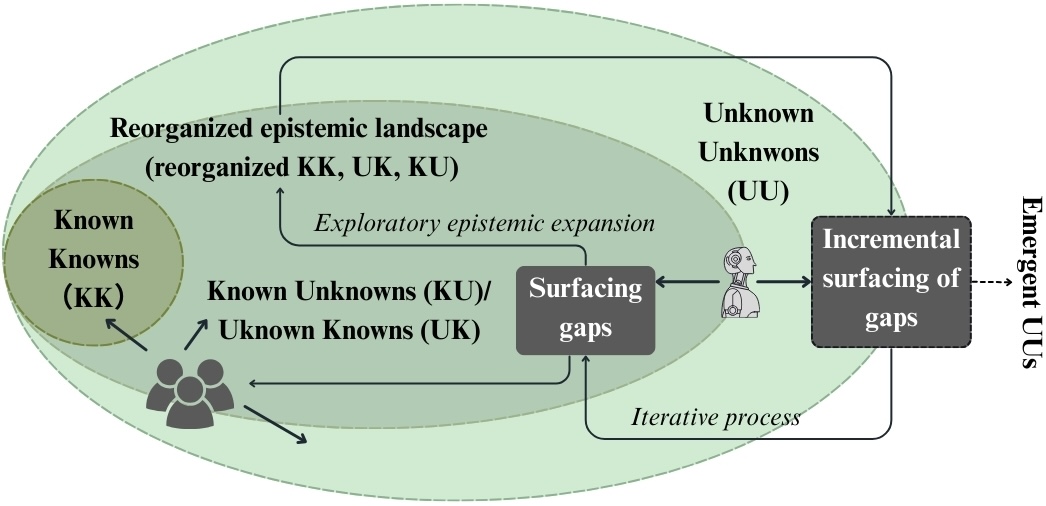

- Proactivity is typically framed as improved anticipation and efficiency, assuming user goals and uncertainties are already representable. This fails to address "epistemic incompleteness" where progress depends on engaging with unknown unknowns.

- Existing proactivity approaches externalize epistemic uncertainty rather than representing it. They handle uncertainty as confidence over known variables, not as an indicator of missing dimensions or alternative framings.
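To make the last point concrete, here is a minimal Python sketch. It illustrates the limitation and is not the paper's method. It assumes a hypothetical assistant that scores a fixed set of known intents; the intent names, scores, and the `missing_dimension_hint` threshold are all invented for illustration. Normalizing scores over the known set always yields a distribution that can look confident, even when every option is weakly supported.

```python
import math

def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Normalize raw scores into probabilities over the KNOWN options only."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

def entropy_bits(dist: dict[str, float]) -> float:
    """Shannon entropy in bits; low entropy reads as 'confident'."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def missing_dimension_hint(scores: dict[str, float], min_support: float = 2.0) -> bool:
    """Hypothetical check: True when no known option has strong raw support,
    hinting that the real need may lie outside the option set entirely."""
    return max(scores.values()) < min_support

# Hypothetical raw evidence for three known intents: all weak, one less weak.
raw = {"summarize": 0.9, "translate": -3.0, "schedule": -3.5}
dist = softmax(raw)

print(f"top intent: {max(dist, key=dist.get)}, p={dist['summarize']:.2f}")
print(f"entropy: {entropy_bits(dist):.2f} bits")
print(f"missing-dimension hint: {missing_dimension_hint(raw)}")
```

The distribution puts roughly 97% of its mass on `summarize`, yet the hint fires, because normalization over a fixed set cannot express "none of these." A real signal for missing dimensions would need far more than a threshold; the sketch only shows that such a signal has to be represented separately from confidence over known options.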

Epistemic Grounding

- The paper draws on the philosophy of ignorance to distinguish uncertainty from other forms of epistemic failure, such as error-as-knowledge, tacit knowing, taboo questions, and denial.

- These failures are not captured by probabilistic uncertainty estimates, leading to systematic failures like overconfident action, denial of epistemic discomfort, and suppression of signals that could enable recovery or discovery.

Behavioral Grounding

- The "inverted doughnut" model from organizational behavior research constrains proactivity by role scope and recoverability, but it does not address cases where actors are wrong or lack key understanding.

- This limitation is more acute for AI agents, which lack access to the social signals that make behavioral boundaries legible to humans.
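The gap described above can be shown with a toy boundary check. This Python sketch assumes a hypothetical `Action` record and reduces the inverted doughnut to two boolean tests, role scope and recoverability; it is a simplification for illustration, not a model from the paper. The `premise_is_true` field exists only so the example can show what the check never looks at.

```python
from dataclasses import dataclass

@dataclass
class Action:
    name: str
    within_role_scope: bool  # inside the actor's discretionary zone
    recoverable: bool        # effects can be undone if the action turns out wrong
    premise_is_true: bool    # whether the belief behind the action holds (not visible to the check)

def doughnut_permits(action: Action) -> bool:
    """Boundary check limited to role scope and recoverability."""
    return action.within_role_scope and action.recoverable

# Hypothetical: the agent archives files it wrongly believes are stale duplicates.
archive = Action("archive old drafts", within_role_scope=True,
                 recoverable=True, premise_is_true=False)

print(doughnut_permits(archive))  # True: the action passes although its premise is false
```

Both tests pass, so the action is permitted even though it rests on a false belief. For a human, colleagues' reactions might surface the mistake; an agent without those social signals has nothing in this check that would.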

Epistemic-Behavioral Coupling

- The paper introduces a two-dimensional framework coupling epistemic legitimacy (what the agent can justifiably claim to understand) and behavioral commitment (the degree of intervention and impact on outcomes).

- This joint space yields four regimes: observation/suggestion, justified action, exploration, and "epistemic overreach" (high commitment under low legitimacy).

- Failures like hallucination, suppressed signals, and runaway commitment can be understood as mis-couplings between knowing and acting.
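A minimal Python sketch of the joint space, assuming both dimensions can be scored in [0, 1] and split at a hypothetical threshold of 0.5. The summary fixes only that epistemic overreach is high commitment under low legitimacy; placing justified action at high legitimacy and high commitment follows from its name, while assigning exploration to the low-legitimacy, low-commitment quadrant and observation/suggestion to the high-legitimacy, low-commitment quadrant is our assumption. Real legitimacy and commitment estimates would not reduce to single numbers this easily.

```python
from enum import Enum

class Regime(Enum):
    OBSERVATION_SUGGESTION = "observation/suggestion"
    JUSTIFIED_ACTION = "justified action"
    EXPLORATION = "exploration"
    EPISTEMIC_OVERREACH = "epistemic overreach"

def classify(legitimacy: float, commitment: float, threshold: float = 0.5) -> Regime:
    """Map a (legitimacy, commitment) pair in [0, 1] x [0, 1] to one of four regimes."""
    if not (0.0 <= legitimacy <= 1.0 and 0.0 <= commitment <= 1.0):
        raise ValueError("scores must lie in [0, 1]")
    high_legit = legitimacy >= threshold
    high_commit = commitment >= threshold
    if high_commit:
        # High commitment is justified only when legitimacy is also high.
        return Regime.JUSTIFIED_ACTION if high_legit else Regime.EPISTEMIC_OVERREACH
    # Assumption: the summary does not say which low-commitment quadrant is which.
    return Regime.OBSERVATION_SUGGESTION if high_legit else Regime.EXPLORATION

for legit, commit in [(0.9, 0.2), (0.9, 0.8), (0.2, 0.2), (0.2, 0.9)]:
    print(legit, commit, classify(legit, commit).value)
```

Under this reading, a hallucinated claim acted on with high impact lands in the overreach quadrant, and the mis-coupling is corrected by moving down the commitment axis rather than by raising stated confidence.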

Implications and Future Work

- Regulating autonomy alone is insufficient; commitment must be the primary control variable, modulated based on epistemic legitimacy.

- Training incentives that reward task completion and coherence over epistemic justification systematically push toward epistemic overreach.

- The paper outlines an agenda focused on representing epistemic signals, detecting legitimacy degradation, and evaluating when restraint is warranted, with the aim of moving beyond maximizing initiative toward calibrating intervention.

- Longer-term directions include agents that can ask questions about unknown unknowns, reason about epistemic trajectories, and actively regulate their own initiative based on real-time assessment of understanding.
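One way to read "commitment as the primary control variable" is as a cap that legitimacy places on how much an agent may commit, combined with a check for legitimacy degradation. The Python sketch below is hypothetical throughout: the `CommitmentGovernor` class, the rule that commitment may not exceed the current legitimacy score, and the `drop_tolerance` and `restraint_level` values are illustrative assumptions, not mechanisms from the paper.

```python
from dataclasses import dataclass, field

@dataclass
class CommitmentGovernor:
    """Hypothetical controller: commitment, not autonomy, is the regulated variable."""
    drop_tolerance: float = 0.2   # how far legitimacy may fall from its peak before flagging
    restraint_level: float = 0.1  # commitment allowed while legitimacy is degraded
    history: list[float] = field(default_factory=list)

    def degraded(self) -> bool:
        """Flag degradation: the current estimate sits well below the best seen so far."""
        if len(self.history) < 2:
            return False
        return max(self.history) - self.history[-1] > self.drop_tolerance

    def allowed_commitment(self, requested: float, legitimacy: float) -> float:
        """Record the legitimacy estimate, then cap the requested commitment."""
        self.history.append(legitimacy)
        if self.degraded():
            # Restraint: fall back to low-impact behavior while understanding is in doubt.
            return min(requested, self.restraint_level)
        # Otherwise commitment may not exceed current epistemic legitimacy.
        return min(requested, legitimacy)

gov = CommitmentGovernor()
for requested, legitimacy in [(0.9, 0.8), (0.9, 0.85), (0.9, 0.5)]:
    print(gov.allowed_commitment(requested, legitimacy))
```

The three steps allow commitments of 0.8, 0.85, and 0.1: the last request is cut to the restraint level because legitimacy fell 0.35 below its peak. Because the peak is taken over the whole history, the governor stays in restraint until legitimacy recovers to within `drop_tolerance` of its best value; a windowed peak would be a natural refinement.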
