Story

LORA-CRAFT: Cross-layer Rank Adaptation via Frozen Tucker Decomposition of Pre-trained Attention Weights

Key takeaway

Researchers developed a way to make AI language models more efficient by decomposing attention weights, which could lead to faster and cheaper AI systems.

Quick Explainer

LORA-CRAFT introduces a parameter-efficient fine-tuning method that applies Tucker tensor decomposition to the pre-trained attention weights of transformer models. It constructs a 3D tensor from the attention weights across layers, decomposes this tensor into a core and factor matrices, and then trains only small adaptation matrices on top of the frozen decomposition. This residual-preserving approach allows LORA-CRAFT to achieve comparable performance to methods with significantly larger parameter budgets, making it a highly efficient point on the accuracy-efficiency tradeoff curve.

Deep Dive

LORA-CRAFT: Cross-layer Rank Adaptation via Frozen Tucker Decomposition of Pre-trained Attention Weights

Overview

- The authors introduce CRAFT (Cross-layer Rank Adaptation via Frozen Tucker), a parameter-efficient fine-tuning (PEFT) method that applies Tucker tensor decomposition to pre-trained attention weight matrices stacked across transformer layers.

- CRAFT trains only small square adaptation matrices on the resulting frozen Tucker factors, achieving performance comparable to methods with significantly larger parameter budgets.

- The key innovations are:

- Cross-layer decomposition of pre-trained weights using full Tucker-3 decomposition via HOSVD

- Freezing of all decomposition factors, with only small adaptation matrices as trainable parameters

- A residual-preserving formulation that guarantees exact recovery of the original pre-trained weights at initialization

Methodology

CRAFT proceeds in three stages:

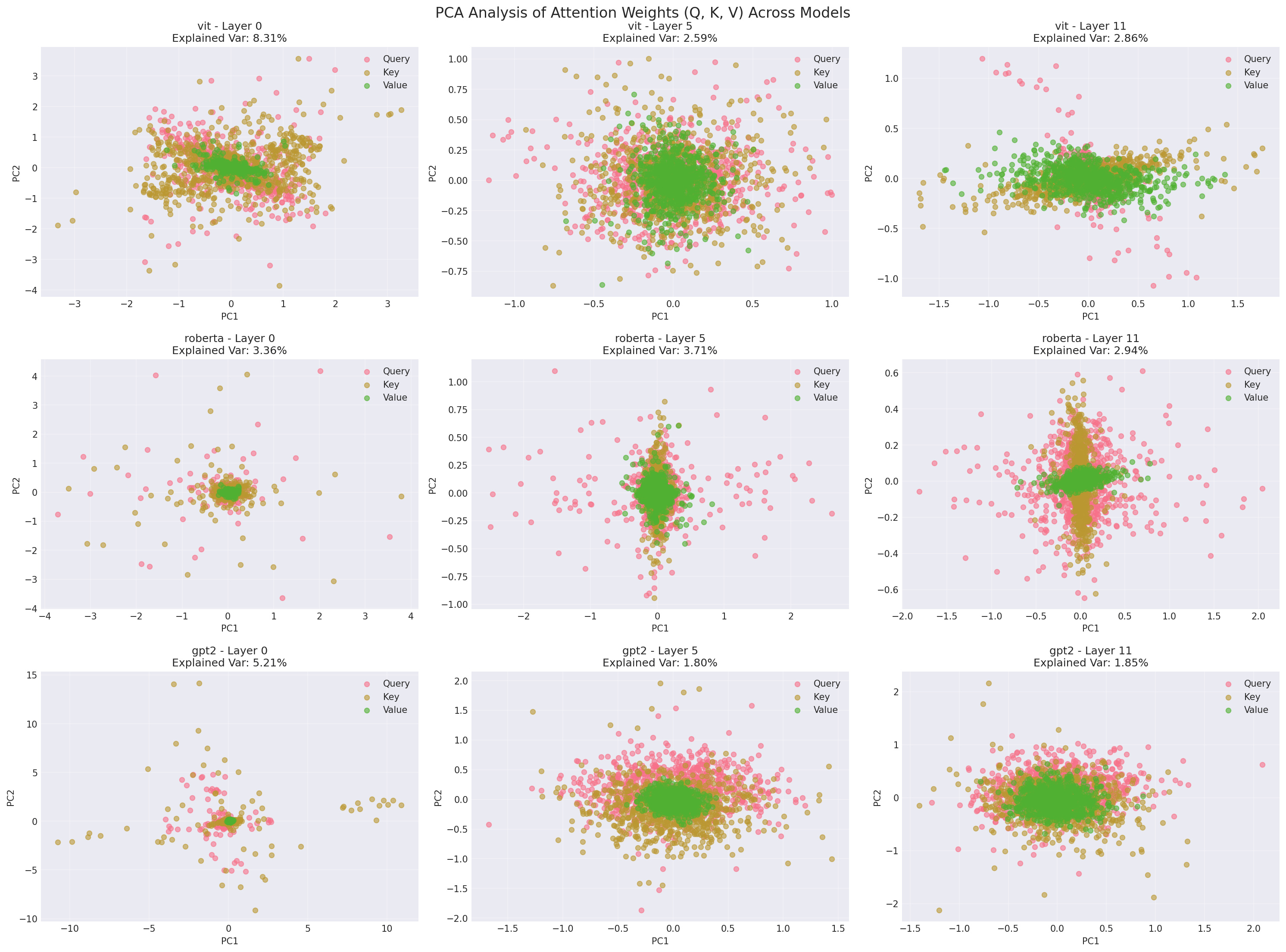

- Tensor Construction: For each projection type (Q, V), the pre-trained attention weight matrices are stacked across layers into a 3D tensor.

- HOSVD Decomposition and Freezing: The Tucker-3 decomposition of each 3D tensor is computed via HOSVD, yielding a core tensor and three factor matrices. All decomposition factors are then frozen.

- Residual-Preserving Adaptation: Small trainable square adaptation matrices are introduced and initialized near identity. The adapted weights are computed as the original weights plus the difference between the adapted and initial reconstructions.

The adapted weight matrices are used in the transformer attention mechanism, while the K and O weights remain frozen at their original pre-trained values.

Advantages

- Extreme Parameter Efficiency: CRAFT achieves accuracy comparable to methods with 7-75× more trainable parameters. The Tucker adaptation parameter count is independent of model dimension and depth at fixed ranks.

- Storage Savings: The pre-trained weights can be replaced by the compact Tucker factors and adaptation matrices, enabling significant memory savings.

- Faster Training: The small trainable parameter space is expected to lead to faster per-epoch training and reduced optimizer memory.

Experimental Results

On the GLUE benchmark, CRAFT with only 41K Tucker adaptation parameters:

- Matches the performance of a 3M-parameter adapter on RoBERTa-large

- Trails LoRA by 2.7 points on RoBERTa-base, reflecting the more constrained adaptation space

CRAFT offers an extreme point on the efficiency-accuracy Pareto frontier, prioritizing parameter efficiency.

Limitations and Future Work

- Evaluation focused on RoBERTa and GLUE; extension to larger LLMs and generation tasks would be valuable.

- The HOSVD pre-computation adds one-time setup cost of O(NL d^2).

- Results are reported for a single seed; variance analysis across multiple seeds is needed.

- Establishing rank-scaling guidelines for significantly deeper or wider architectures is an important direction.