Story

Conv-FinRe: A Conversational and Longitudinal Benchmark for Utility-Grounded Financial Recommendation

Key takeaway

Researchers developed a benchmark to evaluate AI systems that give financial advice, focusing on long-term utility rather than just imitating user behavior, which can be short-sighted in volatile markets.

Quick Explainer

Conv-FinRe is a benchmark that evaluates how well large language models can provide personalized financial recommendations aligned with an investor's long-term financial goals, rather than just mimicking short-term market behaviors. The core idea is to infer the user's latent utility function from their longitudinal decision history, and then assess the model's ability to reason about this inferred utility when generating recommendations. This involves reconciling the user's actual choices with idealized utility-based rankings, market momentum trends, and risk-sensitive portfolios. The benchmark's novel aspect is its focus on grounding recommendations in the user's underlying financial objectives, rather than just optimizing for behavioral imitation or profit-chasing.

Deep Dive

Technical Deep Dive: Conv-FinRe

Overview

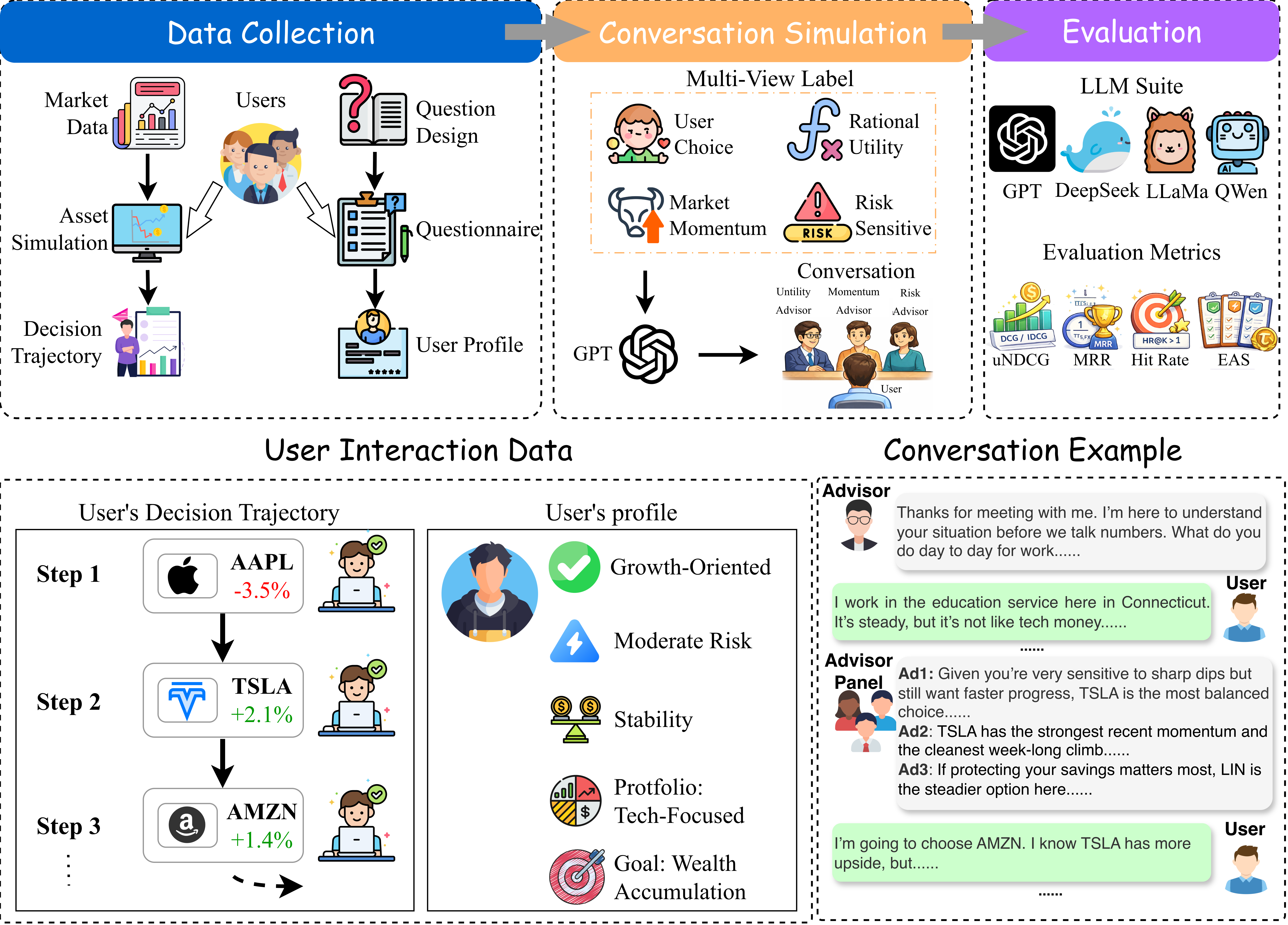

Conv-FinRe is a new benchmark for evaluating large language models (LLMs) on the task of personalized financial recommendation. Unlike traditional recommender benchmarks that focus on behavioral imitation, Conv-FinRe assesses how well LLMs can reason about an investor's latent utility function and provide recommendations aligned with their long-term financial goals, rather than just mimicking noisy short-term actions.

Problem & Context

- Financial recommendation is challenging because investor actions are often affected by market noise, emotions, and shifting constraints, rather than stable risk tolerance or long-term objectives.

- Existing recommendation benchmarks struggle with three key issues:

- Behavior-as-truth: Relying only on relevance signals like clicks or ratings without grounding in utility.

- Utility blindness: Finance datasets often focus on prediction or trading objectives rather than user-specific decision quality.

- Single-view evaluation: Cannot diagnose whether an LLM is reasoning about risk-sensitive utility, blindly chasing market trends, or overfitting user noise.

Methodology

Task Formulation

- Conv-FinRe models longitudinal advisory interactions, integrating market signals, inferred user preferences, and competing expert recommendations.

- The task evaluates LLM alignment across four complementary reference views:

- User Choice ($y_user$): The user's actual selections

- Rational Utility ($y_util$): An idealized ranking based on a calibrated utility function

- Market Momentum ($y_mom$): A profit-oriented ranking based on recent returns

- Risk Sensitivity ($y_safe$): A conservative ranking that penalizes volatility and downside risk

- The LLM must reconcile these competing advisory principles to produce a final ranking that reflects its inferred understanding of the user's financial objectives.

Latent Preference Grounding

- The user's true utility function is not exposed to the LLM. Instead, it is inferred via inverse optimization using the user's longitudinal behavioral trajectory.

- The inferred utility function balances expected return, volatility, and downside risk according to user-specific risk sensitivity parameters.

- The Rational Utility ($yutil$) and Risk Sensitivity ($ysafe$) views are constructed using this inferred utility function.

Data Collection and Conversation Simulation

- The benchmark uses a compact universe of 10 representative S&P 500 stocks, grouped by market beta to ensure balanced exposure to systematic risk.

- User interaction data is collected through a two-stage protocol:

- Static user profiles capturing investor demographics, financial capacity, experience, and risk attitudes.

- Longitudinal decision trajectories from a custom asset simulation tool.

- Structured conversations are generated to instantiate the advisory task, including an onboarding interview and a series of step-wise dialogues.

Evaluation Metrics

- Utility-based NDCG (uNDCG): Measures alignment with the user's latent utility function.

- MRR and Hit Rate: Assess recovery of the user's observed choices.

- Expert Alignment Score (EAS): Quantifies how well the model-generated rankings agree with the Rational Utility, Market Momentum, and Risk Sensitivity expert views.

Results

Overall Performance

- Most models achieve high uNDCG, indicating strong baseline performance in utility-based ranking.

- However, high uNDCG does not always translate into better recovery of the User Choice, revealing a fundamental trade-off between rational decision quality and behavioral alignment.

Expert Alignment Analysis

- There is a strong coupling between Rational Utility and Market Momentum alignment, as high-momentum assets often dominate utility calculations during trending markets.

- DeepSeek-V3.2 demonstrates the most balanced profile, maintaining stable and high alignment with the Risk Sensitivity view while avoiding extreme bias toward return-driven metrics.

- Llama3-XuanYuan3-70B-Chat, a domain-specific model, achieves high behavioral hit rates despite lower expert alignment scores, suggesting that its financial expertise manifests as empathetic alignment with User Choices rather than strict adherence to idealized mathematical formulas.

Preference Discovery Dynamics

- Models show heterogeneous dynamics in how they leverage conversational history to improve utility-based alignment.

- Adaptive Advisors (GPT-5.2, DeepSeek-V3.2) effectively integrate cross-turn preference signals.

- Transaction-driven Analysts (GPT-4o, Llama-3.3-70B) rely more on contemporaneous market information than personalization.

- Behavioral Overfitters (Qwen2.5-72B, Llama3-XuanYuan3) experience degraded utility alignment when history is introduced, prioritizing behavioral mimicry over stable preference inference.

Limitations & Uncertainties

- The benchmark uses a compact universe of 10 stocks, which may not fully capture the complexity of real-world financial markets.

- The simulated conversations, while validated for realism, are still generated rather than collected from actual advisory interactions.

- The inverse optimization approach for inferring latent user preferences has inherent assumptions and potential biases.

What Comes Next

- Expand the stock universe and incorporate additional market data (e.g., fundamental factors, macroeconomic indicators) to increase the complexity and realism of the benchmark.

- Collect real-world advisory dialogues to validate and further refine the benchmark, potentially through collaborations with financial institutions.

- Explore more sophisticated preference modeling techniques beyond inverse optimization, such as multi-task learning or hierarchical Bayesian methods.

- Investigate ways to better integrate market dynamics and macroeconomic conditions into the preference inference and advisory process.