
Toward Trustworthy Evaluation of Sustainability Rating Methodologies: A Human-AI Collaborative Framework for Benchmark Dataset Construction

Key takeaway

Researchers created a framework to help generate more reliable datasets for evaluating sustainability rating methodologies, which could lead to more accurate and trustworthy ratings that impact investment and policy decisions.

Quick Explainer

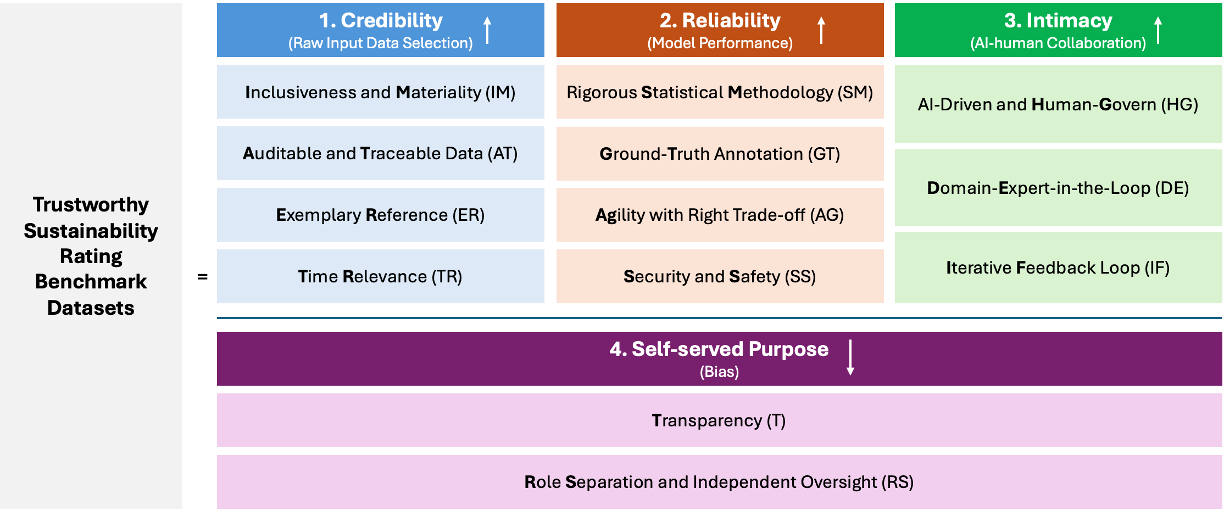

The proposed STRIDE framework aims to establish trustworthy benchmark datasets for evaluating sustainability rating methodologies. It models human-AI trust across four key components: data credibility, modeling reliability, human-AI collaboration, and transparent purpose. STRIDE guides the data selection, annotation, and modeling process to ensure representative, auditable, and rigorously validated inputs. The framework also introduces SR-Delta, a discrepancy analysis procedure that uses the STRIDE-curated datasets to surface insights for potential adjustments to existing rating approaches. This human-AI collaborative approach addresses key challenges in sustainability data and measurement, aiming to enable more reliable and actionable sustainability assessment.

Deep Dive

Technical Deep Dive: Toward Trustworthy Evaluation of Sustainability Rating Methodologies

Overview

This work proposes a human-AI collaborative framework called STRIDE (Sustainability Trust Rating & Integrity Data Equation) for constructing trustworthy benchmark datasets to evaluate sustainability rating methodologies. It also introduces SR-Delta, a discrepancy analysis procedure that uses the STRIDE-guided datasets to surface insights for potential adjustments to existing rating approaches.

The key challenges addressed include:

- Inconsistent sustainability disclosure practices across companies and reporting standards

- Limited high-quality input data for sustainability ratings

- Lack of a reliable, end-to-end pipeline for acquiring, annotating, and maintaining sustainability-related data

Methodology: STRIDE Framework

STRIDE models human-machine trust as a function of four components:

- Credibility: Provides guidance for selecting high-quality, representative input data. Includes:

  - Inclusiveness and Materiality: Ensuring broad coverage across sectors, regions, and relevant sustainability metrics

  - Auditable and Traceable Data: Verifying the provenance and integrity of input data

  - Exemplary Reference: Using high-governance, well-reported companies as benchmarks

  - Time Relevance: Ensuring data reflects the current context

- Reliability: Ensures rigor and robustness of the modeling process. Includes:

  - Rigorous Statistical Methodology: Achieving sample saturation and representativeness

  - Ground-Truth Annotation: Incorporating expert-level human validation and annotation

  - Agility with Right Trade-offs: Maintaining adaptability while articulating methodological choices

  - Security and Safety: Minimizing risks of harmful or biased outputs

- Intimacy: Guides the design of human-AI collaboration. Includes:

  - AI-Driven and Human-Governed: Retaining human oversight and decision-making authority

  - Domain-Expert-in-the-Loop: Involving subject-matter experts in data curation and model validation

  - Iterative Feedback Loop: Enabling continuous evaluation and refinement of the system

- Self-Served Purpose: Ensures transparency and independence. Includes:

  - Transparency: Documenting assumptions, constraints, and limitations

  - Role Separation and Independent Evaluation: Delineating responsibilities across system design, implementation, and assessment
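The summary does not give the functional form of the STRIDE trust equation, and the authors acknowledge that its weighting scheme is not formally defined. Under that caveat, the minimal sketch below assumes each sub-criterion is scored in [0, 1], averages the sub-criteria within each component, and combines the four components with a weighted mean (equal weights by default). The identifiers, the [0, 1] scale, and the aggregation rule are all illustrative assumptions, not the paper's equation.

```python
from typing import Dict, List, Optional

# Component -> sub-criteria, following the STRIDE description above.
# The snake_case identifiers are illustrative, not taken from the paper.
STRIDE_COMPONENTS: Dict[str, List[str]] = {
    "credibility": [
        "inclusiveness_materiality",
        "auditable_traceable",
        "exemplary_reference",
        "time_relevance",
    ],
    "reliability": [
        "statistical_rigor",
        "ground_truth_annotation",
        "agility_tradeoffs",
        "security_safety",
    ],
    "intimacy": [
        "ai_driven_human_governed",
        "domain_expert_in_loop",
        "iterative_feedback",
    ],
    "self_served_purpose": ["transparency", "role_separation"],
}


def component_score(sub_scores: Dict[str, float], criteria: List[str]) -> float:
    """Mean of one component's sub-criterion scores; unscored criteria count as 0."""
    values = [sub_scores.get(name, 0.0) for name in criteria]
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("sub-criterion scores must lie in [0, 1]")
    return sum(values) / len(values)


def stride_score(
    sub_scores: Dict[str, float], weights: Optional[Dict[str, float]] = None
) -> float:
    """Weighted mean of the four component scores (equal weights by default: an assumption)."""
    if weights is None:
        weights = {name: 1.0 for name in STRIDE_COMPONENTS}
    total = sum(weights[name] for name in STRIDE_COMPONENTS)
    if total <= 0:
        raise ValueError("component weights must sum to a positive number")
    weighted = sum(
        weights[name] * component_score(sub_scores, criteria)
        for name, criteria in STRIDE_COMPONENTS.items()
    )
    return weighted / total


# Example: every sub-criterion fully met except the credibility ones, scored at 0.5.
example = {c: 1.0 for crits in STRIDE_COMPONENTS.values() for c in crits}
example.update({c: 0.5 for c in STRIDE_COMPONENTS["credibility"]})
print(stride_score(example))  # 0.875
```

Keeping the component-to-criteria mapping in a single table makes the assumed structure easy to audit, and trying a different weighting (or a different aggregation rule altogether) only requires changing `stride_score`.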

Data & Experimental Setup

The authors constructed a STRIDE-guided benchmark dataset for Luxshare Precision Industry Co., a Fortune 500 electronics manufacturer, using its 2024 sustainability report. The dataset achieved an overall STRIDE score of 0.56.
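As a rough illustration of what one entry in such a dataset might carry, the sketch below pairs each AI-extracted value with its source location, a SHA-256 fingerprint of the supporting excerpt (for the "Auditable and Traceable Data" criterion), and an expert sign-off (for the "AI-Driven and Human-Governed" criterion). The `BenchmarkRecord` schema, its field names, and the `approve` workflow are hypothetical; the summary does not describe the dataset's actual format.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


def _fingerprint(text: str) -> str:
    """SHA-256 hex digest of the evidence excerpt, used to detect later edits."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class BenchmarkRecord:
    """One hypothetical benchmark entry extracted from a sustainability report."""

    company: str
    metric: str                # disclosure item the value refers to
    source_document: str       # report the evidence comes from
    page: int                  # location of the evidence in that report
    excerpt: str               # verbatim supporting text
    ai_proposed_value: str     # value suggested by the AI extraction step
    expert_value: Optional[str] = None   # set only by a human reviewer
    reviewer: Optional[str] = None
    excerpt_sha256: str = field(init=False)

    def __post_init__(self) -> None:
        # Record the fingerprint at creation time so audits can re-check it.
        self.excerpt_sha256 = _fingerprint(self.excerpt)

    def approve(self, reviewer: str, value: Optional[str] = None) -> None:
        """Human-governed step: a domain expert confirms or overrides the AI proposal."""
        self.reviewer = reviewer
        self.expert_value = self.ai_proposed_value if value is None else value

    @property
    def final_value(self) -> Optional[str]:
        """Only expert-validated values count; unreviewed records return None."""
        return self.expert_value

    def is_intact(self) -> bool:
        """True if the excerpt still matches the fingerprint taken at creation."""
        return _fingerprint(self.excerpt) == self.excerpt_sha256
```

In this design a record's `final_value` stays `None` until a reviewer calls `approve`, which keeps decision authority with the domain expert, while `is_intact` lets a later audit confirm that the cited excerpt has not been altered.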

Results: SR-Delta

The authors used the STRIDE-guided Luxshare dataset to evaluate MSCI's sustainability rating methodology, under which Luxshare had been assigned a "BB" rating. The analysis surfaced two key discrepancies:

- Ambiguity in disclosure definitions: The STRIDE-guided approach exposed ambiguity in how MSCI interpreted executive pay disclosure, leading to a potential rating adjustment of +1.2.

- Over- or under-penalization: The STRIDE-guided approach identified instances where MSCI's scoring did not align with the underlying evidence, such as MSCI's apparent overestimation of Luxshare's chemical safety management, leading to a potential adjustment of -1.1 to -0.5.
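The numeric side of SR-Delta can be pictured as a per-issue comparison between the provider's score and a score re-derived from the STRIDE-guided evidence. The sketch below is a hypothetical illustration, not the authors' procedure: it assumes both scores share one numeric scale, represents the evidence-based score as a low/high interval (echoing the range reported for chemical safety), and flags an issue only when the whole delta interval clears a chosen `tolerance`. A positive delta corresponds to over-penalization and a negative delta to under-penalization; qualitative findings such as definitional ambiguity would still need expert review.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IssueComparison:
    """Provider score vs. an evidence-based score range for one key issue."""

    issue: str
    provider_score: float   # score assigned by the rating provider
    evidence_low: float     # lower bound re-derived from the STRIDE-guided data
    evidence_high: float    # upper bound re-derived from the STRIDE-guided data


@dataclass(frozen=True)
class Discrepancy:
    issue: str
    delta_low: float        # evidence_low - provider_score
    delta_high: float       # evidence_high - provider_score
    label: str


def sr_delta(comparisons: List[IssueComparison], tolerance: float = 0.25) -> List[Discrepancy]:
    """Flag issues whose entire delta range (evidence minus provider) lies beyond the tolerance."""
    flagged = []
    for c in comparisons:
        if c.evidence_low > c.evidence_high:
            raise ValueError(f"{c.issue}: evidence_low exceeds evidence_high")
        delta_low = round(c.evidence_low - c.provider_score, 2)
        delta_high = round(c.evidence_high - c.provider_score, 2)
        if delta_low > tolerance:
            label = "over-penalized"    # provider scored below all plausible evidence
        elif delta_high < -tolerance:
            label = "under-penalized"   # provider scored above all plausible evidence
        else:
            continue                    # range not clearly beyond the tolerance
        flagged.append(Discrepancy(c.issue, delta_low, delta_high, label))
    return flagged
```

Keeping the delta as a range rather than a point estimate makes uncertainty in the evidence explicit: an issue is flagged only when every plausible value lies beyond the tolerance in the same direction.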

Limitations & Uncertainties

- The work focuses on establishing the methodological framework rather than a fully implemented, end-to-end solution with large-scale validation.

- Some elements of the STRIDE framework, such as the weighting scheme and thresholding rules, are not formally defined.

- The case study with Luxshare represents a limited-scope implementation, and further validation across diverse rating providers, industries, and regulatory contexts is needed.

What Comes Next

The authors call on the broader AI research community to:

- Contribute perspectives and evidence to iteratively refine and strengthen the STRIDE trust equation.

- Participate in large-scale empirical experiments to validate, refine, and strengthen the STRIDE and SR-Delta frameworks.

- Foster open dialogue to develop comparable and actionable sustainability measurement approaches, given the collective-action nature of the problem and the rapidly evolving domain.