Story

A Scalable Framework for Evaluating Health Language Models

Key takeaway

Researchers developed a scalable framework to evaluate how well AI language models can analyze health data and provide personalized medical insights, which could improve patient care and public health monitoring.

Quick Explainer

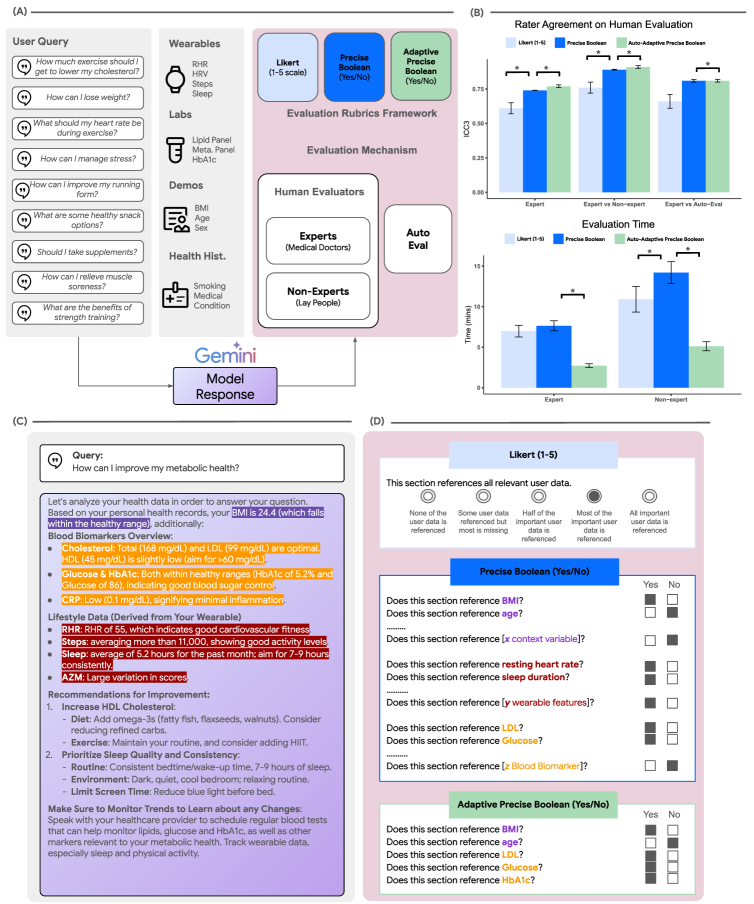

This framework introduces a novel approach to efficiently and reliably evaluating open-ended responses from large language models in the domain of health and metabolic wellness. The key innovation is the use of precise boolean rubrics - a data-driven method to decompose complex evaluation criteria into more granular yes/no questions. This enhances consistency and enables automated assessment. The framework further adapts the rubric set to focus only on the most relevant criteria for each query, reducing the evaluation burden without compromising reliability. Notably, this framework demonstrates heightened sensitivity to detect when language models ignore or mishandle personal health data, a crucial capability for safety-critical healthcare applications.

Deep Dive

Technical Deep Dive: A Scalable Framework for Evaluating Health Language Models

Overview

This work introduces a novel framework for efficiently and reliably evaluating open-ended responses from large language models (LLMs) in the domain of health and metabolic wellness. The key components of this framework are:

- Precise Boolean Rubrics: A data-driven approach to decomposing complex Likert-scale evaluation criteria into more granular, boolean (yes/no) questions. This enhances inter-rater reliability and enables automated evaluation.

- Adaptive Precise Boolean Rubrics: An extension that dynamically filters the full rubric set to only the most relevant criteria for a given user query and LLM response, reducing the evaluation burden without compromising reliability.

- Validation on a large-scale real-world metabolic health dataset, demonstrating the framework's:

- Higher inter-rater agreement compared to traditional Likert scales

- Reduced evaluation time by over 50%

- Ability to detect abnormalities in LLM responses when personal health data is missing or ignored

Methodology

- Transformed Likert-scale evaluation criteria into more granular Precise Boolean rubrics using a data-driven approach

- Leveraged a state-of-the-art LLM (Gemini 1.5 Pro) as a zero-shot rubric question classifier to create Adaptive Precise Boolean rubrics

- Validated the framework on a large-scale metabolic health dataset (WEAR-ME), including:

- Comparing Likert, Precise Boolean, and Adaptive Precise Boolean rubrics

- Measuring inter-rater agreement and evaluation time

- Assessing sensitivity to perturbations in personal health data

Results

- Precise Boolean rubrics demonstrated substantially higher inter-rater reliability compared to Likert scales, despite comprising more evaluation criteria

- Adaptive Precise Boolean rubrics maintained the reliability improvements while reducing evaluation time by over 50%

- The Precise Boolean framework was more sensitive to missing or ignored personal health data in LLM responses, consistently detecting quality degradation, unlike the Likert approach

Interpretation

- The Precise Boolean approach shifts the complexity from subjective human judgment to upfront rubric design, enhancing reliability and transparency

- Automated adaptation of the rubric set further improves efficiency without compromising signal quality

- The framework's sensitivity to data omission is crucial for safety-critical domains like healthcare, where accurate use of personal information is paramount

Limitations & Uncertainties

- The study relied on synthetic user personas, which may not fully capture real-world diversity and nuance

- Potential for annotator bias, despite the reliability improvements, remains a concern that requires further investigation

- Scalability and feasibility of the framework for industrial-scale deployment is an open question

Future Work

- Incorporate qualitative feedback mechanisms to address limitations of binary rubrics

- Explore integrating the framework into model training and fine-tuning pipelines

- Assess robustness against adversarial manipulation of auto-evaluation systems

- Validate the framework on additional real-world health datasets and use cases